A Kickstart in Deep Learning Real-Time Video Processing

Working with video data in ML. Streaming video through HTTP, WebSockets, WebRTC with Python. A kick-start towards vision ML.

This article shares a few insights from my experience with real-time video processing in deep learning applications, in the cloud and at the edge. Apart from getting the right data, bridging the research-to-production gap, monitoring, versioning, and improving huge datasets (>300 GB), there’s a substantial engineering component attached to any Deep Learning project.

But let’s start simple!

Throughout the article, we’ll cover the basics of video data, the libraries you can use, how video formats work, and how to set up a basic app that streams media between two entities (API, Client).

Table of Contents:

What is video processing?

Encoder, Decoder, Bitrate, Containers

Video re-encoding

Common libraries to work with video in Python

Python OpenCV

Albumentations

PyAV FFmpeg wrappers

Video Streaming Methods

Streaming over HTTP

Streaming over WebSockets

Streaming over WebRTC

Conclusion - End of Part I

Let’s get into it!

1. Video Processing

Video processing refers to a set of techniques and methods used to manipulate and analyze video streams.

Codec

A codec is a hardware- or software-based process that compresses (encodes) and decompresses (decodes) large amounts of video and audio data.

Let’s take an example and compute the raw size of a 60-second 1920x1080 30 FPS video file.

W = Width (pixels)

H = Height (pixels)

FPS = Frame Rate (frames/s)

BIT = Bit Depth (bits per pixel)

DUR = Duration (video length in seconds)

FSize (bytes) = W x H x FPS x (BIT / 8) x DUR

FSize (bytes) = 1920 x 1080 x 30 x (24 / 8) x 60 = 11,197,440,000 bytes

FSize (MB) = 11,197,440,000 / (1024 ** 2) ≈ 10,678.71 MB

FSize (GB) = 10,678.71 / 1024 ≈ 10.43 GB

In contrast, compressing this video to MP4 with H.264 would yield a file of roughly 100-200 MB, depending on the bitrate. Popular codecs are H.264 (AVC) and H.265 (HEVC).
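The arithmetic above can be wrapped in a small helper to try other resolutions and frame rates. This is a minimal sketch; the function name `raw_video_size_bytes` is made up for illustration.

```python
def raw_video_size_bytes(width, height, fps, bits_per_pixel, duration_s):
    """Size of uncompressed video: W x H x FPS x (BIT / 8) x DUR."""
    bytes_per_frame = width * height * bits_per_pixel // 8
    return bytes_per_frame * fps * duration_s


# 60 seconds of 1920x1080 video at 30 FPS with 24 bits per pixel
size = raw_video_size_bytes(1920, 1080, 30, 24, 60)
print(size)  # 11197440000
print(f"{size / 1024**3:.2f} GB")
```

Doubling the frame rate or the duration doubles the raw size, which is why uncompressed video is rarely stored or streamed as-is.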

Bitrate

Bitrate refers to the amount of data processed in a given amount of time, typically measured in bits per second (bps). In video, bitrate is crucial as it directly affects both quality and size: a higher bitrate generally means better quality and a larger file.
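To make the link between bitrate and file size concrete, here is a rough sketch. The 20 Mbps figure is an assumed example value, not a recommendation, and the helper names are hypothetical.

```python
def encoded_size_mb(bitrate_mbps, duration_s):
    """Approximate stream size in megabytes (1 MB = 10**6 bytes)."""
    return bitrate_mbps * duration_s / 8


def average_bitrate_mbps(size_bytes, duration_s):
    """Average bitrate in megabits per second for a stream of a given size."""
    return size_bytes * 8 / duration_s / 1_000_000


# One minute of video encoded at an assumed 20 Mbps
print(encoded_size_mb(20, 60))  # 150.0

# The raw 1080p, 30 FPS, 24-bit minute from the codec example, as a bitrate
print(average_bitrate_mbps(11_197_440_000, 60))
```

The raw stream works out to roughly 1.5 Gbps, which gives a sense of how much work the codec is doing.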

Resolution

Indicates the number of pixels in each dimension that can be displayed. We’re all familiar with HD (1280x720), FHD (1920x1080), and 4K (3840x2160), which are the most widely used resolutions.

Container Formats

Containers such as MP4 and AVI encapsulate video, audio, and metadata. They manage how data is stored and interchanged without affecting the quality. When your media player plays a video, it processes blocks of data from within a container.

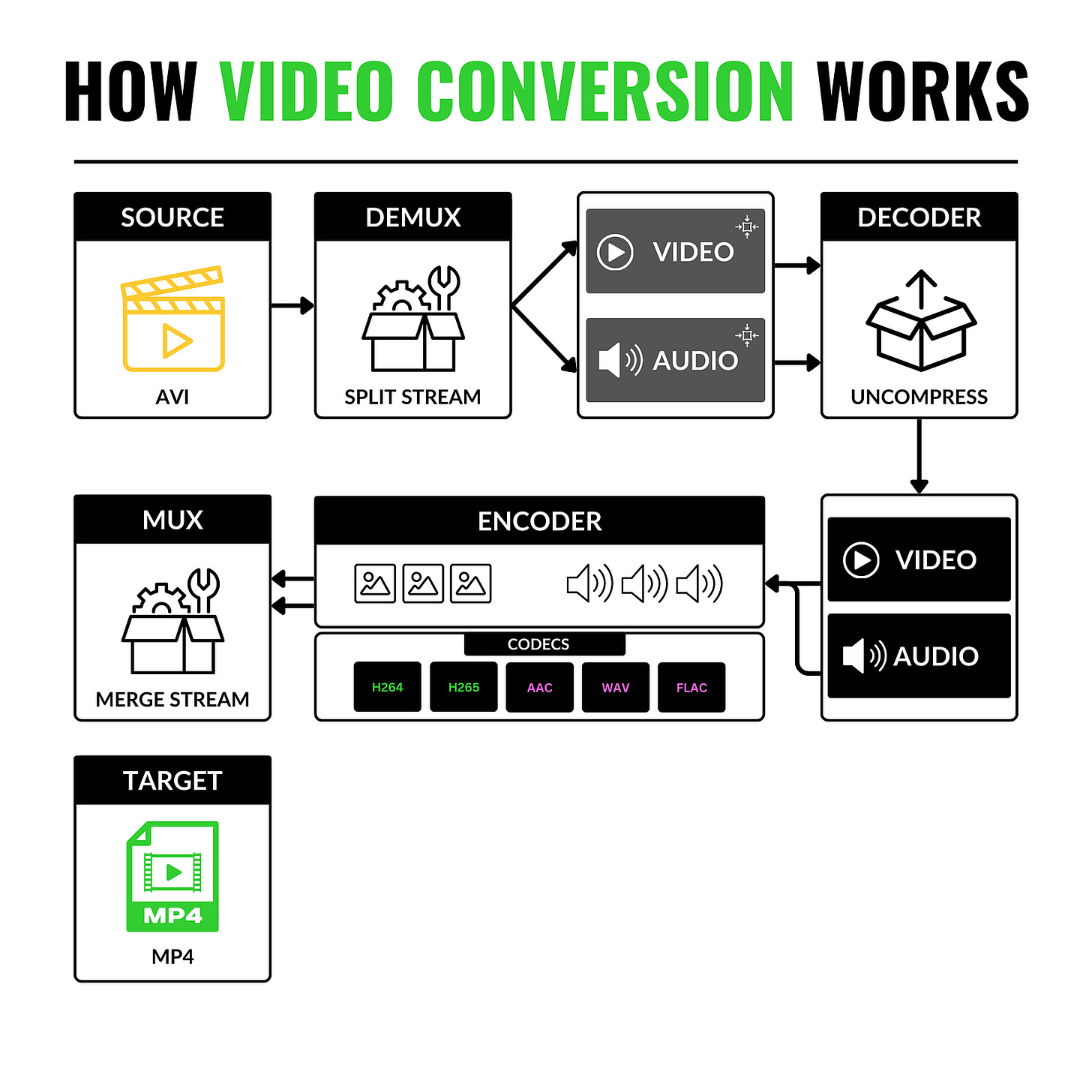

On a more detailed note, container formats make it simple to convert from one video format to another. In this case, the following key terms are employed:

SOURCE: the video in format A.

DEMUX: the component that splits the video stream from the audio stream.

DECODER: decompresses both streams (from their compressed format to raw frames and samples).

ENCODER: re-compresses the raw streams using the new video and audio codecs.

MUX: re-links and synchronizes the video stream with the audio stream.

TARGET: writes the new data stream (video + audio) into a new container.
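In practice, a tool such as FFmpeg runs all of these stages in a single invocation. The sketch below only builds and prints the command. The file names are placeholders, and `libx264` and `aac` are assumed target codecs that your FFmpeg build needs to include.

```python
import shutil
import subprocess


def build_transcode_cmd(source, target, video_codec="libx264", audio_codec="aac"):
    """FFmpeg command that demuxes, decodes, re-encodes, and muxes in one pass."""
    return [
        "ffmpeg", "-y",        # overwrite the target if it already exists
        "-i", source,          # SOURCE: demuxed and decoded by FFmpeg
        "-c:v", video_codec,   # ENCODER for the video stream
        "-c:a", audio_codec,   # ENCODER for the audio stream
        target,                # TARGET: container is inferred from the extension
    ]


def transcode(source, target):
    """Run the conversion; requires the ffmpeg executable on PATH."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg executable not found on PATH")
    subprocess.run(build_transcode_cmd(source, target), check=True)


# Placeholder file names, shown only to illustrate the command shape
print(" ".join(build_transcode_cmd("input.avi", "output.mp4")))
```

Calling `transcode("input.avi", "output.mp4")` would perform the actual conversion, assuming the input file exists.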

2. Libraries to work with video in Python

When working on Computer Vision projects, image processing and manipulation are mandatory.

Here’s a list of libraries and tools a Computer Vision Engineer has to know/work with:

1. OpenCV

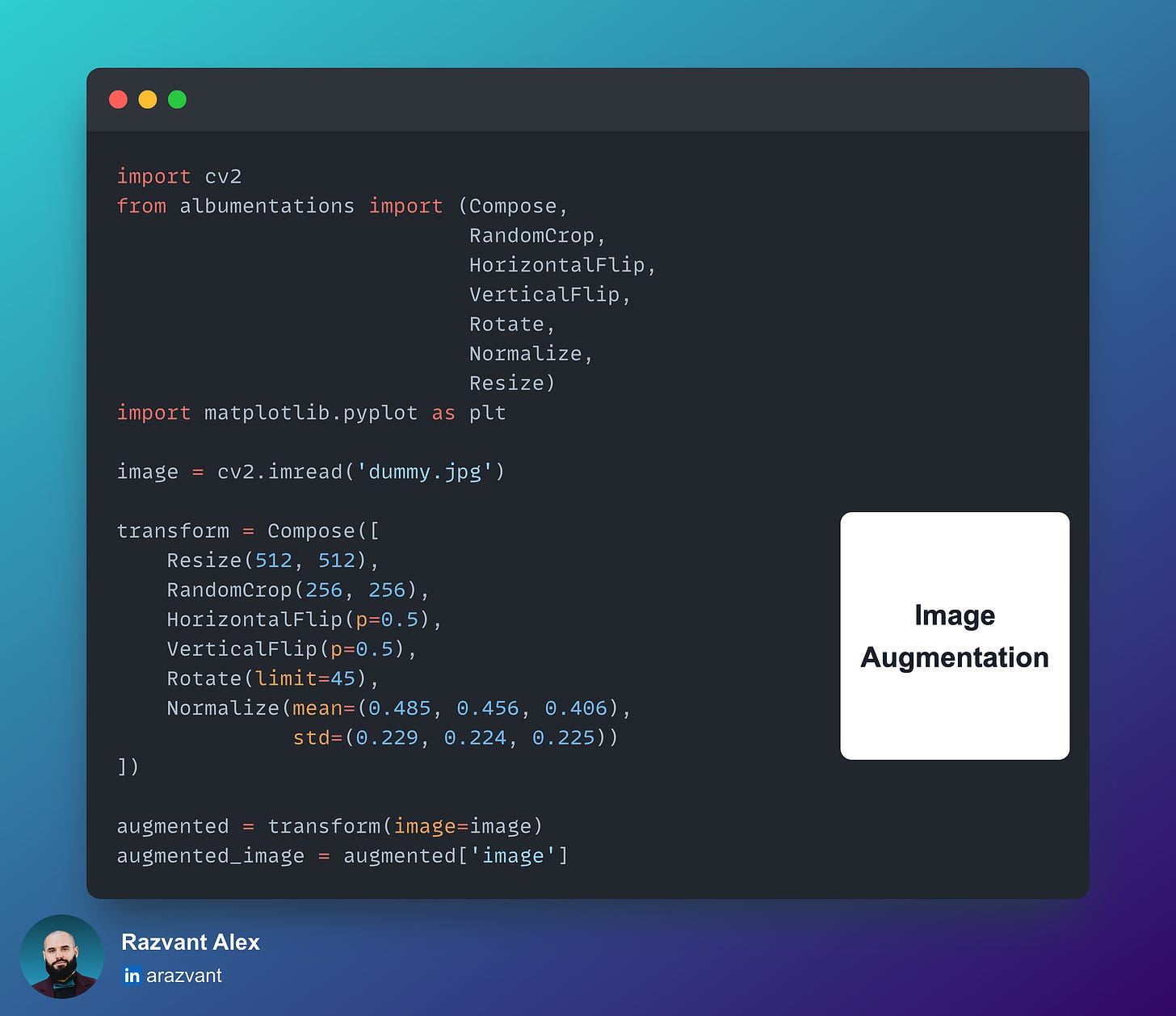

2. Albumentations

Fast and efficient library widely used for dataset augmentation in vision tasks. Its augmentations run on the CPU and are built on top of NumPy and OpenCV.

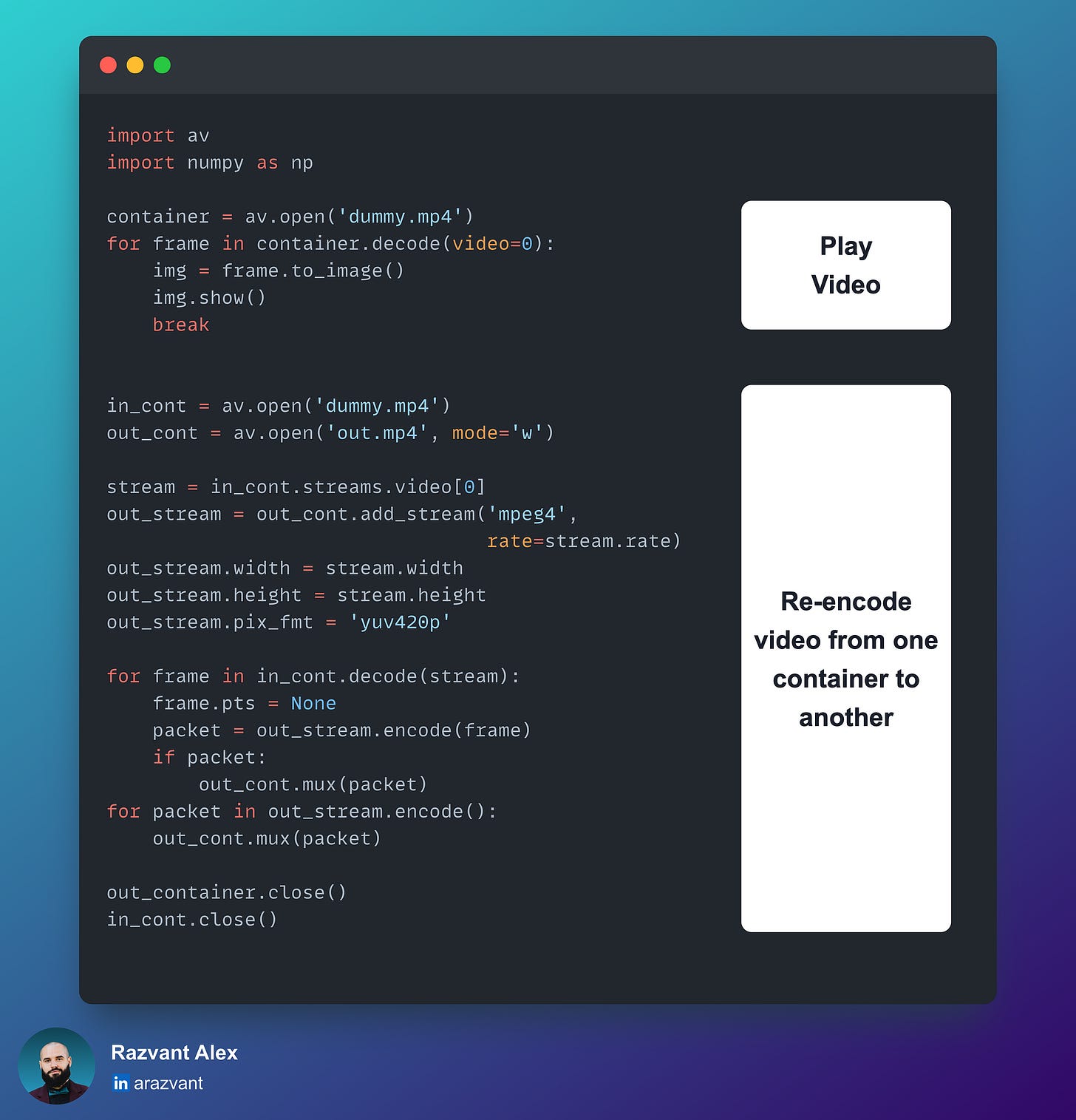

3. PyAV

Provides Python bindings for the FFmpeg libraries. PyAV is recommended when more detailed control over the raw image frames or audio packets is required.

Here, frames are typically decoded in YUV420p format, with a full-resolution Y (luma) plane and subsampled U and V (chroma) planes that store the color information. This takes 12 bits per pixel instead of the 24 needed for RGB.
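A quick calculation shows why YUV420p is lighter than RGB: the two chroma planes are stored at half the width and half the height of the luma plane. This sketch ignores any row padding a decoder may add, and the function names are illustrative.

```python
def rgb24_frame_bytes(width, height):
    """Three full-resolution 8-bit channels per pixel."""
    return width * height * 3


def yuv420p_frame_bytes(width, height):
    """One full-resolution luma plane plus two quarter-size chroma planes."""
    luma = width * height
    chroma_plane = ((width + 1) // 2) * ((height + 1) // 2)
    return luma + 2 * chroma_plane


print(rgb24_frame_bytes(1920, 1080))    # 6220800
print(yuv420p_frame_bytes(1920, 1080))  # 3110400
```

For a 1920x1080 frame, YUV420p needs exactly half the memory of packed RGB.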

3. Video Streaming Methods

For the scope of this article, let’s focus on a few video streaming methods that one could implement using Python, to solve the problem of streaming frames in real-time, from an API to a Client App.

We’ll cover three methods: HTTP, WebSockets, and WebRTC.

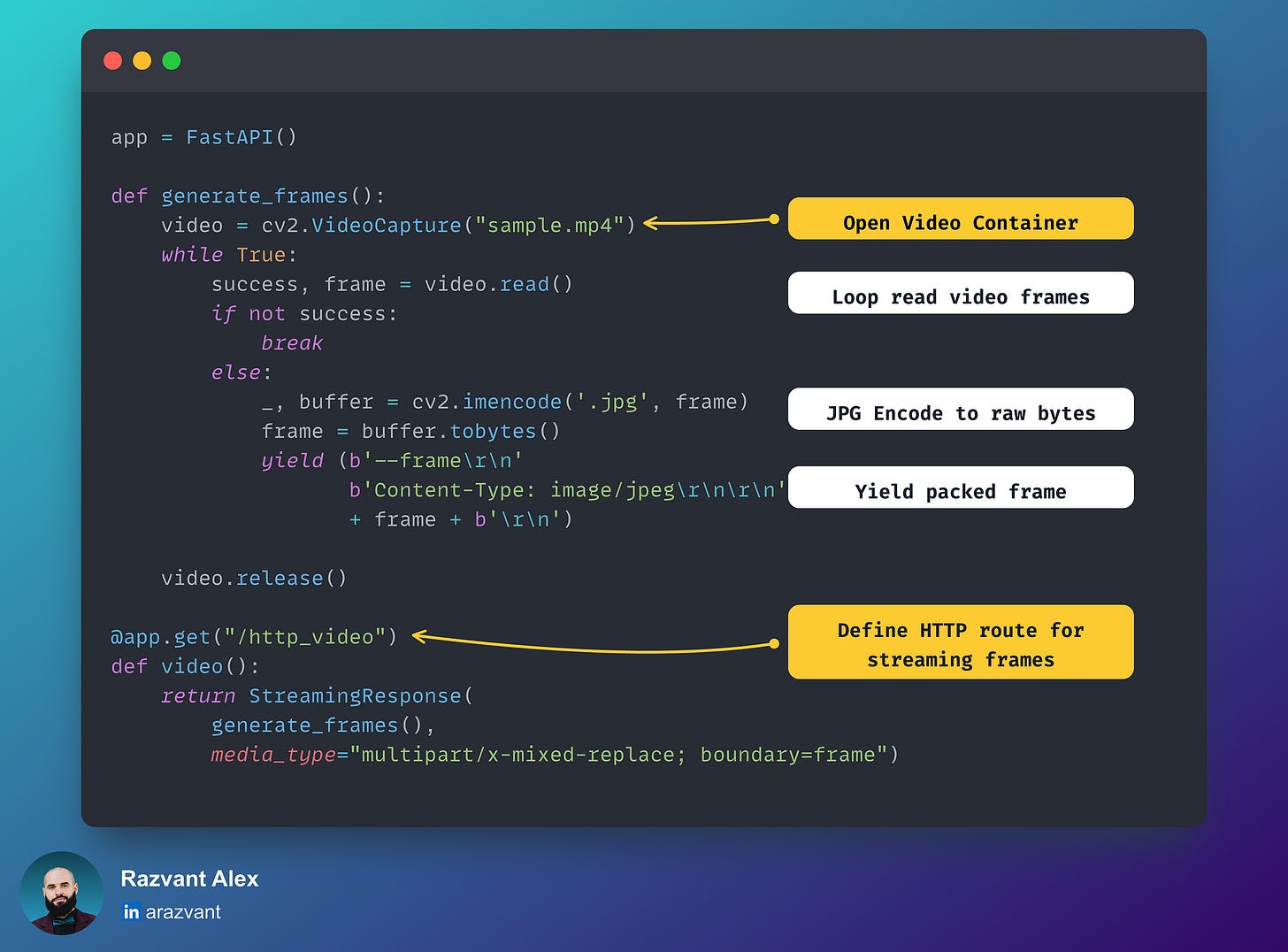

3.1 Streaming through HTTP

Streaming over HTTP can work for small use cases, but once the application scales and has to support many devices or streams, latency, the overhead added by HTTP headers, and bandwidth start to pose challenges.
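One common way to push frames over plain HTTP is a `multipart/x-mixed-replace` response (often called MJPEG), where each JPEG frame is sent as its own part with its own headers. The sketch below is an assumption about how such a stream can be framed, not necessarily the approach used in the repository. The boundary name and the fake frames are placeholders.

```python
BOUNDARY = "frame"  # hypothetical boundary name


def mjpeg_part(jpeg_bytes, boundary=BOUNDARY):
    """Wrap one encoded JPEG frame as a single multipart chunk."""
    header = (
        f"--{boundary}\r\n"
        "Content-Type: image/jpeg\r\n"
        f"Content-Length: {len(jpeg_bytes)}\r\n\r\n"
    ).encode("ascii")
    return header + jpeg_bytes + b"\r\n"


def mjpeg_stream(frames, boundary=BOUNDARY):
    """Yield one multipart chunk per frame, in order."""
    for jpeg_bytes in frames:
        yield mjpeg_part(jpeg_bytes, boundary)


# Stand-ins for real JPEG-encoded frames
fake_frames = [b"\xff\xd8frame-1\xff\xd9", b"\xff\xd8frame-2\xff\xd9"]
for chunk in mjpeg_stream(fake_frames):
    overhead = len(chunk) - len(fake_frames[0])
    print(f"chunk of {len(chunk)} bytes, {overhead} bytes of per-frame overhead")
```

In a FastAPI app, a generator like this could be returned through `StreamingResponse` with `media_type="multipart/x-mixed-replace; boundary=frame"`. The per-part headers are repeated for every frame, which is part of the overhead mentioned above.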

FastAPI Endpoint

React Web Endpoint

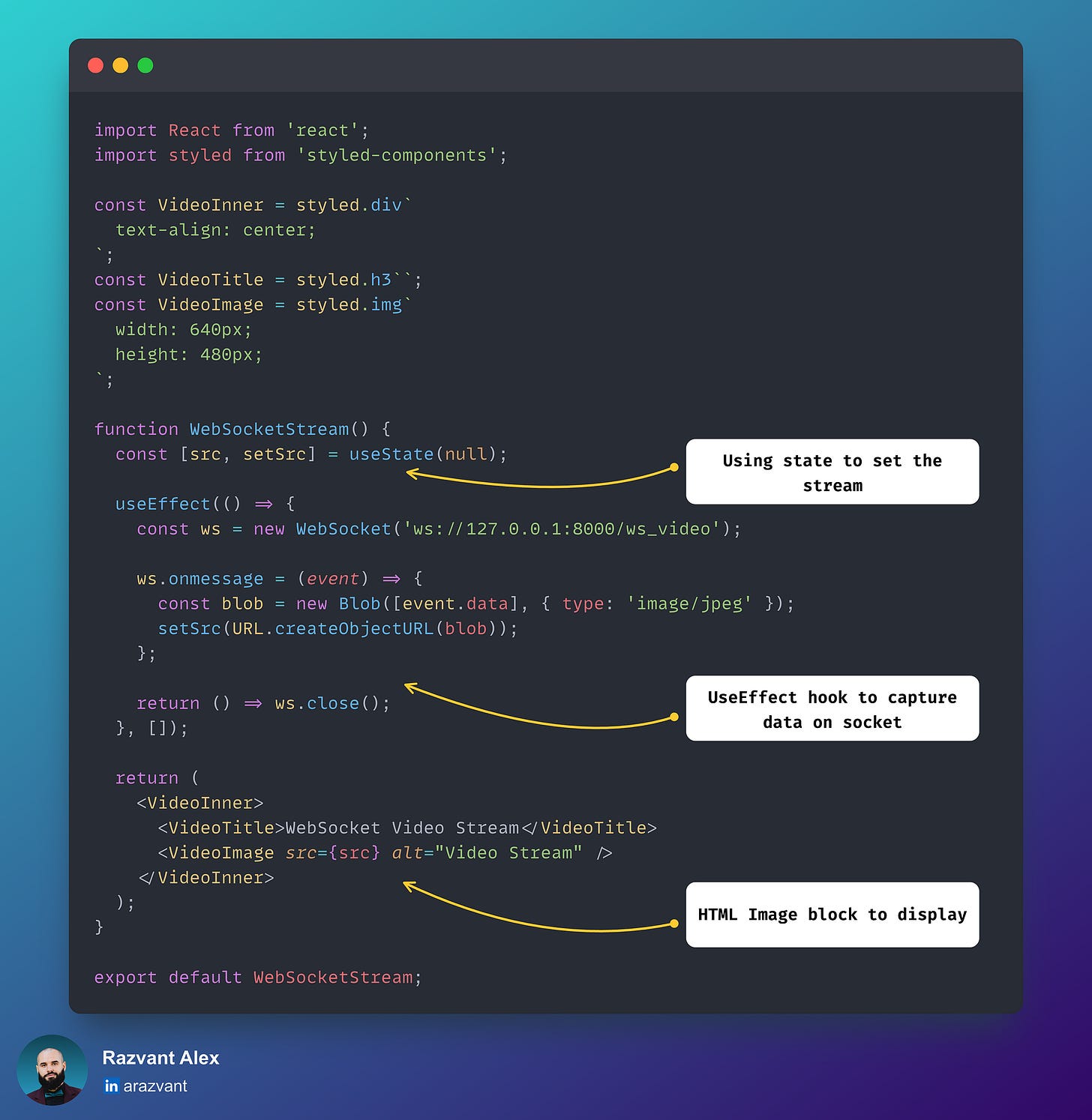

3.2 Streaming through WebSockets

WebSockets are more efficient than HTTP for this purpose: after a single handshake the connection stays open in both directions, which allows lower latency, real-time interaction, and a more compact way to send data.
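Part of the efficiency comes from WebSockets supporting binary messages, so frames do not need to be base64-encoded into text. The sketch below compares the two using a made-up message layout (a 16-byte header followed by the payload). The layout and function names are hypothetical, not a standard.

```python
import base64
import struct

# Hypothetical header: frame id (uint32), timestamp (float64), payload length (uint32)
HEADER = struct.Struct("!IdI")


def pack_frame(frame_id, timestamp, payload):
    """Prefix the encoded frame with a fixed-size binary header."""
    return HEADER.pack(frame_id, timestamp, len(payload)) + payload


def unpack_frame(message):
    """Recover the header fields and the payload from a binary message."""
    frame_id, timestamp, length = HEADER.unpack_from(message)
    payload = message[HEADER.size:HEADER.size + length]
    return frame_id, timestamp, payload


payload = bytes(30_000)  # stand-in for a 30 kB JPEG frame
binary_message = pack_frame(1, 0.0, payload)
text_message = base64.b64encode(payload)

print(len(binary_message))  # 30016
print(len(text_message))    # 40000
```

With FastAPI, a binary message like this could be sent with the WebSocket object's `send_bytes` method, while the base64 variant costs about a third more bandwidth per frame.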

FastAPI Endpoint

React Web Endpoint

3.3 Streaming through WebRTC

WebRTC (Web Real-Time Communication) is a technology standard that enables real-time communication over P2P (peer-to-peer) connections without the need for complex server-side implementations. The main components are:

Data channels: Enables the arbitrary exchange of data between different peers, be it browser-to-browser or API-to-client.

Encryption: All communication, including audio and video, is encrypted.

SDP (Session Description Protocol): During the WebRTC handshake, both peers exchange SDP offers and answers. In short, SDP describes the media capabilities of each peer, so that both sides can agree on the parameters of the session.

Signaling: The mechanism through which the offer/answer exchange takes place.
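To make the offer/answer exchange more tangible, the sketch below wraps an SDP description in a JSON signaling message and lists the media sections and codecs it advertises. The SDP is a hand-written, heavily truncated example (a real offer also carries ICE and fingerprint attributes), and `summarize_sdp` is a hypothetical helper.

```python
import json

# Hand-written, truncated SDP for illustration only
EXAMPLE_SDP = "\r\n".join([
    "v=0",
    "o=- 0 0 IN IP4 127.0.0.1",
    "s=-",
    "t=0 0",
    "m=video 9 UDP/TLS/RTP/SAVPF 96",
    "a=rtpmap:96 H264/90000",
    "m=application 9 UDP/DTLS/SCTP webrtc-datachannel",
]) + "\r\n"


def summarize_sdp(sdp):
    """List each media section (m= line) with the codecs named in a=rtpmap lines."""
    media = []
    for line in sdp.splitlines():
        if line.startswith("m="):
            media.append({"kind": line[2:].split()[0], "codecs": []})
        elif line.startswith("a=rtpmap:") and media:
            codec = line.split(" ", 1)[1].split("/")[0]
            media[-1]["codecs"].append(codec)
    return media


# The offering peer sends this over the signaling channel (for example, an HTTP POST)
offer_message = json.dumps({"type": "offer", "sdp": EXAMPLE_SDP})

# The answering peer parses it and inspects what is being offered
received = json.loads(offer_message)
print(received["type"], summarize_sdp(received["sdp"]))
```

The answering peer would reply with a message of the same shape, with `"type": "answer"` and its own SDP.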

FastAPI Endpoint

React Web Endpoint

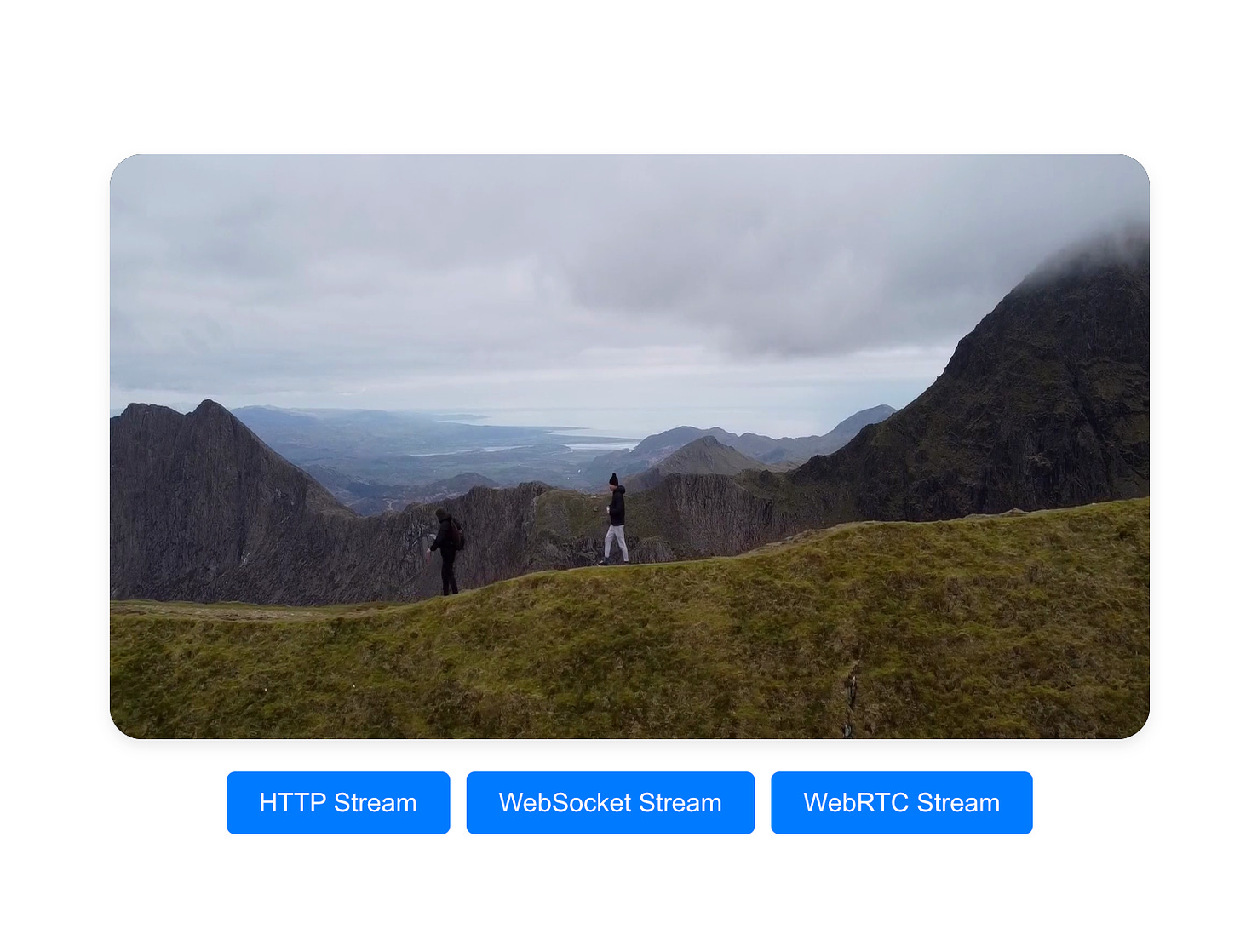

Now that we’ve gone through the streaming methods, let’s test them live. Once you’ve cloned the repository, run the following:

# To start the FastAPI

make run_api

# To start the React Web App

make run_ui

Once you’ve started both the FastAPI backend and the React web frontend, head over to localhost:3000 in your browser and check the results.

4. Conclusion - End of Part I

In this article, we covered the structure of a video format and the key components you need to grasp to understand how video works.

We’ve also gone over a few widely known libraries that make it easy to get started with video/image data, and ended with a walkthrough of three common video streaming methods: HTTP, WebSockets, and WebRTC.

In Part II, in the same manner, we’ll extend our little project into a system where we’ll detach Ingestion from Inference, deploy our model using Triton, and view results on our React App - in real-time.

Stay tuned!