Build a semantic news search engine with 0 delay

How to build a real-time news search engine using Kafka, vector DBs, RAG and streaming engines.

This week’s topics:

The architecture of the semantic news search engine

How to implement the RAG streaming pipeline

The architecture of the semantic news search engine

Let’s understand how to design a semantic real-time news search engine using RAG, serverless, Kafka, vector DBs, Streamlit and a streaming engine.

More specifically, what do we want to build?

A real-time news search engine that:

- leverages semantic search to find relevant news

- it’s always up to date with the latest news with minimal delay

The world of news is in continuous motion. Thus, implementing a batch pipeline that populates our database every hour or day will quickly introduce a delay between the reality and the state of our database.

The most obvious solution is to schedule the batch pipeline to run more often, such as every 10 minutes. But that might result in redundant costs.

Another solution gaining traction is based on streaming technologies, which process everything on the fly as soon as it’s available.

Let’s examine what we need to implement a streaming solution and understand how to build it.

What is in our toolbelt?

- serverless Kafka & vector DB clusters hosted on Upstash: hosting them yourself is complex, and Upstash makes it straightforward through their serverless approach

Hosting your own Kafka cluster is hard... really hard!

→ Upstash is a great choice for serverless Kafka. Another popular option is Confluent.

- a Bytewax streaming engine: built in Rust for speed & with a clean Python interface

- pydantic: data modeling, validation, and serialization

- Streamlit: UI in Python

Let’s understand the architecture

On the producer side:

1. We will have multiple producers that aggregate news from various sources and ingest them into a Kafka topic.

2. We use Pydantic to standardize, validate, and serialize the collected news. Thus, all the news documents will have the same interface.

This approach allows us to add multiple news types from different producers into the same Kafka topic without breaking the system.

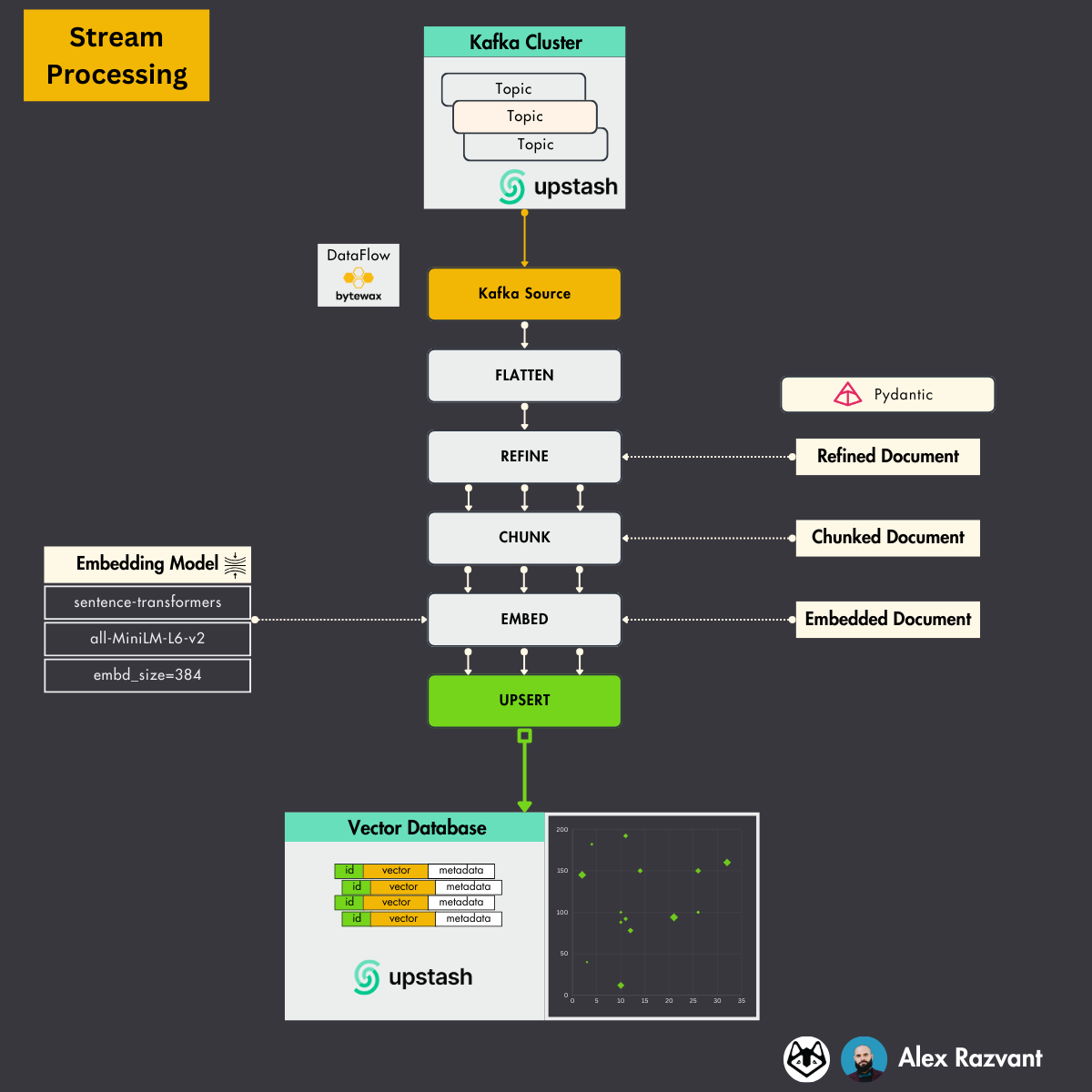

On the streaming pipeline side:

3. Using Bytewax, we build a streaming microservice in Python that reads real-time messages from the news Kafka topic and starts processing them.

4. Every message is deserialized to a Pydantic Model, cleaned, chunked, embedded and pushed to the Upstash vector DB.

On the UI client side:

5. We build a dashboard in Streamlit that takes queries as inputs and outputs the most similar news.

6. Under the hood, we have a retrieval module that cleans and embeds the user query, used to call the Upstash vector DB, which returns the most similar news

Note that the vector DB will constantly be updated by the Bytewax streaming pipeline. Thus, results are always returned based on the latest news.

How do we scale the system?

- the streaming pipeline should be shipped to a K8s cluster that can scale horizontally based on demand

- you can move the cleaning, embedding, and retrieval logic from the Streamlit UI to a FastAPI backend

How to implement the RAG streaming pipeline

Most of the engineering for implementing the real-time news search project is done on the RAG streaming pipeline.

The flow of the streaming pipeline is standard for ingesting data into a vector DB for RAG:

Kafka → clean, chunk, embed [RAG] → vector DB

The trickiest part is using a streaming engine like Bytewax to perform all the steps in real-time. This means that the streaming pipeline will process the message in the Kafka news topic as soon as it is available.

No cron jobs or polling. It's just on-demand processing.

Remember, what is our end goal?

To build a real-time semantic news search engine using vector DBs.

Thus, after gathering news from multiple sources and aggregating everything into a Kafka topic, we must process them using our streaming pipeline.

We must embed and index the news into a vector DB at extremely low latencies to build a real-time semantic search engine on top of this news.

To do that, we will use a Bytewax streaming engine & Kafka, as follows:

- collect the news documents from a Kafka topic

- normalize the news to a standard format that follows the same interface and can be processed uniformly

- map the document to a Pydantic model to validate it’s signature

- clean, chunk, and embed the news document for RAG

- load the embedded chunks to the Upstash vector DB

On the implementation side, we will first define the Bytewax streaming pipeline that performs all the steps from above:

This is how we read from a Kafka topic using Bytewax’s out-of-the-box connectors:

To output embedded news documents to the Upstash vector DB, we have to define a custom output connector by inheriting from Bytewax’s DynamicSink class:

When working with streaming technologies, you split your data into multiple partitions that can be processed independently to scale your application. For each partition, you have one or more workers. By avoiding creating dependencies between data points (aka partitioning), you can efficiently parallelize your work.

Thus, within the build() method, we instantiate an object that defines the actual job that should be done for each partition.

Hence, within the UpstashVectorSinkPartition class, we have the actual logic that knows how to process each partition and push data points to the vector DB:

Are you curious to see the whole code and try it yourself?

→ Such as the news producers’ code, the Streamlit UI or the whole RAG code.

Then, consider reading our end-to-end tutorial and learn to build your real-time news search engine. It’s free:

↓↓↓

Images

If not otherwise stated, all images are created by the author.

seem like Kafka is deprecated in Upstash. Any alternative for it?