DML: 4 key ideas you must know to train an LLM successfully

My time series forecasting Python code was a disaster until I started using this package. 4 key ideas you must know to train an LLM successfully.

Hello there, I am Paul Iusztin 👋🏼

Within this newsletter, I will help you decode complex topics about ML & MLOps one week at a time 🔥

This week’s ML & MLOps topics:

My time series forecasting Python code was a disaster until I started using this package

4 key ideas you must know to train an LLM successfully

Extra: My favorite ML & MLOps newsletter

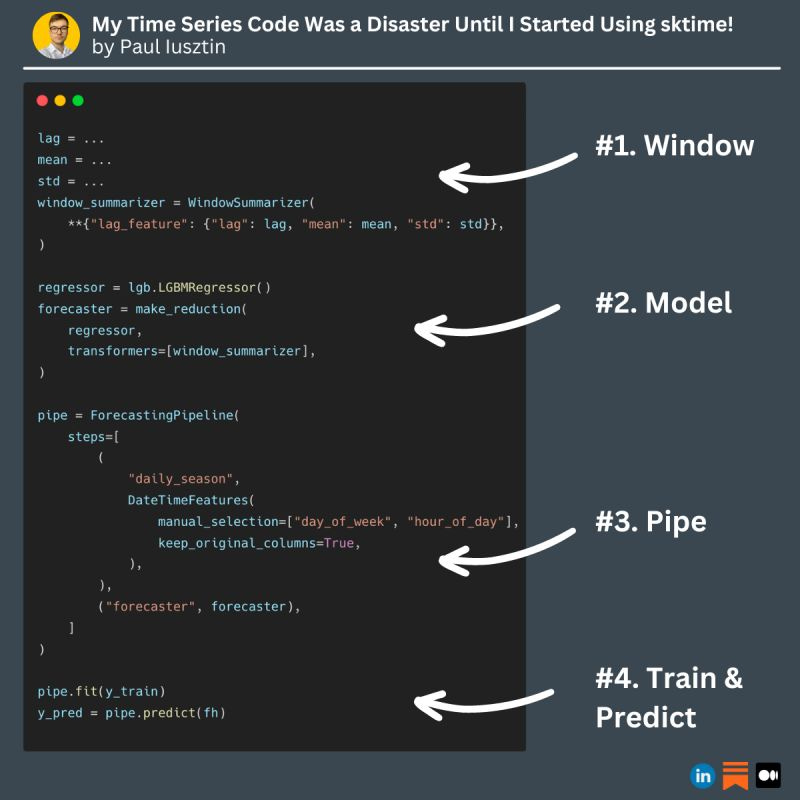

#1. My time series forecasting Python code was a disaster until I started using this package

Does building time series models sound more complicated than modeling standard tabular datasets?

Well... maybe it is... but that is precisely why you need to learn more about 𝘀𝗸𝘁𝗶𝗺𝗲!

When I first built forecasting models, I manually coded the required preprocessing and postprocessing steps. What a newbie I was...

How much easier would my life have been if I had used 𝘀𝗸𝘁𝗶𝗺𝗲 from the beginning?


𝐖𝐡𝐚𝐭 𝐢𝐬 𝐬𝐤𝐭𝐢𝐦𝐞?

𝘀𝗸𝘁𝗶𝗺𝗲 is a Python package that adds time series functionality on top of well-known packages such as statsmodels, Prophet (fbprophet), scikit-learn, pmdarima's AutoARIMA, XGBoost, etc.

As a result, your favorite packages suddenly support time series features such as:

- easily swap between different models (e.g., xgboost, lightgbm, decision trees, etc.)

- out-of-the-box windowing transformations & aggregations

- functionality for multivariate, panel, and hierarchical learning

- cross-validation adapted to time series

- cool visualizations

and more...
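To make two of these features concrete, here is a plain-Python sketch of what sktime automates for you: a windowing (reduction) transformation that turns a series into a tabular dataset any regressor can fit, and expanding-window splits for time series cross-validation. It does not use sktime itself: the function names `make_windows` and `expanding_window_splits` are hypothetical. sktime exposes these ideas through its own components (e.g., `make_reduction` and `ExpandingWindowSplitter`), whose exact signatures you should check in its documentation.

```python
from typing import List, Sequence, Tuple


def make_windows(series: Sequence[float], window_length: int) -> Tuple[List[List[float]], List[float]]:
    """Reduction: turn a univariate series into a tabular (X, y) dataset.

    Each row of X holds `window_length` consecutive past values, and the matching
    y entry is the value that comes right after them. Any tabular regressor
    (linear model, decision tree, XGBoost, ...) can then be trained on X, y,
    which is what makes swapping models easy.
    """
    X, y = [], []
    for end in range(window_length, len(series)):
        X.append(list(series[end - window_length:end]))
        y.append(series[end])
    return X, y


def expanding_window_splits(
    n_samples: int, initial_window: int, horizon: int, step: int = 1
) -> List[Tuple[List[int], List[int]]]:
    """Time series cross-validation with an expanding training window.

    Unlike shuffled k-fold CV, every test fold lies strictly after its training
    data, so no future values leak into training.
    """
    splits = []
    train_end = initial_window
    while train_end + horizon <= n_samples:
        train_idx = list(range(0, train_end))
        test_idx = list(range(train_end, train_end + horizon))
        splits.append((train_idx, test_idx))
        train_end += step  # grow the training window and slide the test fold forward
    return splits


X, y = make_windows([10, 12, 13, 15, 16], window_length=2)
print(X)  # [[10, 12], [12, 13], [13, 15]]
print(y)  # [13, 15, 16]
for train_idx, test_idx in expanding_window_splits(n_samples=6, initial_window=3, horizon=1):
    print(train_idx, test_idx)
```

The windowing step is what lets one forecasting pipeline accept any scikit-learn-style regressor, while the split logic is what keeps the evaluation honest for time-ordered data.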

↳ If you want to see 𝘀𝗸𝘁𝗶𝗺𝗲 in action, check out my article: 🔗 A Guide to Building Effective Training Pipelines for Maximum Results

#2. 4 key ideas you must know to train an LLM successfully

Here are the 4 key ideas you must know to train an LLM successfully:

📖 𝗛𝗼𝘄 𝗶𝘀 𝘁𝗵𝗲 𝗺𝗼𝗱𝗲𝗹 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴?

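LLMs are still trained with supervised learning. More precisely, it is self-supervised: the labels come from the text itself, so nobody has to annotate them by hand.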

A standard NLP task is to build a classifier.

For example, the input is a sequence of tokens, and the output is one of a set of classes (e.g., negative or positive).

When training an LLM for text generation, the input is a sequence of tokens, and the task is to predict the next token:

- Input: JavaScript is all you [...]

- Output: need

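At generation time, each predicted token is appended to the input and fed back into the model to predict the following one. This is known as an autoregressive process.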
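The idea can be sketched in a few lines of Python. This is a toy illustration, not how LLMs are implemented: a bigram counter (hypothetical helper `train_bigram`) stands in for the neural network and only looks at the last token, whereas an LLM conditions on the whole sequence; tokens are whole words for readability. It shows two things: the training labels come for free by shifting the sequence by one token, and generation is a loop that appends each prediction to the input (greedy decoding here, as an assumption; real systems often sample).

```python
from collections import Counter, defaultdict
from typing import Dict, List, Sequence, Tuple


def next_token_examples(tokens: Sequence[str]) -> List[Tuple[List[str], str]]:
    """Build supervised (input, label) pairs from raw text: the label is just the next token."""
    return [(list(tokens[:i]), tokens[i]) for i in range(1, len(tokens))]


def train_bigram(corpus: List[List[str]]) -> Dict[str, Counter]:
    """Toy stand-in for an LLM: count which token follows each token."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for tokens in corpus:
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def generate(counts: Dict[str, Counter], prompt: List[str], max_new_tokens: int) -> List[str]:
    """Autoregressive loop: predict one token, append it, and feed the longer sequence back in."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        followers = counts.get(tokens[-1])
        if not followers:
            break  # the toy model has never seen anything follow this token
        tokens.append(followers.most_common(1)[0][0])  # greedy: pick the most frequent follower
    return tokens


sentence = ["JavaScript", "is", "all", "you", "need"]
for context, label in next_token_examples(sentence):
    print(context, "->", label)

model = train_bigram([sentence])
print(generate(model, ["JavaScript", "is", "all", "you"], max_new_tokens=1))
# ['JavaScript', 'is', 'all', 'you', 'need']
```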

⚔️ 𝘄𝗼𝗿𝗱𝘀 != 𝘁𝗼𝗸𝗲𝗻𝘀

Tokens are created based on how frequently sequences of characters appear in the text the tokenizer was built from.

For example:

- In the sentence "Learning new things is fun!", every word is a separate token because each one is used frequently.

- In the sentence "Prompting is a ...", the word 'prompting' is split into 3 tokens: 'prom', 'pt', and 'ing'.

This matters because an LLM's input limit (its context window) is measured in tokens, not words, and that limit differs from one LLM to another.
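To see how frequency drives tokenization, here is a minimal sketch of byte-pair encoding (BPE), one widely used frequency-based scheme, applied to the characters of whitespace-separated words. It is a simplification under stated assumptions: real tokenizers operate on bytes, handle whitespace and punctuation, and learn tens of thousands of merges from huge corpora. The helper names, the tiny corpus, and the `max_tokens` limit are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Sequence, Tuple

Pair = Tuple[str, str]


def merge_pair(symbols: Tuple[str, ...], pair: Pair) -> Tuple[str, ...]:
    """Replace every adjacent occurrence of `pair` with one merged symbol."""
    merged, i = [], 0
    while i < len(symbols):
        if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
            merged.append(symbols[i] + symbols[i + 1])
            i += 2
        else:
            merged.append(symbols[i])
            i += 1
    return tuple(merged)


def learn_bpe(words: Sequence[str], num_merges: int) -> List[Pair]:
    """Learn merges: repeatedly fuse the most frequent adjacent symbol pair."""
    vocab: Dict[Tuple[str, ...], int] = Counter(tuple(w) for w in words)
    merges: List[Pair] = []
    for _ in range(num_merges):
        pair_counts: Counter = Counter()
        for symbols, freq in vocab.items():
            for a, b in zip(symbols, symbols[1:]):
                pair_counts[(a, b)] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        vocab = {merge_pair(symbols, best): freq for symbols, freq in vocab.items()}
    return merges


def tokenize(text: str, merges: List[Pair]) -> List[str]:
    """Split on whitespace (a simplification), then apply the learned merges in order."""
    tokens: List[str] = []
    for word in text.lower().split():
        symbols = tuple(word)
        for pair in merges:
            symbols = merge_pair(symbols, pair)
        tokens.extend(symbols)
    return tokens


def fits_context(text: str, merges: List[Pair], max_tokens: int) -> bool:
    """Input limits are counted in tokens, not words."""
    return len(tokenize(text, merges)) <= max_tokens


corpus = ["fun"] * 10 + ["learning"] * 5 + ["prompting"]
merges = learn_bpe(corpus, num_merges=2)
print(tokenize("fun", merges))        # ['fun']: a frequent word becomes one token
print(tokenize("prompting", merges))  # a rare word stays split into several pieces
```

The same text can therefore cost very different numbers of tokens depending on the tokenizer's learned vocabulary, which is why limits are checked on token counts.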

🧠 𝗧𝘆𝗽𝗲𝘀 𝗼𝗳 𝗟𝗟𝗠𝘀

There are 3 primary types of LLMs:

- base LLM

- instruction tuned LLM

- RLHF tuned LLM

𝘚𝘵𝘦𝘱𝘴 𝘵𝘰 𝘨𝘦𝘵 𝘧𝘳𝘰𝘮 𝘢 𝘣𝘢𝘴𝘦 𝘵𝘰 𝘢𝘯 𝘪𝘯𝘴𝘵𝘳𝘶𝘤𝘵𝘪𝘰𝘯-𝘵𝘶𝘯𝘦𝘥 𝘓𝘓𝘔:

1. Train the base LLM on a lot of data (trillions of tokens): this takes months on massive GPU clusters.

2. Fine-tune the base LLM on a Q&A dataset (millions of tokens): this takes hours or days on modest computational resources.

3. [Optional] Use RLHF (reinforcement learning from human feedback) to fine-tune the LLM further on human ratings of different LLM outputs, based on criteria such as whether the answer is helpful, honest, and harmless. This increases the probability of generating highly rated outputs.
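Step 3 depends on turning human ratings into a training signal. A common recipe (used, for example, in InstructGPT) first trains a reward model on pairs of answers where humans preferred one over the other, then optimizes the LLM against that reward model with reinforcement learning. The sketch below computes only the pairwise loss typically used for the reward model, -log(sigmoid(r_chosen - r_rejected)), from two scalar rewards; the reward model itself and the RL step are out of scope, and the function name is hypothetical.

```python
import math


def pairwise_reward_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry style loss: -log(sigmoid(reward_chosen - reward_rejected)).

    It is small when the reward model scores the human-preferred answer higher
    and large when it prefers the rejected answer.
    """
    diff = reward_chosen - reward_rejected
    # -log(sigmoid(d)) == log(1 + exp(-d)); branch so exp() never overflows
    if diff >= 0:
        return math.log1p(math.exp(-diff))
    return -diff + math.log1p(math.exp(diff))


print(pairwise_reward_loss(2.0, 0.0))  # low loss: preferred answer scored higher
print(pairwise_reward_loss(0.0, 2.0))  # high loss: preferred answer scored lower
```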

🏗️ 𝗛𝗼𝘄 𝘁𝗼 𝗯𝘂𝗶𝗹𝗱 𝘁𝗵𝗲 𝗽𝗿𝗼𝗺𝗽𝘁 𝘁𝗼 𝗳𝗶𝗻𝗲-𝘁𝘂𝗻𝗲 𝘁𝗵𝗲 𝗟𝗟𝗠 𝗼𝗻 𝗮 𝗤&𝗔 𝗱𝗮𝘁𝗮𝘀𝗲𝘁

The most common prompt template consists of 4 parts:

1. A system message that sets the general tone & behavior.

2. (Optional) The context, which gives the model extra information to help it answer.

3. The user's question.

4. The answer to the question.
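Here is a minimal sketch of how the 4 parts could be assembled into one training example. The `### System:`-style section markers and the function name are hypothetical: each model family (and each fine-tuning library) defines its own template, so match the one your base model expects.

```python
from typing import Optional, Tuple


def build_qa_example(
    system: str, question: str, answer: str, context: Optional[str] = None
) -> Tuple[str, str]:
    """Return (prompt, answer) for one Q&A fine-tuning example.

    The prompt holds parts 1-3; the answer (part 4) is kept separate because it
    is what the model learns to generate.
    """
    sections = [f"### System:\n{system}"]
    if context:  # part 2 is optional
        sections.append(f"### Context:\n{context}")
    sections.append(f"### Question:\n{question}")
    prompt = "\n\n".join(sections) + "\n\n### Answer:\n"
    return prompt, answer


prompt, answer = build_qa_example(
    system="You are a helpful ML assistant. Answer concisely.",
    question="What does RLHF stand for?",
    answer="Reinforcement learning from human feedback.",
)
print(prompt + answer)
```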

Note that you need to know the answer to the question during training. You can intuitively see it as your label.
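One common way to make "the answer is your label" concrete (a widespread convention, not necessarily the author's setup) is to concatenate the tokenized prompt and answer as the model input and mask the prompt positions out of the labels, so the loss is computed only on the answer tokens. The sketch uses made-up token IDs and the value -100, which is the default `ignore_index` of PyTorch's `CrossEntropyLoss`; the one-position shift for next-token prediction is assumed to happen later, inside the model or loss. Some setups instead compute the loss on the full sequence.

```python
from typing import List, Tuple

# Targets equal to this value are skipped by PyTorch's CrossEntropyLoss (its default ignore_index).
IGNORE_INDEX = -100


def build_inputs_and_labels(prompt_ids: List[int], answer_ids: List[int]) -> Tuple[List[int], List[int]]:
    """Concatenate prompt + answer as input; only answer positions keep a real label."""
    input_ids = prompt_ids + answer_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + answer_ids
    return input_ids, labels


# Hypothetical token IDs for a tokenized prompt (system + question) and its answer.
input_ids, labels = build_inputs_and_labels([101, 7, 42, 9], [55, 56, 2])
print(input_ids)  # [101, 7, 42, 9, 55, 56, 2]
print(labels)     # [-100, -100, -100, -100, 55, 56, 2]
```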

Extra: My favorite ML & MLOps newsletter

Do you want to learn ML & MLOps from real-world experience?

Then I suggest you join Pau Labarta Bajo's Real-World Machine Learning weekly newsletter, along with 8k+ other ML developers.

Pau Labarta Bajo inspired me to start my weekly newsletter and is a great teacher who makes learning seamless ✌

That’s it for today 👾

See you next Thursday at 9:00 a.m. CET.

Have a fantastic weekend!

Paul

Whenever you’re ready, here is how I can help you:

The Full Stack 7-Steps MLOps Framework: a 7-lesson FREE course that will walk you step-by-step through how to design, implement, train, deploy, and monitor an ML batch system using MLOps good practices. It contains the source code + 2.5 hours of reading & video materials on Medium.

Machine Learning & MLOps Blog: in-depth topics about designing and productionizing ML systems using MLOps.

Machine Learning & MLOps Hub: a place where all my work is aggregated (courses, articles, webinars, podcasts, etc.).
