DML: How do you generate a Q&A dataset in <30 minutes to fine-tune your LLMs?

Lesson 7 | The Hands-on LLMs Series

Hello there, I am Paul Iusztin 👋🏼

In this newsletter, I will help you decode complex topics about ML & MLOps one week at a time 🔥

Table of Contents:

Real-time feature pipeline video lesson

How do you generate a synthetic domain-specific Q&A dataset in <30 minutes to fine-tune your open-source LLM?

My personal list of filtered resources about LLMs & vector DBs

Previous Lessons:

Lesson 4: How to implement a streaming pipeline to populate a vector DB for real-time RAG?

Lesson 6: What do you need to fine-tune an open-source LLM to create your financial advisor?

↳🔗 Check out the Hands-on LLMs course and support it with a ⭐.

#1. Real-time feature pipeline video lesson

I know we are currently talking about the training pipeline and Q&A dataset generation, but mixing topics is sometimes healthy: it helps you remember what you learned and make new connections.

…or maybe that is only an excuse to share the video lesson about the feature pipeline that wasn’t ready when I started this series.

It will teach you how to 𝗶𝗻𝗴𝗲𝘀𝘁 𝗳𝗶𝗻𝗮𝗻𝗰𝗶𝗮𝗹 𝗻𝗲𝘄𝘀 in 𝗿𝗲𝗮𝗹-𝘁𝗶𝗺𝗲 from Alpaca, 𝗰𝗹𝗲𝗮𝗻 & 𝗲𝗺𝗯𝗲𝗱 the 𝗱𝗼𝗰𝘂𝗺𝗲𝗻𝘁𝘀, and 𝗹𝗼𝗮𝗱 them into a 𝘃𝗲𝗰𝘁𝗼𝗿 𝗗𝗕.

𝗛𝗲𝗿𝗲 𝗶𝘀 𝗮𝗻 𝗼𝘃𝗲𝗿𝘃𝗶𝗲𝘄 𝗼𝗳 𝘁𝗵𝗲 𝘃𝗶𝗱𝗲𝗼 ↓

1. Step-by-step instructions on how to set up the streaming pipeline code & a Qdrant vector DB serverless cluster

2. Why we used Bytewax to build the streaming pipeline

3. How we used Bytewax to ingest financial news in real time through a WebSocket, then clean, chunk, and embed the documents and load them into the Qdrant vector DB

4. How we adapted the Bytewax streaming pipeline to also work in batch mode to populate the vector DB with historical data

5. How to run the code

6. How to deploy the code to AWS

Here it is ↓ Enjoy 👀
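The video walks through the real Bytewax and Qdrant code. As a rough, framework-free companion, here is a minimal sketch of the per-document logic such a pipeline applies: clean, chunk, embed, load. It is not Bytewax and not the Qdrant client. `VECTOR_DB` is a hypothetical in-memory dict standing in for the Qdrant collection, `embed` is a toy hashing function standing in for a real embedding model, and the chunk size and overlap values are arbitrary assumptions.

```python
import hashlib
import math
import re
import unicodedata

# Hypothetical in-memory stand-in for the Qdrant collection (NOT the Qdrant client API).
VECTOR_DB: dict[str, dict] = {}


def clean_text(text: str) -> str:
    """Normalize unicode, strip HTML tags, and collapse whitespace."""
    text = unicodedata.normalize("NFKC", text)
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def chunk_text(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into overlapping windows of at most `max_words` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be in [0, max_words)")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):  # last window reached the end
            break
    return chunks


def embed(text: str, dim: int = 64) -> list[float]:
    """Toy hashing embedding, a placeholder for a real sentence-embedding model."""
    vec = [0.0] * dim
    for token in text.lower().split():
        idx = int(hashlib.sha256(token.encode()).hexdigest(), 16) % dim
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]  # unit-length vector


def ingest(doc_id: str, raw_text: str) -> int:
    """Clean, chunk, embed, and upsert one news document; return the chunk count."""
    chunks = chunk_text(clean_text(raw_text))
    for i, chunk in enumerate(chunks):
        VECTOR_DB[f"{doc_id}-{i}"] = {"vector": embed(chunk), "text": chunk}
    return len(chunks)
```

For example, `ingest("news-1", "<p>Bitcoin rallies after ETF news.</p>")` stores a single cleaned chunk. Because `ingest` knows nothing about where documents come from, the same function could be fed by a live WebSocket stream or by a batch of historical news. That is one way to reuse the logic in both modes, not necessarily how the course does it.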

#2. How do you generate a synthetic domain-specific Q&A dataset in <30 minutes to fine-tune your open-source LLM?

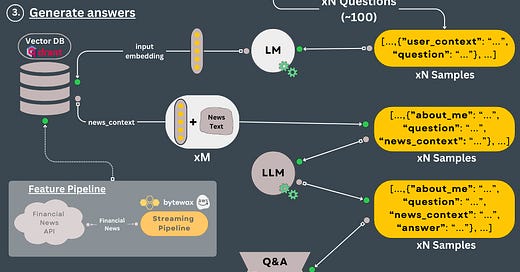

This method is also known as 𝗳𝗶𝗻𝗲𝘁𝘂𝗻𝗶𝗻𝗴 𝘄𝗶𝘁𝗵 𝗱𝗶𝘀𝘁𝗶𝗹𝗹𝗮𝘁𝗶𝗼𝗻. Here are its 3 𝘮𝘢𝘪𝘯 𝘴𝘵𝘦𝘱𝘴 ↓

𝘍𝘰𝘳 𝘦𝘹𝘢𝘮𝘱𝘭𝘦, 𝘭𝘦𝘵'𝘴 𝘨𝘦𝘯𝘦𝘳𝘢𝘵𝘦 𝘢 𝘘&𝘈 𝘥𝘢𝘵𝘢𝘴𝘦𝘵 𝘵𝘰 𝘧𝘪𝘯𝘦-𝘵𝘶𝘯𝘦 𝘢 𝘧𝘪𝘯𝘢𝘯𝘤𝘪𝘢𝘭 𝘢𝘥𝘷𝘪𝘴𝘰𝘳 𝘓𝘓𝘔.

𝗦𝘁𝗲𝗽 𝟭: 𝗠𝗮𝗻𝘂𝗮𝗹𝗹𝘆 𝗴𝗲𝗻𝗲𝗿𝗮𝘁𝗲 𝗮 𝗳𝗲𝘄 𝗶𝗻𝗽𝘂𝘁 𝗲𝘅𝗮𝗺𝗽𝗹𝗲𝘀

Manually write a few input examples (~3) with the following structure:

- 𝘶𝘴𝘦𝘳_𝘤𝘰𝘯𝘵𝘦𝘹𝘵: describe the type of investor (e.g., "I am a 28-year-old marketing professional")

- 𝘲𝘶𝘦𝘴𝘵𝘪𝘰𝘯: describe the user's intention (e.g., "Is Bitcoin a good investment option?")
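To make the Step 1 structure concrete, here is a minimal sketch of the seed examples as Python data. The `InputExample` class and the JSON round-trip are assumptions for illustration. Only the first example comes from the text above; the second one is hypothetical.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class InputExample:
    """One seed example: who is asking and what they want to know."""
    user_context: str  # describes the type of investor
    question: str      # describes the user's intention


# Manually written seeds (~3 in practice). The first comes from the text above;
# the second is a hypothetical example added only to illustrate the format.
SEED_EXAMPLES = [
    InputExample(
        user_context="I am a 28-year-old marketing professional",
        question="Is Bitcoin a good investment option?",
    ),
    InputExample(
        user_context="I am a 60-year-old teacher planning to retire in five years",
        question="Should I move part of my savings into bonds?",
    ),
]

# Serialize the seeds to JSON so the expansion step can load them later.
seeds_json = json.dumps([asdict(ex) for ex in SEED_EXAMPLES], indent=2)

# Round-trip: rebuild the dataclasses from the JSON string.
loaded = [InputExample(**item) for item in json.loads(seeds_json)]
```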

𝗦𝘁𝗲𝗽 𝟮: 𝗘𝘅𝗽𝗮𝗻𝗱 𝘁𝗵𝗲 𝗶𝗻𝗽𝘂𝘁 𝗲𝘅𝗮𝗺𝗽𝗹𝗲𝘀 𝘄𝗶𝘁𝗵 𝘁𝗵𝗲 𝗵𝗲𝗹𝗽 𝗼𝗳 𝗮 𝘁𝗲𝗮𝗰𝗵𝗲𝗿 𝗟𝗟𝗠

Use a powerful LLM as a teacher (e.g., GPT-4, Falcon 180B) to generate N more input examples similar to your seeds.

We generated 100 input examples in our use case, but you can generate more.

The manually written input examples act as the few-shot examples in your prompt.

This guides the LLM to generate domain-specific samples.

𝘛𝘩𝘦 𝘱𝘳𝘰𝘮𝘱𝘵 𝘸𝘪𝘭𝘭 𝘭𝘰𝘰𝘬 𝘭𝘪𝘬𝘦 𝘵𝘩𝘪𝘴:

"""

...

Generate 100 more examples with the following pattern:

# USER CONTEXT 1

...

# QUESTION 1

...

# USER CONTEXT 2

...

"""

𝗦𝘁𝗲𝗽 𝟯: 𝗨𝘀𝗲 𝘁𝗵𝗲 𝘁𝗲𝗮𝗰𝗵𝗲𝗿 𝗟𝗟𝗠 𝘁𝗼 𝗴𝗲𝗻𝗲𝗿𝗮𝘁𝗲 𝗼𝘂𝘁𝗽𝘂𝘁𝘀 𝗳𝗼𝗿 𝗮𝗹𝗹 𝘁𝗵𝗲 𝗶𝗻𝗽𝘂𝘁 𝗲𝘅𝗮𝗺𝗽𝗹𝗲𝘀

Now, you use the same powerful LLM as a teacher, but this time to answer all N input examples.

But first, to introduce more variance, we use RAG to enrich each input example with related financial news context. Only then does the teacher LLM answer the enriched examples.
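Step 3 can be sketched as follows, under loud assumptions. `NEWS` is a hypothetical in-memory list standing in for the Qdrant vector DB. `retrieve_news` ranks by plain word overlap instead of embedding similarity. `teacher` is any callable you write around your teacher LLM's API; no specific client library is assumed. The prompt layout is also illustrative.

```python
from typing import Callable

# Hypothetical in-memory news store; in the course, this role is played by the vector DB.
NEWS = [
    "Bitcoin price swings sharply as ETF approval decision nears.",
    "Central bank keeps interest rates unchanged, bond yields steady.",
    "Tech stocks rally on strong quarterly earnings.",
]


def retrieve_news(query: str, k: int = 2) -> list[str]:
    """Rank news by word overlap with the query (a crude stand-in for vector search)."""
    q_words = set(query.lower().split())
    scored = sorted(NEWS, key=lambda doc: len(q_words & set(doc.lower().split())), reverse=True)
    return scored[:k]


def build_answer_prompt(user_context: str, question: str, news: list[str]) -> str:
    """Combine the investor profile, the retrieved news, and the question."""
    news_block = "\n".join(f"- {n}" for n in news)
    return (
        f"# USER CONTEXT\n{user_context}\n\n"
        f"# NEWS CONTEXT\n{news_block}\n\n"
        f"# QUESTION\n{question}\n\n"
        "Answer as a financial advisor, using the news context where relevant."
    )


def generate_qa(example: dict, teacher: Callable[[str], str]) -> dict:
    """Enrich one input example with news (RAG), then ask the teacher LLM to answer it."""
    news = retrieve_news(example["question"])
    prompt = build_answer_prompt(example["user_context"], example["question"], news)
    return {**example, "news_context": news, "answer": teacher(prompt)}
```

Passing the teacher as a callable keeps the sketch independent of any provider. In a dry run, a stub such as `lambda prompt: "stub answer"` can replace the real LLM call before you loop `generate_qa` over all N examples.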

...and bam! You generated a domain-specific Q&A dataset with almost no manual work.

Now, you use this data to fine-tune a smaller LLM (e.g., Falcon 7B) on a niche task, such as financial advising.

This technique is known as fine-tuning with distillation: a powerful LLM (e.g., GPT-4, Falcon 180B) acts as the teacher that generates the data, which is then used to fine-tune a smaller LLM (e.g., Falcon 7B) that acts as the student.
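Once the teacher has answered every example, the Q&A pairs still need to be turned into training examples for the student. Here is a minimal sketch, assuming a simple prompt/completion JSON Lines format. The `TEMPLATE` headings and the field names are hypothetical, so match them to whatever your fine-tuning code expects.

```python
import json

# Hypothetical prompt template for the student model.
TEMPLATE = (
    "### About me\n{user_context}\n\n"
    "### News\n{news}\n\n"
    "### Question\n{question}\n\n"
    "### Answer\n"
)


def to_training_record(qa: dict) -> dict:
    """Turn one teacher-labeled Q&A pair into a prompt/completion record."""
    prompt = TEMPLATE.format(
        user_context=qa["user_context"],
        news="\n".join(qa.get("news_context", [])),
        question=qa["question"],
    )
    return {"prompt": prompt, "completion": qa["answer"]}


def to_jsonl(qa_pairs: list[dict]) -> str:
    """Serialize the dataset as JSON Lines: one training example per line."""
    return "\n".join(json.dumps(to_training_record(qa)) for qa in qa_pairs)
```

At training time, the student sees `prompt` as input and learns to produce the teacher's `completion`. That is where the distillation happens.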

✒️ 𝘕𝘰𝘵𝘦: To ensure that the generated data is of high quality, you can hire a domain expert to check & refine it.

↳ To learn more about this technique, check out Pau Labarta's article “How to generate a Q&A dataset in less than 30 minutes”.

#3. My personal list of filtered resources about LLMs & vector DBs

The internet is full of 𝗹𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗿𝗲𝘀𝗼𝘂𝗿𝗰𝗲𝘀 about 𝗟𝗟𝗠𝘀 & 𝘃𝗲𝗰𝘁𝗼𝗿 𝗗𝗕𝘀. But 𝗺𝗼𝘀𝘁 𝗼𝗳 𝗶𝘁 is 𝘁𝗿𝗮𝘀𝗵.

After 𝟲 𝗺𝗼𝗻𝘁𝗵𝘀 of 𝗿𝗲𝘀𝗲𝗮𝗿𝗰𝗵𝗶𝗻𝗴 𝗟𝗟𝗠𝘀 & 𝘃𝗲𝗰𝘁𝗼𝗿 𝗗𝗕𝘀, here is a 𝗹𝗶𝘀𝘁 𝗼𝗳 𝗳𝗶𝗹𝘁𝗲𝗿𝗲𝗱 𝗿𝗲𝘀𝗼𝘂𝗿𝗰𝗲𝘀 that I 𝗽𝗲𝗿𝘀𝗼𝗻𝗮𝗹𝗹𝘆 𝘂𝘀𝗲 ↓

𝘉𝘭𝘰𝘨𝘴:

- philschmid

- Chip Huyen

- eugeneyan

- LLM Learning Lab

- Lil'Log

- VectorHub by SuperLinked

- Qdrant Blog

𝘈𝘳𝘵𝘪𝘤𝘭𝘦𝘴:

- Patterns for Building LLM-based Systems & Products

- RLHF: Reinforcement Learning from Human Feedback

- Illustrating Reinforcement Learning from Human Feedback (RLHF)

- Understanding Encoder And Decoder LLMs

- Building LLM applications for production

- Prompt Engineering

- Transformers

- Bidirectional Encoder Representations from Transformers (BERT)

- Multimodality and Large Multimodal Models (LMMs) by Chip Huyen

𝘝𝘪𝘥𝘦𝘰𝘴:

- Word Embedding and Word2Vec, Clearly Explained!!!

- Let's build GPT: from scratch, in code, spelled out

- Transformer Neural Networks, ChatGPT's foundation, Clearly Explained!!!

- Large Language Models with Semantic Search

- Decoder-Only Transformers, ChatGPTs specific Transformer, Clearly Explained!!!

𝘊𝘰𝘥𝘦 𝘙𝘦𝘱𝘰𝘴𝘪𝘵𝘰𝘳𝘪𝘦𝘴:

- OpenAI Cookbook

- generative-ai-for-beginners

𝘊𝘰𝘶𝘳𝘴𝘦𝘴:

- LangChain for LLM Application Development

- Building Systems with the ChatGPT API

- ChatGPT Prompt Engineering for Developers

...and hopefully, my 🔗 Hands-on LLMs course will soon appear alongside them.

Let me know what you think of this list and have fun learning 🔥

That’s it for today 👾

See you next Thursday at 9:00 a.m. CET.

Have a fantastic weekend!

…and see you next week for Lesson 8 of the Hands-On LLMs series 🔥

Paul

Whenever you’re ready, here is how I can help you:

The Full Stack 7-Steps MLOps Framework: a 7-lesson FREE course that will walk you step-by-step through how to design, implement, train, deploy, and monitor an ML batch system using MLOps good practices. It contains the source code + 2.5 hours of reading & video materials on Medium.

Machine Learning & MLOps Blog: in-depth topics about designing and productionizing ML systems using MLOps.

Machine Learning & MLOps Hub: a place where all my work is aggregated (courses, articles, webinars, podcasts, etc.).