DML: How to design an LLM system for a financial assistant using the 3-pipeline design

Lesson 1 | The Hands-on LLMs Series

Hello there, I am Paul Iusztin 👋🏼

Within this newsletter, I will help you decode complex topics about ML & MLOps one week at a time 🔥

As promised, starting this week, we will begin the series based on the Hands-on LLMs FREE course.

Note that this is not the course itself. It is an overview for busy people that focuses on the key aspects.

The entire course will soon be available on 🔗 GitHub.


Table of Contents:

What is the 3-pipeline design

How to apply the 3-pipeline design in architecting a financial assistant powered by LLMs

The tech stack used to build an end-to-end LLM system for a financial assistant

As the Hands-on LLMs course is still a 𝘄𝗼𝗿𝗸 𝗶𝗻 𝗽𝗿𝗼𝗴𝗿𝗲𝘀𝘀, we want to 𝗸𝗲𝗲𝗽 𝘆𝗼𝘂 𝘂𝗽𝗱𝗮𝘁𝗲𝗱 on our progress ↓

↳ Thus, we opened up the 𝗱𝗶𝘀𝗰𝘂𝘀𝘀𝗶𝗼𝗻 𝘁𝗮𝗯 under the course's GitHub repository, where we will 𝗸𝗲𝗲𝗽 𝘆𝗼𝘂 𝘂𝗽𝗱𝗮𝘁𝗲𝗱 with everything that is happening.

Also, if you have any 𝗶𝗱𝗲𝗮𝘀, 𝘀𝘂𝗴𝗴𝗲𝘀𝘁𝗶𝗼𝗻𝘀, 𝗾𝘂𝗲𝘀𝘁𝗶𝗼𝗻𝘀 or want to 𝗰𝗵𝗮𝘁, we encourage you to 𝗰𝗿𝗲𝗮𝘁𝗲 𝗮 "𝗻𝗲𝘄 𝗱𝗶𝘀𝗰𝘂𝘀𝘀𝗶𝗼𝗻".

↓ We want the course to fill your real needs ↓

↳ Hence, if your suggestion fits well with our hands-on course direction, we will consider implementing it.

Check it out and leave a ⭐ if you like what you see:

↳🔗 Hands-on LLMs course

#1. What is the 3-pipeline design

We all know how 𝗺𝗲𝘀𝘀𝘆 𝗠𝗟 𝘀𝘆𝘀𝘁𝗲𝗺𝘀 can get. That is where the 𝟯-𝗽𝗶𝗽𝗲𝗹𝗶𝗻𝗲 𝗮𝗿𝗰𝗵𝗶𝘁𝗲𝗰𝘁𝘂𝗿𝗲 𝗸𝗶𝗰𝗸𝘀 𝗶𝗻.

The 3-pipeline design is a way to bring structure & modularity to your ML system and improve your MLOps processes.

This is how ↓

=== 𝗣𝗿𝗼𝗯𝗹𝗲𝗺 ===

Despite advances in MLOps tooling, transitioning from prototype to production remains challenging.

In 2022, only 54% of models made it into production. Ouch.

So what happens?

Sometimes the model is not mature enough, sometimes there are some security risks, but most of the time...

...the architecture of the ML system is built with research in mind, or the ML system becomes a massive monolith that is extremely hard to refactor from offline to online.

So, good processes and a well-defined architecture are as crucial as good tools and models.

=== 𝗦𝗼𝗹𝘂𝘁𝗶𝗼𝗻 ===

𝘛𝘩𝘦 3-𝘱𝘪𝘱𝘦𝘭𝘪𝘯𝘦 𝘢𝘳𝘤𝘩𝘪𝘵𝘦𝘤𝘵𝘶𝘳𝘦.

First, let's understand what the 3-pipeline design is.

It is a mental map that helps you simplify the development process and split your monolithic ML pipeline into 3 components:

1. the feature pipeline

2. the training pipeline

3. the inference pipeline

...also known as the Feature/Training/Inference (FTI) architecture.

.

#𝟭. The feature pipeline transforms your data into features & labels, which are stored and versioned in a feature store.

#𝟮. The training pipeline ingests a specific version of the features & labels from the feature store and outputs the trained models, which are stored and versioned inside a model registry.

#𝟯. The inference pipeline takes a given version of the features and trained models and outputs the predictions to a client.
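To make these interfaces concrete, here is a minimal, framework-free Python sketch. `FeatureStore` and `ModelRegistry` are hypothetical in-memory stand-ins (not the API of any real feature store or model registry), and the "model" is a one-parameter linear fit. The only point it makes is that the three pipelines never call each other directly: they communicate exclusively through versioned artifacts.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class FeatureStore:
    """Hypothetical in-memory feature store: every write creates a new, immutable version."""

    versions: dict = field(default_factory=dict)

    def write(self, rows: list) -> int:
        version = len(self.versions) + 1
        self.versions[version] = tuple(rows)  # a stored version is never mutated
        return version

    def read(self, version: int) -> tuple:
        return self.versions[version]


@dataclass
class ModelRegistry:
    """Hypothetical in-memory model registry: every registered model gets a new version."""

    models: dict = field(default_factory=dict)

    def register(self, model: Callable[[list], float]) -> int:
        version = len(self.models) + 1
        self.models[version] = model
        return version

    def load(self, version: int) -> Callable[[list], float]:
        return self.models[version]


def feature_pipeline(raw: list, store: FeatureStore) -> int:
    # Raw records -> (features, label) pairs, written as a new feature version.
    rows = [([r["x"]], r["y"]) for r in raw]
    return store.write(rows)


def training_pipeline(store: FeatureStore, feature_version: int, registry: ModelRegistry) -> int:
    # Reads ONE feature version, fits y = w * x by least squares, registers the model.
    rows = store.read(feature_version)
    w = sum(f[0] * y for f, y in rows) / sum(f[0] ** 2 for f, _ in rows)
    return registry.register(lambda features: w * features[0])


def inference_pipeline(registry: ModelRegistry, model_version: int, features: list) -> float:
    # Loads a specific model version and returns a prediction for the client.
    return registry.load(model_version)(features)


store, registry = FeatureStore(), ModelRegistry()
fv = feature_pipeline([{"x": 1.0, "y": 2.0}, {"x": 2.0, "y": 4.0}], store)
mv = training_pipeline(store, fv, registry)
print(inference_pipeline(registry, mv, [3.0]))  # 6.0
```

Because training and inference both receive an explicit version number, you can re-run any pipeline on an older feature set or roll back to an older model without touching the other two.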

.

This is why the 3-pipeline design is so beautiful:

- it is intuitive

- it brings structure: at a high level, all ML systems can be reduced to these 3 components

- it defines a transparent interface between the 3 components, making it easier for multiple teams to collaborate

- the ML system has been built with modularity in mind since the beginning

- the 3 components can easily be divided between multiple teams (if necessary)

- every component can use the best stack of technologies available for the job

- every component can be deployed, scaled, and monitored independently

- the feature pipeline can easily be either batch, streaming or both
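Why can the feature pipeline be batch, streaming, or both? If the transformation logic lives in a single function, only the way records are fed to it changes. A minimal Python sketch, assuming hypothetical `id`/`headline` fields; a real streaming pipeline would run inside a framework such as Bytewax rather than a plain generator.

```python
from typing import Iterable, Iterator


def to_features(record: dict) -> dict:
    # One transformation, shared by both modes (field names are hypothetical).
    text = record["headline"].strip().lower()
    return {"id": record["id"], "text": text, "n_words": len(text.split())}


def run_batch(records: list[dict]) -> list[dict]:
    # Batch mode: transform a finite, already-collected dataset in one go.
    return [to_features(r) for r in records]


def run_streaming(source: Iterable[dict]) -> Iterator[dict]:
    # Streaming mode: transform records lazily, one at a time, as they arrive.
    for record in source:
        yield to_features(record)


news = [{"id": 1, "headline": "  Fed Holds Rates  "}, {"id": 2, "headline": "Stocks rally"}]
assert run_batch(news) == list(run_streaming(iter(news)))
```

Since both modes call the same `to_features`, the features written to the store are identical whichever mode produced them.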

But the most important benefit is that...

...by following this pattern, you can be confident that your ML model will move out of your Notebooks and into production.

What do you think about the 3-pipeline architecture? Have you used it?

If you want to know more about the 3-pipeline design, I recommend this awesome article from Hopsworks ↓

↳🔗 From MLOps to ML Systems with Feature/Training/Inference Pipelines

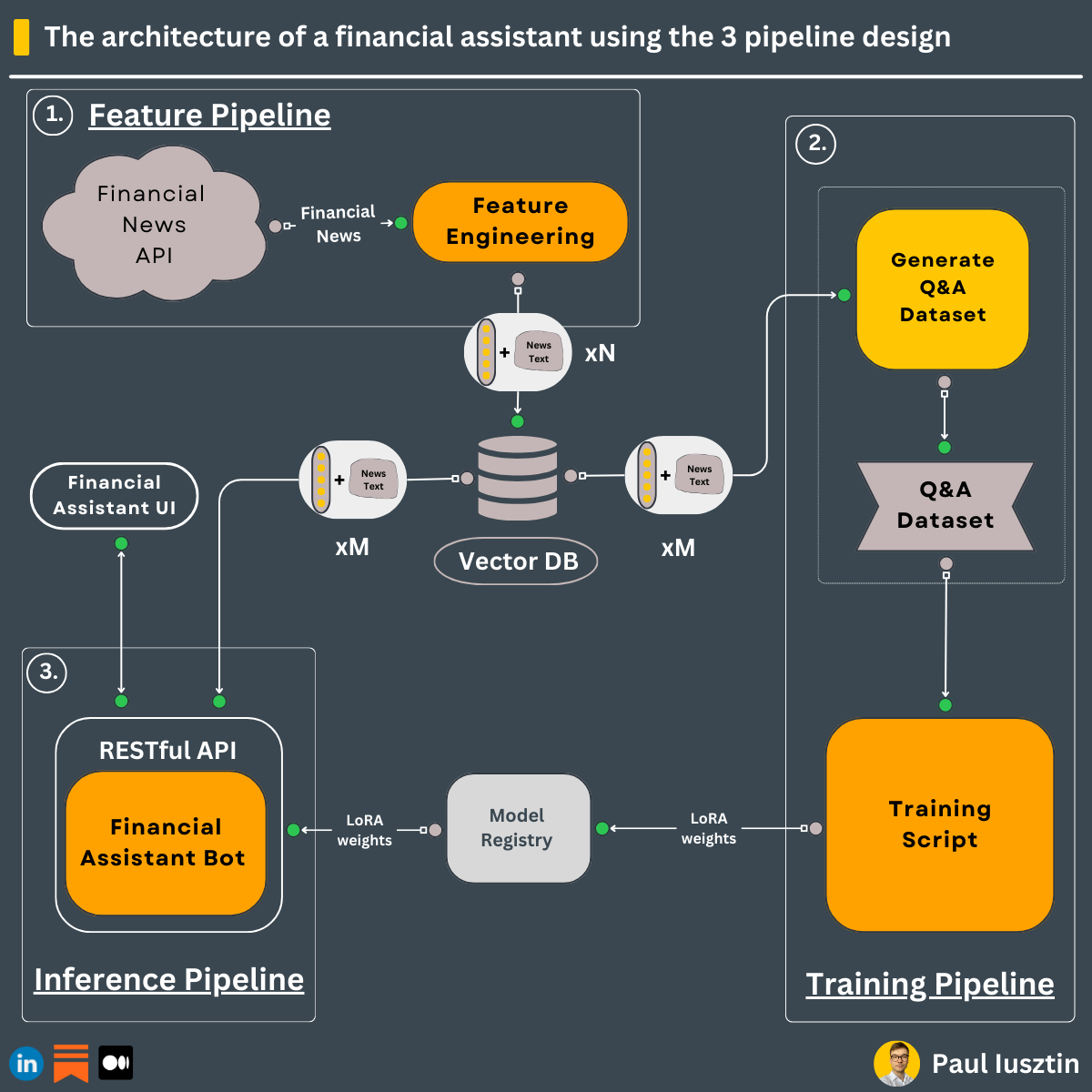

#2. How to apply the 3-pipeline design in architecting a financial assistant powered by LLMs

Building ML systems is hard, right? Wrong.

Here is how the 𝟯-𝗽𝗶𝗽𝗲𝗹𝗶𝗻𝗲 𝗱𝗲𝘀𝗶𝗴𝗻 can make 𝗮𝗿𝗰𝗵𝗶𝘁𝗲𝗰𝘁𝗶𝗻𝗴 the 𝗠𝗟 𝘀𝘆𝘀𝘁𝗲𝗺 for a 𝗳𝗶𝗻𝗮𝗻𝗰𝗶𝗮𝗹 𝗮𝘀𝘀𝗶𝘀𝘁𝗮𝗻𝘁 𝗲𝗮𝘀𝘆 ↓

.

I already covered the concepts of the 3-pipeline design in the previous section, but here is a quick recap:

"""

It is a mental map that helps you simplify the development process and split your monolithic ML pipeline into 3 components:

1. the feature pipeline

2. the training pipeline

3. the inference pipeline

...also known as the Feature/Training/Inference (FTI) architecture.

"""

.

Now, let's see how you can use the FTI architecture to build a financial assistant powered by LLMs ↓

#𝟭. 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗽𝗶𝗽𝗲𝗹𝗶𝗻𝗲

The feature pipeline is designed as a streaming pipeline that extracts real-time financial news from Alpaca and:

- cleans and chunks the news documents

- embeds the chunks using an encoder-only LM

- loads the embeddings + their metadata into a vector DB

- is deployed to AWS

In this architecture, the vector DB acts as the feature store.

The vector DB will stay in sync with the latest news to attach real-time context to the LLM using RAG.
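Here is the clean → chunk → embed → load flow as a plain-Python sketch under loud assumptions: `embed` is a hashed bag-of-words stand-in, NOT an encoder-only LM (in the course's stack, sentence-transformers does this job), `InMemoryVectorDB` is a hypothetical stand-in for Qdrant, and the chunk size and overlap are arbitrary. Pulling news from Alpaca and running it as a Bytewax stream are left out.

```python
import hashlib
import math
import re
from dataclasses import dataclass, field


def clean(text: str) -> str:
    # Strip HTML tags and collapse whitespace.
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", text).strip()


def chunk(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    # Fixed-size word windows that overlap, so context is not cut mid-thought.
    assert 0 <= overlap < max_words
    words = text.split()
    if not words:
        return []
    step = max_words - overlap
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + max_words]) for i in starts]


def embed(text: str, dim: int = 64) -> list[float]:
    # STAND-IN for an encoder-only LM: hashed bag-of-words, L2-normalised.
    vec = [0.0] * dim
    for token in re.findall(r"\w+", text.lower()):
        idx = int(hashlib.md5(token.encode()).hexdigest(), 16) % dim
        vec[idx] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


@dataclass
class InMemoryVectorDB:
    # Hypothetical stand-in for a vector DB such as Qdrant.
    points: dict = field(default_factory=dict)

    def upsert(self, point_id: str, vector: list[float], payload: dict) -> None:
        self.points[point_id] = (vector, payload)

    def search(self, query: list[float], k: int = 3) -> list[dict]:
        # Vectors are unit length, so the dot product equals cosine similarity.
        scored = sorted(
            self.points.values(),
            key=lambda point: sum(a * b for a, b in zip(query, point[0])),
            reverse=True,
        )
        return [payload for _, payload in scored[:k]]


def ingest(doc: dict, db: InMemoryVectorDB) -> int:
    # clean -> chunk -> embed -> load (embedding + metadata) into the vector DB.
    chunks = chunk(clean(doc["content"]))
    for i, text in enumerate(chunks):
        db.upsert(f"{doc['id']}-{i}", embed(text), {"doc_id": doc["id"], "text": text})
    return len(chunks)
```

Usage: call `ingest(...)` for every news document as it arrives, then `db.search(embed(question), k=3)` at query time. Upserting by a deterministic `"{doc_id}-{i}"` key means re-processing the same document overwrites its chunks instead of duplicating them, which is what keeps the vector DB in sync.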

#𝟮. 𝗧𝗿𝗮𝗶𝗻𝗶𝗻𝗴 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲

The training pipeline is split into 2 main steps:

↳ 𝗤&𝗔 𝗱𝗮𝘁𝗮𝘀𝗲𝘁 𝘀𝗲𝗺𝗶-𝗮𝘂𝘁𝗼𝗺𝗮𝘁𝗲𝗱 𝗴𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝗼𝗻 𝘀𝘁𝗲𝗽

It takes the vector DB (feature store) and a set of predefined questions (manually written) as input.

Then, you:

- use RAG to inject the context alongside the predefined questions

- use a large & powerful model, such as GPT-4, to generate the answers

- save the generated dataset under a new version
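A minimal sketch of this semi-automated step. It assumes `retrieve` is any function returning relevant news chunks from the vector DB and `teacher` is any callable wrapping the large model (e.g. a GPT-4 client); both are hypothetical parameters, and the prompt template and the `qa_v{N}.json` naming are illustrative, not the course's.

```python
import json
from pathlib import Path
from typing import Callable

PROMPT_TEMPLATE = (
    "You are an expert financial advisor.\n"
    "Relevant news:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_prompt(question: str, context: list[str]) -> str:
    # RAG: inject the retrieved news chunks alongside the predefined question.
    news = "\n".join(f"- {item}" for item in context)
    return PROMPT_TEMPLATE.format(context=news, question=question)


def generate_dataset(
    questions: list[str],
    retrieve: Callable[[str], list[str]],
    teacher: Callable[[str], str],
    out_dir: Path,
) -> Path:
    records = []
    for question in questions:
        context = retrieve(question)  # query the vector DB (feature store)
        answer = teacher(build_prompt(question, context))  # large model writes the answer
        records.append({"question": question, "context": context, "answer": answer})
    # Naive versioning: qa_v1.json, qa_v2.json, ... never overwrite an earlier dataset.
    out_dir.mkdir(parents=True, exist_ok=True)
    version = len(list(out_dir.glob("qa_v*.json"))) + 1
    path = out_dir / f"qa_v{version}.json"
    path.write_text(json.dumps(records, indent=2))
    return path
```

Storing the retrieved `context` next to each answer keeps the dataset self-describing, so the fine-tuning step can rebuild the exact prompt the teacher model saw.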

↳ 𝗙𝗶𝗻𝗲-𝘁𝘂𝗻𝗶𝗻𝗴 𝘀𝘁𝗲𝗽

- download a pre-trained LLM from HuggingFace

- load the LLM using QLoRA

- preprocess the generated Q&A dataset into a format expected by the LLM

- fine-tune the LLM

- push the best QLoRA weights (model) to a model registry

- deploy it using a serverless solution as a continuous training pipeline
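The preprocessing bullet is the least tool-specific step, so here is a plain-Python sketch of it. The `### Context/Question/Answer` template, the `</s>` end-of-sequence default, and the 90/10 split are assumptions: in practice the template must match the chosen LLM, and the EOS string should come from its tokenizer. Loading with QLoRA and the fine-tuning itself are left to the libraries listed in the tech stack.

```python
import random

TEMPLATE = (
    "### Context:\n{context}\n\n"
    "### Question:\n{question}\n\n"
    "### Answer:\n"
)


def to_training_example(record: dict, eos_token: str = "</s>") -> dict:
    # Keep the prompt separately so the loss can optionally be restricted to the answer.
    prompt = TEMPLATE.format(context="\n".join(record["context"]), question=record["question"])
    return {"prompt": prompt, "text": prompt + record["answer"] + eos_token}


def preprocess(records: list[dict], val_fraction: float = 0.1, seed: int = 42):
    # Format every Q&A record, shuffle deterministically, split into train/validation.
    examples = [to_training_example(r) for r in records]
    random.Random(seed).shuffle(examples)
    n_val = max(1, int(len(examples) * val_fraction)) if len(examples) > 1 else 0
    return examples[n_val:], examples[:n_val]
```

A fixed `seed` makes the split reproducible, so two fine-tuning runs on the same dataset version are evaluated on the same validation examples.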

#𝟯. 𝗜𝗻𝗳𝗲𝗿𝗲𝗻𝗰𝗲 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲

The inference pipeline is the financial assistant that the clients actively use.

It uses the vector DB (feature store) and QLoRA weights (model) from the model registry in the following way:

- download the pre-trained LLM from HuggingFace

- load the LLM together with the fine-tuned QLoRA weights

- connect the LLM and vector DB into a chain

- use RAG to add relevant financial news from the vector DB

- deploy it using a serverless solution under a RESTful API
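Stripped of LangChain, Qdrant, and the model-loading code, the chain boils down to a closure. This sketch assumes `retrieve(question, k)` queries the vector DB and `llm(prompt)` wraps the base LLM plus the fine-tuned QLoRA weights; both are hypothetical callables, the prompt wording is illustrative, and serving it behind a RESTful API (Beam in the course) is out of scope.

```python
from typing import Callable


def make_assistant(
    retrieve: Callable[[str, int], list[str]],
    llm: Callable[[str], str],
    k: int = 3,
) -> Callable[[str], str]:
    """Chain retrieval and generation: question -> relevant news -> prompt -> answer."""

    def answer(question: str) -> str:
        # RAG: fetch the k most relevant financial news chunks for this question.
        news = retrieve(question, k)
        context = "\n".join(f"- {n}" for n in news) or "- (no relevant news found)"
        prompt = (
            "You are a financial assistant. Use the news below if relevant.\n"
            f"News:\n{context}\n\nQuestion: {question}\nAnswer:"
        )
        return llm(prompt)

    return answer
```

Injecting `retrieve` and `llm` as parameters keeps the chain testable with stubs and lets you swap the vector DB or the model version from the registry without changing the chain.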

Here are the main benefits of using the FTI architecture:

- it defines a transparent interface between the 3 modules

- every component can use different technologies to implement and deploy the pipeline

- the 3 pipelines are loosely coupled through the feature store & model registry

- every component can be scaled independently

See this architecture in action in my 🔗 𝗛𝗮𝗻𝗱𝘀-𝗼𝗻 𝗟𝗟𝗠𝘀 FREE course.

#3. The tech stack used to build an end-to-end LLM system for a financial assistant

The tools are divided based on the 𝟯-𝗽𝗶𝗽𝗲𝗹𝗶𝗻𝗲 (aka 𝗙𝗧𝗜) 𝗮𝗿𝗰𝗵𝗶𝘁𝗲𝗰𝘁𝘂𝗿𝗲:

=== 𝗙𝗲𝗮𝘁𝘂𝗿𝗲 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲 ===

What do you need to build a streaming pipeline?

→ streaming processing framework: Bytewax (brings the speed of Rust into our beloved Python ecosystem)

→ parse, clean, and chunk documents: unstructured

→ validate document structure: pydantic

→ encoder-only language model: HuggingFace sentence-transformers, PyTorch

→ vector DB: Qdrant

→ deploy: Docker, AWS

→ CI/CD: GitHub Actions

=== 𝗧𝗿𝗮𝗶𝗻𝗶𝗻𝗴 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲 ===

What do you need to build a fine-tuning pipeline?

→ pretrained LLM: HuggingFace Hub

→ parameter efficient tuning method: peft (= LoRA)

→ quantization: bitsandbytes (= QLoRA)

→ training: HuggingFace transformers, PyTorch, trl

→ distributed training: accelerate

→ experiment tracking: Comet ML

→ model registry: Comet ML

→ prompt monitoring: Comet ML

→ continuous training serverless deployment: Beam
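To see why peft (LoRA) and bitsandbytes (QLoRA) make fine-tuning fit on modest hardware, here is the standard LoRA arithmetic (from the LoRA method itself, not specific to this course). A frozen pretrained weight matrix $W_0 \in \mathbb{R}^{d \times k}$ receives a low-rank update, and only $A$ and $B$ are trained. The values $d = k = 4096$ and $r = 8$ are illustrative, not the course's configuration.

```latex
h = W_0 x + \Delta W x = W_0 x + \frac{\alpha}{r} B A x,
\qquad B \in \mathbb{R}^{d \times r},\; A \in \mathbb{R}^{r \times k},\; r \ll \min(d, k)

\text{trainable params per matrix: full } d \cdot k = 4096 \cdot 4096 = 16{,}777{,}216,
\qquad \text{LoRA } r\,(d + k) = 8 \cdot 8192 = 65{,}536 \;(\approx 0.39\%)
```

QLoRA keeps the same trainable $A$ and $B$ but stores the frozen $W_0$ in 4-bit (NF4) precision, which is the part bitsandbytes provides.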

=== 𝗜𝗻𝗳𝗲𝗿𝗲𝗻𝗰𝗲 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲 ===

What do you need to build a financial assistant?

→ framework for developing applications powered by language models: LangChain

→ model registry: Comet ML

→ inference: HuggingFace transformers, PyTorch, peft (to load the LoRA weights)

→ quantization: bitsandbytes

→ distributed inference: accelerate

→ encoder-only language model: HuggingFace sentence-transformers

→ vector DB: Qdrant

→ prompt monitoring: Comet ML

→ RESTful API serverless service: Beam

.

As you can see, some tools overlap between the FTI pipelines, but not all.

This is the beauty of the 3-pipeline design, as every component represents a different entity for which you can pick the best stack to build, deploy, and monitor.

You can go wild and use TensorFlow in one of the components if you want your colleagues to hate you 😂

See the tools in action in my 🔗 𝗛𝗮𝗻𝗱𝘀-𝗼𝗻 𝗟𝗟𝗠𝘀 FREE course.

That’s it for today 👾

See you next Thursday at 9:00 a.m. CET.

Have a fantastic weekend!

…and see you next week for Lesson 2 of the Hands-On LLMs series 🔥

Paul

Whenever you’re ready, here is how I can help you:

The Full Stack 7-Steps MLOps Framework: a 7-lesson FREE course that will walk you step-by-step through how to design, implement, train, deploy, and monitor an ML batch system using MLOps good practices. It contains the source code + 2.5 hours of reading & video materials on Medium.

Machine Learning & MLOps Blog: in-depth topics about designing and productionizing ML systems using MLOps.

Machine Learning & MLOps Hub: a place where all my work is aggregated (courses, articles, webinars, podcasts, etc.).