DML: What is the difference between your ML development and continuous training environments?

3 techniques you must know to evaluate your LLMs quickly. Experimentation vs. continuous training environments.

Hello there, I am Paul Iusztin 👋🏼

Within this newsletter, I will help you decode complex topics about ML & MLOps one week at a time 🔥

This week’s ML & MLOps topics:

3 techniques you must know to evaluate your LLMs quickly

What is the difference between your ML development and continuous training environments?

Story: Job roles tell you there is just one type of MLE, but there are actually 3.

But first, I want to let you know that after 1 year of making content, I finally decided to share my content on Twitter/X.

I made this decision because everybody has a different way of reading and interacting with their socials.

...and I want everyone to enjoy my content on their favorite platform.

I even bought that stu*** blue tick to show that I am serious about this 😂

So...

If you like my content and you are a Twitter/X person ↓

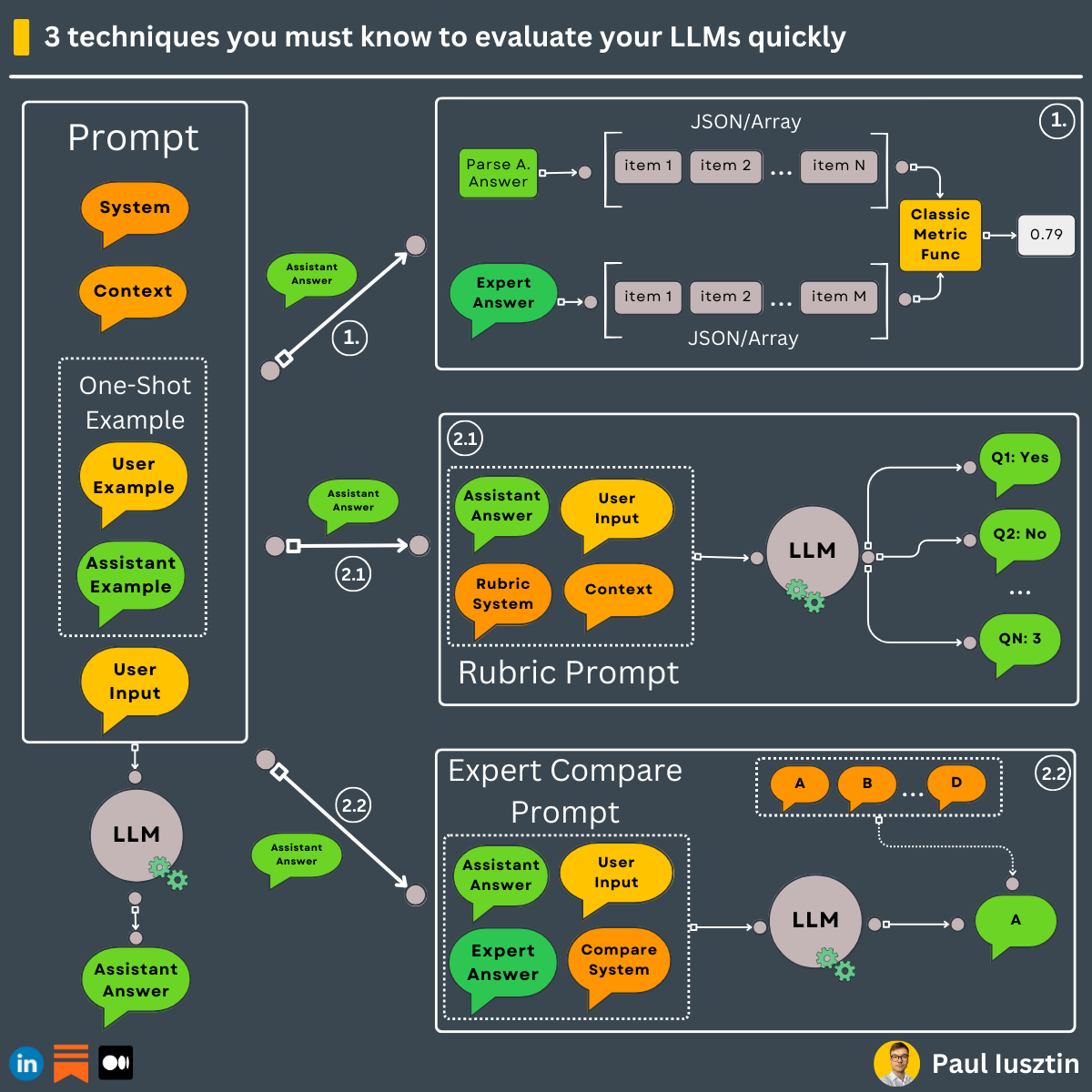

#1. 3 techniques you must know to evaluate your LLMs quickly

Manually testing the output of your LLMs is a tedious and painful process → you need to automate it.

In generative AI, most of the time, you cannot leverage standard metrics.

Thus, the real question is, how do you evaluate the outputs of an LLM?

Depending on your problem, here is what you can do ↓

#𝟭. 𝗦𝘁𝗿𝘂𝗰𝘁𝘂𝗿𝗲𝗱 𝗮𝗻𝘀𝘄𝗲𝗿𝘀 - 𝘆𝗼𝘂 𝗸𝗻𝗼𝘄 𝗲𝘅𝗮𝗰𝘁𝗹𝘆 𝘄𝗵𝗮𝘁 𝘆𝗼𝘂 𝘄𝗮𝗻𝘁 𝘁𝗼 𝗴𝗲𝘁

Even if you use an LLM to generate text, you can ask it to generate a response in a structured format (e.g., JSON) that can be parsed.

You know exactly what you want (e.g., a list of products extracted from the user's question).

Thus, you can easily compare the generated and ideal answers using classic approaches.

For example, when extracting the list of products from the user's input, you can do the following:

- check if the LLM outputs a valid JSON structure

- use a classic method to compare the generated and ideal answers
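As a minimal sketch of these two checks, assuming the LLM was prompted to return a hypothetical `{"products": [...]}` JSON object: first validate the structure, then score the parsed list against the ideal one with classic set-based precision, recall, and F1. The schema, the function names, and the choice of F1 as the "classic method" are illustrative assumptions, not a fixed recipe.

```python
from __future__ import annotations

import json


def parse_products(llm_output: str) -> list[str] | None:
    """Return the product list, or None if the output is not valid JSON with the
    assumed {"products": [...]} shape (the schema is a hypothetical example)."""
    try:
        data = json.loads(llm_output)
    except json.JSONDecodeError:
        return None  # not even valid JSON
    products = data.get("products") if isinstance(data, dict) else None
    if not isinstance(products, list) or not all(isinstance(p, str) for p in products):
        return None  # valid JSON, but not the structure we asked for
    return products


def score_products(generated: list[str], ideal: list[str]) -> dict[str, float]:
    """Classic set-based precision, recall, and F1 after light normalization."""
    gen = {p.strip().lower() for p in generated}
    ref = {p.strip().lower() for p in ideal}
    true_pos = len(gen & ref)
    precision = true_pos / len(gen) if gen else 0.0
    recall = true_pos / len(ref) if ref else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


products = parse_products('{"products": ["Laptop", "USB-C charger"]}')
if products is None:
    print("invalid JSON structure")
else:
    print(score_products(products, ["laptop", "mouse"]))
    # {'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
```

Treating an unparsable output as its own failure case (instead of a score of zero) keeps "the model broke the format" separate from "the model extracted the wrong products".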

#𝟮. 𝗡𝗼 "𝗿𝗶𝗴𝗵𝘁" 𝗮𝗻𝘀𝘄𝗲𝗿 (𝗲.𝗴., 𝗴𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝗻𝗴 𝗱𝗲𝘀𝗰𝗿𝗶𝗽𝘁𝗶𝗼𝗻𝘀, 𝘀𝘂𝗺𝗺𝗮𝗿𝗶𝗲𝘀, 𝗲𝘁𝗰.)

When generating sentences, the LLM can use different styles, words, etc. Thus, traditional metrics (e.g., BLEU score) are too rigid to be useful.

You can leverage another LLM to test the output of your initial LLM. The trick is in what questions to ask.

When testing LLMs, you won't have a testing split as big as you are used to. A set of 10-100 tricky examples usually does the job (so it won't be costly).

Here, we have 2 sub-scenarios:

↳ 𝟮.𝟭 𝗪𝗵𝗲𝗻 𝘆𝗼𝘂 𝗱𝗼𝗻'𝘁 𝗵𝗮𝘃𝗲 𝗮𝗻 𝗶𝗱𝗲𝗮𝗹 𝗮𝗻𝘀𝘄𝗲𝗿 𝘁𝗼 𝗰𝗼𝗺𝗽𝗮𝗿𝗲 𝘁𝗵𝗲 𝗮𝗻𝘀𝘄𝗲𝗿 𝘁𝗼 (𝘆𝗼𝘂 𝗱𝗼𝗻'𝘁 𝗵𝗮𝘃𝗲 𝗴𝗿𝗼𝘂𝗻𝗱 𝘁𝗿𝘂𝘁𝗵)

You don't have access to an expert to write an ideal answer for a given question to compare it to.

Based on the initial prompt and the generated answer, you can compile a set of questions and pass them to an LLM. Usually, these are Y/N questions whose answers are easy to quantify and that check the validity of the generated answer.

This is known as "Rubric Evaluation".

For example:

"""

- Is there any disagreement between the response and the context? (Y or N)

- Count how many questions the user asked. (output a number)

...

"""

This strategy is intuitive, as you can ask the LLM any question you are interested in as long as it can output a quantifiable answer (Y/N or a number).
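Here is a minimal sketch of rubric evaluation, assuming a hypothetical `JudgeLLM` callable (prompt in, raw text reply out) that wraps whatever model you use as the judge. It numbers the rubric questions from the example above, asks for one `<number>: <answer>` line per question, and keeps only well-formed Y/N or integer answers. The prompt wording, the reply format, and the function names are assumptions for illustration.

```python
from __future__ import annotations

import re
from typing import Callable

# Hypothetical type: any function that sends a prompt to your judge LLM
# and returns the raw text reply.
JudgeLLM = Callable[[str], str]

RUBRIC = [
    "Is there any disagreement between the response and the context? (Y or N)",
    "Count how many questions the user asked. (output a number)",
]


def build_rubric_prompt(context: str, response: str, questions: list[str]) -> str:
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    return (
        "You are grading a response generated by another model.\n"
        f"Context:\n{context}\n\nResponse:\n{response}\n\n"
        "Answer every question on its own line as '<number>: <answer>', "
        "where <answer> is Y, N, or an integer.\n"
        f"{numbered}"
    )


def parse_rubric_answers(reply: str, n_questions: int) -> dict[int, str | int]:
    """Keep only well-formed '<number>: <answer>' lines so scores stay quantifiable."""
    answers: dict[int, str | int] = {}
    pattern = r"^\s*(\d+)\s*:\s*(Y|N|\d+)\s*$"
    for match in re.finditer(pattern, reply, flags=re.MULTILINE | re.IGNORECASE):
        idx, value = int(match.group(1)), match.group(2).upper()
        if 1 <= idx <= n_questions:
            answers[idx] = int(value) if value.isdigit() else value
    return answers


def rubric_evaluate(llm: JudgeLLM, context: str, response: str, questions: list[str]) -> dict[int, str | int]:
    reply = llm(build_rubric_prompt(context, response, questions))
    return parse_rubric_answers(reply, len(questions))
```

Across your 10-100 test examples, you can then aggregate each question separately, e.g., the share of "N" answers to the disagreement question. A missing key in the returned dict signals that the judge did not answer that question in the requested format.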

↳ 𝟮.𝟮. 𝗪𝗵𝗲𝗻 𝘆𝗼𝘂 𝗱𝗼 𝗵𝗮𝘃𝗲 𝗮𝗻 𝗶𝗱𝗲𝗮𝗹 𝗮𝗻𝘀𝘄𝗲𝗿 𝘁𝗼 𝗰𝗼𝗺𝗽𝗮𝗿𝗲 𝘁𝗵𝗲 𝗿𝗲𝘀𝗽𝗼𝗻𝘀𝗲 𝘁𝗼 (𝘆𝗼𝘂 𝗵𝗮𝘃𝗲 𝗴𝗿𝗼𝘂𝗻𝗱 𝘁𝗿𝘂𝘁𝗵)

When you can access an answer manually created by a group of experts, things are easier.

You will use an LLM to compare the generated and ideal answers based on semantics, not structure.

For example:

"""

(A) The submitted answer is a subset of the expert answer and entirely consistent.

...

(E) The answers differ, but these differences don't matter.

"""

#2. What is the difference between your ML development and continuous training environments?

They might do the same thing, but their design is entirely different ↓

𝗠𝗟 𝗗𝗲𝘃𝗲𝗹𝗼𝗽𝗺𝗲𝗻𝘁 𝗘𝗻𝘃𝗶𝗿𝗼𝗻𝗺𝗲𝗻𝘁

At this point, your main goal is to ingest the raw and preprocessed data through versioned artifacts (or a feature store), analyze it & generate as many experiments as possible to find the best:

- model

- hyperparameters

- augmentations

Based on your business requirements, you must maximize some specific metrics, find the best latency-accuracy trade-offs, etc.

You will use an experiment tracker to compare all these experiments.
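For example, once your experiments are logged, a latency-accuracy trade-off can be as simple as "the most accurate run within a latency budget". The sketch below assumes you have exported run summaries from your tracker into a hypothetical `Run` record; the field names and the p95-latency criterion are illustrative, and real trackers expose their own query APIs.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Run:
    """Hypothetical summary of one experiment exported from your tracker."""
    run_id: str
    model: str
    accuracy: float
    p95_latency_ms: float
    hyperparameters: dict[str, float] = field(default_factory=dict)
    augmentations: list[str] = field(default_factory=list)


def pick_best_run(runs: list[Run], max_latency_ms: float) -> Run | None:
    """Return the most accurate run that still meets the latency budget."""
    eligible = [run for run in runs if run.p95_latency_ms <= max_latency_ms]
    return max(eligible, key=lambda run: run.accuracy, default=None)


# Toy numbers for illustration only.
runs = [
    Run("r1", "resnet50", accuracy=0.91, p95_latency_ms=80.0),
    Run("r2", "vit_b16", accuracy=0.94, p95_latency_ms=150.0),
]
print(pick_best_run(runs, max_latency_ms=100.0).run_id)  # r1
```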

After you settle on the best one, the output of your ML development environment will be:

- a new version of the code

- a new version of the configuration artifact

Here is where the research happens. Thus, you need flexibility.

That is why we decouple it from the rest of the ML system through artifacts (data, config, & code artifacts).

𝗖𝗼𝗻𝘁𝗶𝗻𝘂𝗼𝘂𝘀 𝗧𝗿𝗮𝗶𝗻𝗶𝗻𝗴 𝗘𝗻𝘃𝗶𝗿𝗼𝗻𝗺𝗲𝗻𝘁

Here is where you want to take the data, code, and config artifacts and:

- train the model on all the required data

- output a staging versioned model artifact

- test the staging model artifact

- if the test passes, label it as the new production model artifact

- deploy it to the inference services
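The five steps above can be sketched against a toy, in-memory model registry that stores numbered versions and movable "staging"/"production" aliases. `ModelRegistry` and the `train_fn`, `test_fn`, and `deploy_fn` callables are hypothetical stand-ins for your real registry, training pipeline, test suite, and deployment step.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class ModelRegistry:
    """Toy in-memory stand-in for a model registry: numbered versions plus
    movable aliases such as "staging" and "production"."""
    versions: dict[int, Any] = field(default_factory=dict)
    aliases: dict[str, int] = field(default_factory=dict)

    def register(self, model: Any, alias: str) -> int:
        version = len(self.versions) + 1
        self.versions[version] = model
        self.aliases[alias] = version
        return version

    def promote(self, from_alias: str, to_alias: str) -> int:
        version = self.aliases[from_alias]
        self.aliases[to_alias] = version
        return version


def continuous_training_step(
    registry: ModelRegistry,
    train_fn: Callable[[Any, Any], Any],
    test_fn: Callable[[Any], bool],
    deploy_fn: Callable[[Any], None],
    data: Any,
    config: Any,
) -> int | None:
    """Return the newly promoted production version, or None if the tests fail."""
    model = train_fn(data, config)                        # train on all the required data
    staging = registry.register(model, alias="staging")  # staging versioned model artifact
    if not test_fn(registry.versions[staging]):          # test the staging artifact
        return None                                       # production alias stays untouched
    version = registry.promote("staging", "production")  # label it as production
    deploy_fn(registry.versions[version])                # deploy to the inference services
    return version
```

Because promotion only moves an alias, a failed test leaves the current production model in place, and rolling back is a matter of pointing "production" at an older version.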

A common strategy is to build a CI/CD pipeline (e.g., using GitHub Actions) that:

- builds a Docker image from the code artifact (e.g., triggered manually or when a new artifact version is created)

- starts the training pipeline inside the Docker container, which pulls the feature and config artifacts and outputs the staging model artifact

- waits for you to manually review the training report → if everything went fine, you manually trigger the testing pipeline

- waits for you to manually review the testing report → if everything worked fine (e.g., the model is better than the previous one), you manually trigger the CD pipeline that deploys the new model to your inference services
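The manual checkpoints in this pipeline can be modeled as gated stages: a gated stage only starts after a human approves the report produced by the previous stage. This is a minimal local sketch with hypothetical stage functions and an `approve` callback; in GitHub Actions, the gates would typically be separate, manually triggered workflows (e.g., via `workflow_dispatch`) rather than Python code.

```python
from __future__ import annotations

from typing import Callable

# Hypothetical: each stage runs one pipeline step and returns a text report.
Stage = Callable[[], str]
# Hypothetical: a human decides whether to trigger `next_stage` after reading `report`.
Approver = Callable[[str, str], bool]


def run_gated_pipeline(stages: list[tuple[str, Stage, bool]], approve: Approver) -> list[str]:
    """Run stages in order; a stage flagged as gated only starts after a human
    approves the report produced by the previous stage."""
    completed: list[str] = []
    report = ""
    for name, stage, gated in stages:
        if gated and not approve(name, report):
            print(f"Stopped before '{name}': previous report not approved.")
            break
        report = stage()
        completed.append(name)
    return completed


def ask_human(next_stage: str, report: str) -> bool:
    """Console approver used for local experiments."""
    print(report)
    return input(f"Trigger '{next_stage}'? [y/N] ").strip().lower() == "y"


STAGES = [
    ("build_docker_image", lambda: "image built from the code artifact", False),
    ("training", lambda: "training report", False),
    ("testing", lambda: "testing report", True),
    ("deploy", lambda: "model deployed", True),
]
# run_gated_pipeline(STAGES, ask_human)
```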

Note how the model registry helps you decouple all the components.

Also, because training and testing metrics are not always black & white, it is hard to fully automate the CI/CD pipeline.

Thus, you need a human in the loop when deploying ML models.

To conclude...

The ML development environment is where you do your research to find better models:

- 𝘪𝘯𝘱𝘶𝘵: data artifact

- 𝘰𝘶𝘵𝘱𝘶𝘵: code & config artifacts

The continuous training environment is used to train & test the production model at scale:

- 𝘪𝘯𝘱𝘶𝘵: data, code, config artifacts

- 𝘰𝘶𝘵𝘱𝘶𝘵: model artifact
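The two input/output contracts above can be written down as typed function signatures. In this sketch, the bodies are placeholders (the returned versions are hypothetical); the point is that the environments only communicate through versioned artifacts, so the model artifact can record exactly which data, code, and config versions produced it.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    """A named, versioned artifact (data, code, or config)."""
    name: str
    version: str


@dataclass(frozen=True)
class ModelArtifact:
    version: str
    trained_from: tuple[Artifact, ...]  # lineage: which artifacts produced this model


def development_environment(data: Artifact) -> tuple[Artifact, Artifact]:
    """Research: experiment on the data, output new code & config versions.
    Placeholder body; only the input/output contract matters here."""
    return Artifact("code", "v2"), Artifact("config", "v2")


def continuous_training_environment(data: Artifact, code: Artifact, config: Artifact) -> ModelArtifact:
    """Train & test at scale, output a model artifact.
    Placeholder body that only records lineage."""
    return ModelArtifact(version="v1", trained_from=(data, code, config))


data = Artifact("features", "v1")
code, config = development_environment(data)
model = continuous_training_environment(data, code, config)
print(model.trained_from)
```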

This is not a definitive solution, as how to best design ML systems is still an open question.

But if you want to see this strategy in action ↓

↳🔗 Check out my FREE course: The Full Stack 7-Steps MLOps Framework.

Story: Job roles tell you there is just one type of MLE, but there are actually 3

Here they are ↓

These are the 3 ML engineering personas I found while working with different teams in the industry:

#𝟭. 𝗥𝗲𝘀𝗲𝗮𝗿𝗰𝗵𝗲𝗿𝘀 𝘂𝗻𝗱𝗲𝗿𝗰𝗼𝘃𝗲𝗿

They like to stay in touch with the latest papers, understand the architecture of models, optimize them, run experiments, etc.

They are great at picking the best models but not that great at writing clean code and scaling the solution.

#𝟮. 𝗦𝗪𝗘 𝘂𝗻𝗱𝗲𝗿𝗰𝗼𝘃𝗲𝗿

They pretend they read papers but don't (maybe only when they have to). They are more concerned with writing modular code and data quality than the latest hot models. Usually, these are the "data-centric" people.

They are great at writing clean code & processing data at scale but lack deep mathematical skills to develop complex DL solutions.

#𝟯. 𝗠𝗟𝗢𝗽𝘀 𝗳𝗿𝗲𝗮𝗸𝘀

They ultimately don't care about the latest research & hot models. They are more into the latest MLOps tools and building ML systems. They love to automate everything and use as many tools as possible.

Great at scaling the solution and building ML pipelines, but not great at running experiments & tweaking ML models. They love to treat the ML model as a black box.

I started as #1 until I realized I hated it. Now I am a mix of:

→ #𝟭. 20%

→ #𝟮. 40%

→ #𝟯. 40%

But that doesn't mean one is better; these types are complementary.

A great ML team should have at least one of each persona.

What do you think? Did I get it right?

That’s it for today 👾

See you next Thursday at 9:00 a.m. CET.

Have a fantastic weekend!

Paul

Whenever you’re ready, here is how I can help you:

The Full Stack 7-Steps MLOps Framework: a 7-lesson FREE course that will walk you step-by-step through how to design, implement, train, deploy, and monitor an ML batch system using MLOps good practices. It contains the source code + 2.5 hours of reading & video materials on Medium.

Machine Learning & MLOps Blog: in-depth topics about designing and productionizing ML systems using MLOps.

Machine Learning & MLOps Hub: a place where all my work is aggregated (courses, articles, webinars, podcasts, etc.).