This article was authored by Trishala Thakur, a full-stack data scientist specializing in predictive maintenance, time series forecasting, and deep learning for environmental and energy applications. It draws on a Data Center Scale Computing project completed at the University of Colorado Boulder in collaboration with Kalyani Kailas Jaware, a talented data analyst.

This article introduces a framework for fine-tuning pre-trained deep learning models on Google Cloud Platform (GCP) and deploying them as APIs for pipeline integration and real-time predictions.

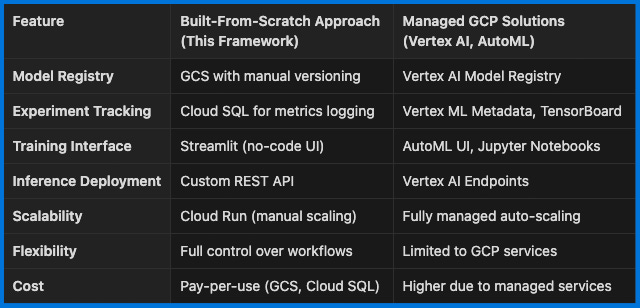

Unlike standard GCP machine learning (ML) workflows, this system is built entirely from scratch, providing hands-on experience in constructing each component: model registry, experiment tracking, and inference system; without relying on Vertex AI, MLFlow, or other managed solutions.

The goal is twofold:

For ML practitioners – Gain a deeper understanding of how core ML infrastructure works by manually building the essential components (along with a no-code UI for fine tuning).

For non-experts – Provide a no-code interface for fine-tuning models without dealing with infrastructure complexities.

Why Build Instead of Using Existing Tools?

Many cloud-based ML pipelines use off-the-shelf solutions like Vertex AI, Comet, W&B, or MLFlow. While these services streamline workflows, they abstract away key design decisions related to model versioning, experiment tracking, and inference scaling.

By implementing everything from scratch, we:

Learn how ML infrastructure works under the hood

Gain full control over model management and deployment

Understand trade-offs between managed vs. custom-built solutions

This system replicates the essential functionalities of a modern MLOps pipeline while staying cloud-native and cost-efficient.

ARCHITECTURE

Key Components of the Framework

1. Model Registry: Storing Models in Google Cloud Storage (GCS)

A model registry serves as a structured repository for managing different versions of trained models. Instead of using a managed solution like Vertex AI Model Registry, we leverage Google Cloud Storage (GCS) as a custom-built model registry.

How GCS Functions as a Model Registry

Versioning: Models are stored in structured directories (e.g., /models/{model_name}/{version}/) to enable retrieval and rollback.

Flexibility: Unlike Vertex AI’s registry, this allows unrestricted access to model artifacts via API calls, making it vendor-agnostic.

Takeaway: While tools like Vertex AI automate model tracking, using GCS teaches us how manual version control works in a production ML system.

2. Experiment Tracking: Storing Metrics in Cloud SQL

An experiment tracker stores training runs, hyperparameters, and performance metrics, allowing users to compare models systematically. Instead of using tools like MLFlow, Weights & Biases (W&B), or Comet, we use Google Cloud SQL to build our own lightweight tracking system.

How Cloud SQL Functions as an Experiment Tracker

Stores training metadata (model version, dataset, hyperparameters, loss/accuracy) in a structured SQL table.

Supports querying for best models (e.g., “Fetch top 5 models with the lowest validation loss”).

Provides persistence and relational integrity compared to JSON logs or spreadsheets.

Example:

Takeaway: While tools like TensorBoard and Vertex ML Metadata automate experiment logging, this approach teaches the fundamentals of structured ML metadata management.

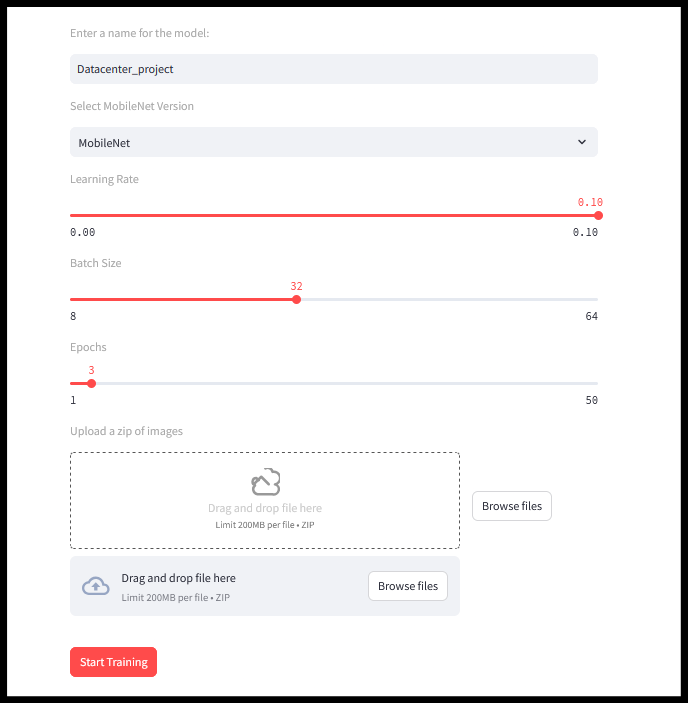

3. Unified Accessibility with Streamlit: No-Code Model Training

Unlike traditional GCP pipelines requiring Python and Jupyter notebooks, this framework provides a Streamlit-based UI that allows users to:

Upload datasets for fine-tuning

Select hyperparameters and start training

View live training metrics and model performance

Takeaway: This simplifies ML workflows for non-experts, similar to AutoML solutions, but without the black-box limitations.

4. Deployment & Real-Time Inference: Custom REST API

Instead of Vertex AI Prediction endpoints, we build a RESTful API that serves fine-tuned models directly from GCS.

Sample code:

Why Not Managed Prediction Services?

Custom Preprocessing: Managed services have strict input constraints (e.g., Gemini API doesn’t support JSON payloads). Our approach enables flexible input handling.

Scalability Control: Serverless deployments like Cloud Run provide auto-scaling while avoiding vendor lock-in.

Lower Cost: Avoids the per-query pricing model of Vertex AI endpoints.

Takeaway: This method offers full control over inference logic, unlike managed ML services that impose strict format constraints.

Comparing This Approach to Managed GCP Solutions

Workflow Example: Fine-Tuning and Deploying a Language Model for Customer Support

Scenario:

You want to fine-tune a language model (like DistilBERT) on customer chat data to automatically classify queries into categories like Billing, Tech Support, and Cancellations.

How the Workflow Applies:

User Interaction:

Upload the CSV dataset (chat logs) via Streamlit UI

Choose base model: distilbert-base-uncased

Set hyperparameters (e.g., learning rate 0.0005, epochs 5)

Training Workflow:

User clicks Train Model

Fine-tuning happens on a GCP VM

Model saved at /models/chat_classifier/v1.0/model.pkl in GCS

Test Metrics:

Validation accuracy and loss logged in Cloud SQL for experiment tracking

Model API:

User clicks Activate API

Flask API is deployed on Cloud Run for real-time predictions

Why This Approach Matters

Teaches how ML infrastructure works from the ground up (model tracking, experiment logging, inference serving).

Provides full control over storage, versioning, and deployment instead of relying on black-box services.

Lowers costs by avoiding proprietary ML tooling while keeping a cloud-native architecture.

This system is ideal for ML engineers who want hands-on experience in designing ML infrastructure or data scientists who prefer an accessible, no-code approach to model fine-tuning.

While this framework proves that we can build everything from scratch, it also highlights why tools like MLFlow, Vertex AI, and W&B exist.

Lessons Learned from Building Our Own ML Infrastructure

Model management is complex – A structured registry (like Vertex AI) prevents versioning issues.

Tracking experiments manually can be tedious – MLFlow automates metadata storage and comparisons.

Inference requires careful optimization – Managed endpoints simplify model hosting and scaling.

By experiencing these challenges firsthand, we gain a better insight into when to use pre-built solutions versus rolling out custom implementations.

Conclusion: When to Build vs. When to Use Existing Tools

Build from scratch if you need full control, want to avoid vendor lock-in, or are learning how ML infrastructure works.

Use managed tools if scalability, ease of use, and automation are priorities.

Go hybrid for the best of both worlds – A balanced approach often works best.

Use GCS as a model registry but integrate MLFlow for experiment tracking.

Deploy a custom REST API for inference while leveraging Cloud Run for auto-scaling.

Manage model training in custom pipelines but utilize Vertex AI for hyperparameter tuning.

This project is NOT about avoiding existing tools. It’s about learning their purpose and appreciating their value.

Next Steps: Deploy this system and experiment with different datasets to refine your fine-tuned ML models!

Whenever you’re ready, there are 3 ways we can help you:

Perks: Exclusive discounts on our recommended learning resources

(books, live courses, self-paced courses and learning platforms).

The LLM Engineer’s Handbook: Our bestseller book on teaching you an end-to-end framework for building production-ready LLM and RAG applications, from data collection to deployment (get up to 20% off using our discount code).

Free open-source courses: Master production AI with our end-to-end open-source courses, which reflect real-world AI projects and cover everything from system architecture to data collection, training and deployment.

Images

If not otherwise stated, all images are created by the author.

Thanks for contributing to Decoding ML with this fantastic article, Trishala. Love it!