Real-time feature pipelines for RAG

RAG hybrid search with transformers-based sparse vectors. CDC tech stack for event-driven architectures.

This week’s topics:

CDC tech stack for event-driven architectures

Real-time feature pipelines with CDC

RAG hybrid search with transformers-based sparse vectors

CDC tech stack for event-driven architectures

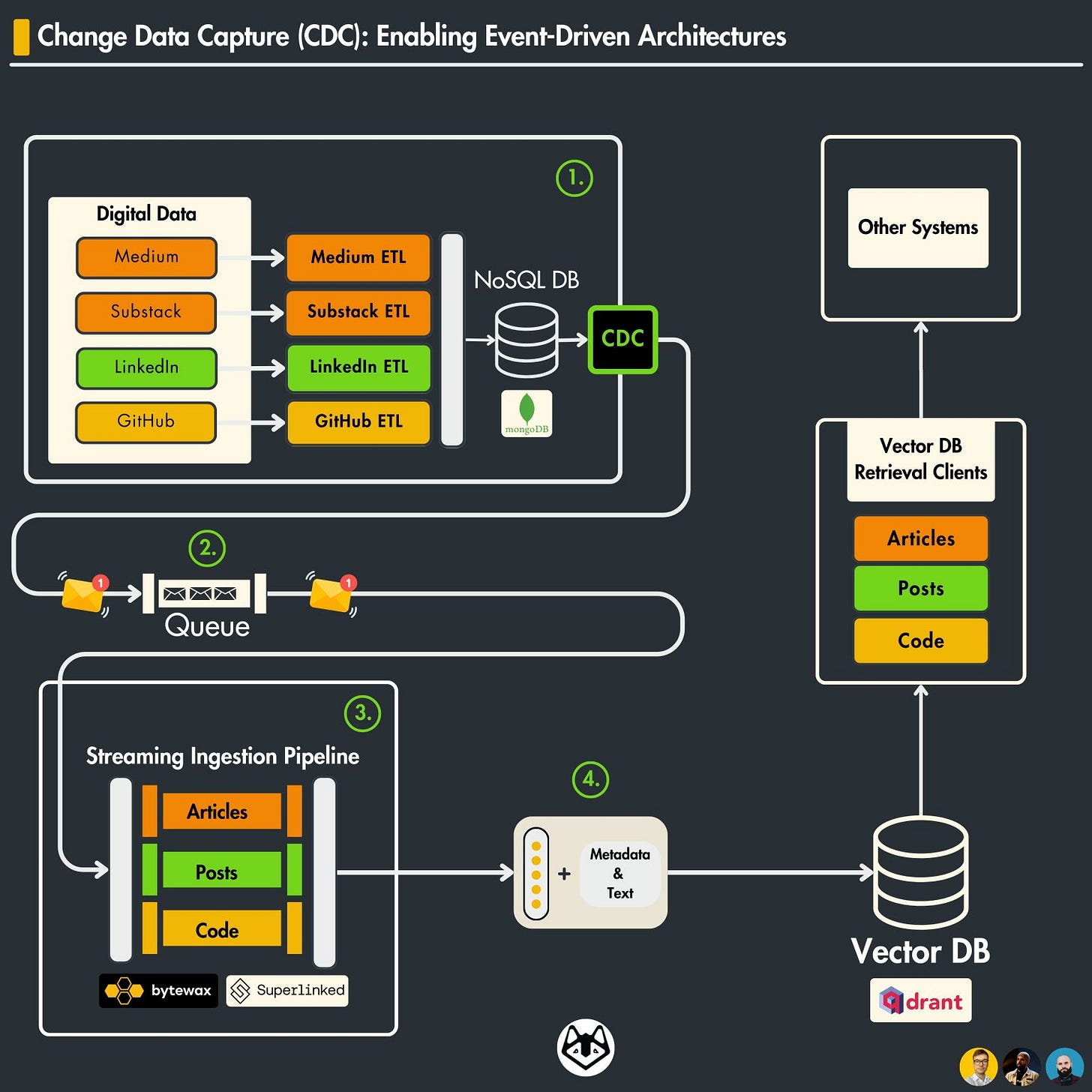

Here is the 𝘁𝗲𝗰𝗵 𝘀𝘁𝗮𝗰𝗸 used to 𝗯𝘂𝗶𝗹𝗱 a 𝗖𝗵𝗮𝗻𝗴𝗲 𝗗𝗮𝘁𝗮 𝗖𝗮𝗽𝘁𝘂𝗿𝗲 (𝗖𝗗𝗖) 𝗰𝗼𝗺𝗽𝗼𝗻𝗲𝗻𝘁 for implementing an 𝗲𝘃𝗲𝗻𝘁-𝗱𝗿𝗶𝘃𝗲𝗻 𝗮𝗿𝗰𝗵𝗶𝘁𝗲𝗰𝘁𝘂𝗿𝗲 in our 𝗟𝗟𝗠 𝗧𝘄𝗶𝗻 𝗰𝗼𝘂𝗿𝘀𝗲

𝗪𝗵𝗮𝘁 𝗶𝘀 𝗖𝗵𝗮𝗻𝗴𝗲 𝗗𝗮𝘁𝗮 𝗖𝗮𝗽𝘁𝘂𝗿𝗲 (𝗖𝗗𝗖)?

The purpose of CDC is to capture insertions, updates, and deletions applied to a database and to make this change data available in a format easily consumable by downstream applications.
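To make the idea concrete, here is a toy, in-memory sketch of log-based capture. It assumes a hypothetical `ToyDatabase` class rather than any real database API. Every insert, update, and delete is appended to an append-only change log, and downstream consumers read that log from an offset instead of re-querying the table. Real databases keep a log like this internally (MongoDB's is the oplog).

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ChangeEvent:
    """One captured change: the operation, the affected key, and the new value (if any)."""
    operation: str            # "insert", "update", or "delete"
    key: str
    value: Optional[dict]     # None for deletes


@dataclass
class ToyDatabase:
    """Hypothetical in-memory table that records every write in an append-only change log."""
    rows: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

    def insert(self, key: str, value: dict) -> None:
        self.rows[key] = value
        self.log.append(ChangeEvent("insert", key, dict(value)))

    def update(self, key: str, value: dict) -> None:
        self.rows[key] = value
        self.log.append(ChangeEvent("update", key, dict(value)))

    def delete(self, key: str) -> None:
        self.rows.pop(key, None)
        self.log.append(ChangeEvent("delete", key, None))

    def read_changes(self, offset: int = 0) -> list:
        """Downstream consumers poll the log starting from their last processed offset."""
        return self.log[offset:]


if __name__ == "__main__":
    db = ToyDatabase()
    db.insert("post-1", {"text": "Hello CDC"})
    db.update("post-1", {"text": "Hello again"})
    db.delete("post-1")
    for event in db.read_changes():
        print(event.operation, event.key, event.value)
```

The consumer never touches `rows` directly. It only sees the stream of changes, which is what makes the pattern cheap for the source system.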

𝗪𝗵𝘆 𝗱𝗼 𝘄𝗲 𝗻𝗲𝗲𝗱 𝗖𝗗𝗖 𝗽𝗮𝘁𝘁𝗲𝗿𝗻?

- Real-time Data Syncing

- Efficient Data Pipelines

- Minimized System Impact

- Event-Driven Architectures

𝗪𝗵𝗮𝘁 𝗱𝗼 𝘄𝗲 𝗻𝗲𝗲𝗱 𝗳𝗼𝗿 𝗮𝗻 𝗲𝗻𝗱-𝘁𝗼-𝗲𝗻𝗱 𝗶𝗺𝗽𝗹𝗲𝗺𝗲𝗻𝘁𝗮𝘁𝗶𝗼𝗻 𝗼𝗳 𝗖𝗗𝗖?

We will take the tech stack used in our LLM Twin course as an example, where...

... we built a feature pipeline to gather cleaned data for fine-tuning and chunked & embedded data for RAG

𝗘𝘃𝗲𝗿𝘆𝘁𝗵𝗶𝗻𝗴 𝘄𝗶𝗹𝗹 𝗯𝗲 𝗱𝗼𝗻𝗲 𝗼𝗻𝗹𝘆 𝗶𝗻 𝗣𝘆𝘁𝗵𝗼𝗻!

𝘏𝘦𝘳𝘦 𝘵𝘩𝘦𝘺 𝘢𝘳𝘦

↓↓↓

1. 𝗧𝗵𝗲 𝘀𝗼𝘂𝗿𝗰𝗲 𝗱𝗮𝘁𝗮𝗯𝗮𝘀𝗲: MongoDB (the approach also works with most other databases, such as MySQL, PostgreSQL, Oracle, etc.)

2. 𝗔 𝘁𝗼𝗼𝗹 𝘁𝗼 𝗺𝗼𝗻𝗶𝘁𝗼𝗿 𝘁𝗵𝗲 𝘁𝗿𝗮𝗻𝘀𝗮𝗰𝘁𝗶𝗼𝗻 𝗹𝗼𝗴: MongoDB Watcher (Debezium is another popular and scalable option)

3. 𝗔 𝗱𝗶𝘀𝘁𝗿𝗶𝗯𝘂𝘁𝗲𝗱 𝗾𝘂𝗲𝘂𝗲: RabbitMQ (another popular option is to use Kafka, but it was overkill in our use case)

4. 𝗔 𝘀𝘁𝗿𝗲𝗮𝗺𝗶𝗻𝗴 𝗲𝗻𝗴𝗶𝗻𝗲: Bytewax (great streaming engine for the Python ecosystem)

5. 𝗔 𝗱𝗲𝘀𝘁𝗶𝗻𝗮𝘁𝗶𝗼𝗻 𝗱𝗮𝘁𝗮𝗯𝗮𝘀𝗲: Qdrant (this works with any other database, but we needed a vector DB to store our data for fine-tuning and RAG)

𝘍𝘰𝘳 𝘦𝘹𝘢𝘮𝘱𝘭𝘦, 𝘩𝘦𝘳𝘦 𝘪𝘴 𝘩𝘰𝘸 𝘢 𝘞𝘙𝘐𝘛𝘌 𝘰𝘱𝘦𝘳𝘢𝘵𝘪𝘰𝘯 𝘸𝘪𝘭𝘭 𝘣𝘦 𝘱𝘳𝘰𝘤𝘦𝘴𝘴𝘦𝘥:

1. Write a post to the MongoDB warehouse

2. A "𝘤𝘳𝘦𝘢𝘵𝘦" (insert) operation is logged in MongoDB's transaction log (the oplog)

3. The MongoDB watcher captures this and emits it to the RabbitMQ queue

4. The Bytewax streaming pipeline reads the event from the queue

5. It cleans, chunks, and embeds the post right away, in real time!

6. The cleaned & embedded version of the post is written to Qdrant

Real-time feature pipelines with CDC

𝗛𝗼𝘄 to 𝗶𝗺𝗽𝗹𝗲𝗺𝗲𝗻𝘁 𝗖𝗗𝗖 to 𝘀𝘆𝗻𝗰 your 𝗱𝗮𝘁𝗮 𝘄𝗮𝗿𝗲𝗵𝗼𝘂𝘀𝗲 and 𝗳𝗲𝗮𝘁𝘂𝗿𝗲 𝘀𝘁𝗼𝗿𝗲 using a RabbitMQ 𝗾𝘂𝗲𝘂𝗲 and a Bytewax 𝘀𝘁𝗿𝗲𝗮𝗺𝗶𝗻𝗴 𝗲𝗻𝗴𝗶𝗻𝗲 ↓

𝗙𝗶𝗿𝘀𝘁, 𝗹𝗲𝘁'𝘀 𝘂𝗻𝗱𝗲𝗿𝘀𝘁𝗮𝗻𝗱 𝘄𝗵𝗲𝗿𝗲 𝘆𝗼𝘂 𝗻𝗲𝗲𝗱 𝘁𝗼 𝗶𝗺𝗽𝗹𝗲𝗺𝗲𝗻𝘁 𝘁𝗵𝗲 𝗖𝗵𝗮𝗻𝗴𝗲 𝗗𝗮𝘁𝗮 𝗖𝗮𝗽𝘁𝘂𝗿𝗲 (𝗖𝗗𝗖) 𝗽𝗮𝘁𝘁𝗲𝗿𝗻:

𝘊𝘋𝘊 𝘪𝘴 𝘶𝘴𝘦𝘥 𝘸𝘩𝘦𝘯 𝘺𝘰𝘶 𝘸𝘢𝘯𝘵 𝘵𝘰 𝘴𝘺𝘯𝘤 2 𝘥𝘢𝘵𝘢𝘣𝘢𝘴𝘦𝘴.

The destination can be a complete replica of the source database (e.g., one for transactional and the other for analytical applications)

...or you can process the data from the source database before loading it to the destination DB (e.g., retrieve various documents and chunk & embed them for RAG).

𝘛𝘩𝘢𝘵'𝘴 𝘸𝘩𝘢𝘵 𝘐 𝘢𝘮 𝘨𝘰𝘪𝘯𝘨 𝘵𝘰 𝘴𝘩𝘰𝘸 𝘺𝘰𝘶:

How to use CDC to sync a MongoDB database with a Qdrant vector DB, so that new documents are processed in real time and are immediately ready for fine-tuning LLMs and RAG.

MongoDB is our data warehouse.

Qdrant is our logical feature store.
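The two sync options described above differ only in what happens to each change event before it reaches the destination. Here is a minimal sketch, assuming change events are plain dicts with hypothetical `operation`, `entry_id`, and `document` keys: the first sink mirrors the source as-is (a replica), while the second transforms each document before loading it (here, a hypothetical word-level chunker standing in for the clean/chunk/embed step).

```python
from typing import Callable, Dict, List


def apply_to_replica(replica: Dict[str, dict], event: dict) -> None:
    """Option 1: keep an exact copy of the source collection."""
    if event["operation"] == "delete":
        replica.pop(event["entry_id"], None)
    else:  # insert or update: overwrite with the latest version of the document
        replica[event["entry_id"]] = dict(event["document"])


def apply_with_transform(
    store: Dict[str, List[str]], event: dict, transform: Callable[[dict], List[str]]
) -> None:
    """Option 2: process the document (e.g., chunk it) before loading it."""
    if event["operation"] == "delete":
        store.pop(event["entry_id"], None)
    else:
        store[event["entry_id"]] = transform(event["document"])


def word_chunks(document: dict, words_per_chunk: int = 3) -> List[str]:
    """Hypothetical chunker: groups of N words."""
    words = document["text"].split()
    return [" ".join(words[i:i + words_per_chunk]) for i in range(0, len(words), words_per_chunk)]


events = [
    {"operation": "insert", "entry_id": "p1", "document": {"text": "sync two databases with CDC"}},
    {"operation": "insert", "entry_id": "p2", "document": {"text": "temporary post"}},
    {"operation": "delete", "entry_id": "p2", "document": None},
]
replica, chunked = {}, {}
for e in events:
    apply_to_replica(replica, e)
    apply_with_transform(chunked, e, word_chunks)
```

Both sinks consume the same event stream, so deletes propagate in either case; only the shape of what gets stored changes.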


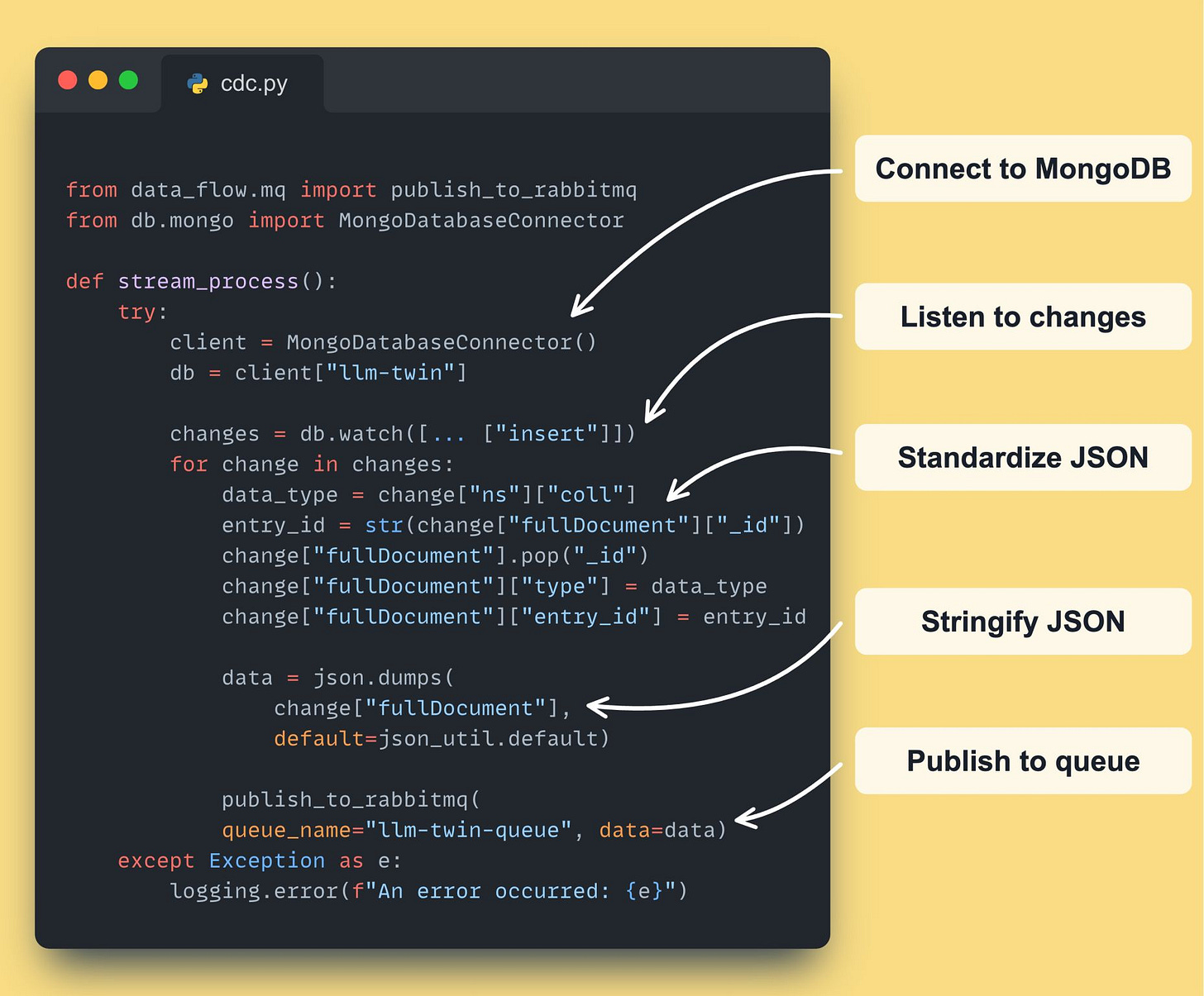

𝗛𝗲𝗿𝗲 𝗶𝘀 𝘁𝗵𝗲 𝗶𝗺𝗽𝗹𝗲𝗺𝗲𝗻𝘁𝗮𝘁𝗶𝗼𝗻 𝗼𝗳 𝘁𝗵𝗲 𝗖𝗗𝗖 𝗽𝗮𝘁𝘁𝗲𝗿𝗻:

1. Use Mongo's 𝘸𝘢𝘵𝘤𝘩() method to listen for data changes (inserts, updates, and deletes)

2. For example, on a CREATE operation, once the document is saved to Mongo, the 𝘸𝘢𝘵𝘤𝘩() method emits a change event: a JSON-like document describing the operation, the affected collection, and the full inserted document.

3. We standardize the JSON into our desired structure.

4. We stringify the JSON and publish it to the RabbitMQ queue
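Steps 1 to 4 can be sketched with `pymongo` and `pika`. This is a minimal sketch, not the course's code, assuming both libraries are installed, MongoDB runs as a replica set (change streams require one), and a RabbitMQ broker listens on localhost. The database name `twin_db`, the queue name `cdc_events`, the helper names, and the standardized fields (`type`, `entry_id`, `operation`) are all hypothetical choices. Only the pure `standardize_change` function runs without servers.

```python
import json


def standardize_change(change: dict) -> str:
    """Steps 3-4: flatten a change-stream event into our structure and stringify it."""
    document = dict(change["fullDocument"])       # copy so the original event is untouched
    entry_id = str(document.pop("_id"))           # ObjectId is not JSON-serializable
    document["type"] = change["ns"]["coll"]       # source collection name, e.g. "posts"
    document["entry_id"] = entry_id
    document["operation"] = change["operationType"]
    return json.dumps(document, default=str)      # default=str also covers datetimes


def stream_changes_to_rabbitmq(mongo_uri: str = "mongodb://localhost:27017",
                               db_name: str = "twin_db",
                               queue_name: str = "cdc_events") -> None:
    """Steps 1-4: watch the database and publish every insert to RabbitMQ."""
    import pika                      # imported lazily so the pure helper above has no deps
    from pymongo import MongoClient

    db = MongoClient(mongo_uri)[db_name]
    connection = pika.BlockingConnection(pika.ConnectionParameters("localhost"))
    channel = connection.channel()
    channel.queue_declare(queue=queue_name, durable=True)

    # Only react to inserts; update events would need full_document="updateLookup".
    pipeline = [{"$match": {"operationType": "insert"}}]
    try:
        with db.watch(pipeline) as stream:        # blocks, yielding one event per change
            for change in stream:
                channel.basic_publish(exchange="", routing_key=queue_name,
                                      body=standardize_change(change))
    finally:
        connection.close()
```

Calling `stream_changes_to_rabbitmq()` blocks and publishes one JSON string per inserted document. Keeping the standardization in a separate pure function makes it easy to test without a running database or broker.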

𝗛𝗼𝘄 𝗱𝗼 𝘄𝗲 𝘀𝗰𝗮𝗹𝗲?

→ You can use Debezium instead of Mongo's 𝘸𝘢𝘵𝘤𝘩() method for scaling up the system, but the idea remains the same.

→ You can swap RabbitMQ for Kafka, but RabbitMQ can get you far.

𝗡𝗼𝘄, 𝘄𝗵𝗮𝘁 𝗵𝗮𝗽𝗽𝗲𝗻𝘀 𝗼𝗻 𝘁𝗵𝗲 𝗼𝘁𝗵𝗲𝗿 𝘀𝗶𝗱𝗲 𝗼𝗳 𝘁𝗵𝗲 𝗾𝘂𝗲𝘂𝗲?

You have a Bytewax streaming pipeline, written 100% in Python, that:

5. Listens in real-time to new messages from the RabbitMQ queue

6. It cleans, chunks, and embeds the events on the fly

7. It loads the data to Qdrant for LLM fine-tuning & RAG
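Steps 5 to 7 map naturally onto a Bytewax dataflow. This is a minimal sketch, assuming Bytewax 0.18 or later (the `bytewax.operators` API). To stay self-contained, a `TestingSource` replays JSON messages in place of a RabbitMQ input connector, `StdOutSink` prints instead of writing to Qdrant, and the `clean`, `chunk`, and `embed` helpers are hypothetical toys (the 2-number "vector" is not a real embedding). The helpers run without Bytewax; the dataflow is only built when `build_flow()` is called.

```python
import json
import re


def clean(message: str) -> dict:
    """Step 6a: parse the queue message and normalize whitespace in its content."""
    event = json.loads(message)
    event["content"] = re.sub(r"\s+", " ", event["content"]).strip()
    return event


def chunk(event: dict, words_per_chunk: int = 4) -> list:
    """Step 6b: split one cleaned post into several (chunk_id, chunk_text) items."""
    words = event["content"].split()
    return [
        (f"{event['entry_id']}-{i // words_per_chunk}", " ".join(words[i:i + words_per_chunk]))
        for i in range(0, len(words), words_per_chunk)
    ]


def embed(item: tuple) -> dict:
    """Step 6c: attach a vector. Toy stand-in (char count, word count) for a real model."""
    chunk_id, text = item
    return {"id": chunk_id, "text": text, "vector": [float(len(text)), float(len(text.split()))]}


def build_flow(messages: list):
    """Wire the steps into a Bytewax dataflow (Bytewax >= 0.18 operator API)."""
    import bytewax.operators as op
    from bytewax.connectors.stdio import StdOutSink
    from bytewax.dataflow import Dataflow
    from bytewax.testing import TestingSource

    flow = Dataflow("cdc_feature_pipeline")
    stream = op.input("rabbitmq_in", flow, TestingSource(messages))  # stand-in for RabbitMQ
    cleaned = op.map("clean", stream, clean)
    chunks = op.flat_map("chunk", cleaned, chunk)                    # one post -> many chunks
    embedded = op.map("embed", chunks, embed)
    op.output("qdrant_out", embedded, StdOutSink())                  # stand-in for a Qdrant sink
    return flow
```

With Bytewax installed, `run_main(build_flow([...]))` (from `bytewax.testing`) runs the flow locally. `flat_map` is the natural operator for chunking because one input event fans out into several output items.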

Do you 𝘄𝗮𝗻𝘁 to check out the 𝗳𝘂𝗹𝗹 𝗰𝗼𝗱𝗲?

...or even an 𝗲𝗻𝘁𝗶𝗿𝗲 𝗮𝗿𝘁𝗶𝗰𝗹𝗲 about 𝗖𝗗𝗖?

The CDC component is part of the 𝗟𝗟𝗠 𝗧𝘄𝗶𝗻 FREE 𝗰𝗼𝘂𝗿𝘀𝗲, made by Decoding ML.

↓↓↓

🔗 𝘓𝘦𝘴𝘴𝘰𝘯 3: 𝘊𝘩𝘢𝘯𝘨𝘦 𝘋𝘢𝘵𝘢 𝘊𝘢𝘱𝘵𝘶𝘳𝘦: 𝘌𝘯𝘢𝘣𝘭𝘪𝘯𝘨 𝘌𝘷𝘦𝘯𝘵-𝘋𝘳𝘪𝘷𝘦𝘯 𝘈𝘳𝘤𝘩𝘪𝘵𝘦𝘤𝘵𝘶𝘳𝘦𝘴

🔗 𝘎𝘪𝘵𝘏𝘶𝘣

RAG hybrid search with transformers-based sparse vectors

𝗛𝘆𝗯𝗿𝗶𝗱 𝘀𝗲𝗮𝗿𝗰𝗵 is standard in 𝗮𝗱𝘃𝗮𝗻𝗰𝗲𝗱 𝗥𝗔𝗚 𝘀𝘆𝘀𝘁𝗲𝗺𝘀. The 𝘁𝗿𝗶𝗰𝗸 is to 𝗰𝗼𝗺𝗽𝘂𝘁𝗲 the suitable 𝘀𝗽𝗮𝗿𝘀𝗲 𝘃𝗲𝗰𝘁𝗼𝗿𝘀 for it. Here is an 𝗮𝗿𝘁𝗶𝗰𝗹𝗲 that shows 𝗵𝗼𝘄 to use 𝗦𝗣𝗟𝗔𝗗𝗘 to 𝗰𝗼𝗺𝗽𝘂𝘁𝗲 𝘀𝗽𝗮𝗿𝘀𝗲 𝘃𝗲𝗰𝘁𝗼𝗿𝘀 using 𝘁𝗿𝗮𝗻𝘀𝗳𝗼𝗿𝗺𝗲𝗿𝘀 and integrate them into a 𝗵𝘆𝗯𝗿𝗶𝗱 𝘀𝗲𝗮𝗿𝗰𝗵 𝗮𝗹𝗴𝗼𝗿𝗶𝘁𝗵𝗺 using Qdrant.
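Hybrid search runs a sparse (keyword) search and a dense (semantic) search, then fuses the two ranked lists. The post doesn't say which fusion method the article uses. As one common option, here is a minimal sketch of Reciprocal Rank Fusion (RRF), where each document scores the sum of 1 / (k + rank) over every list it appears in, with the conventional k = 60. The document IDs are made up.

```python
from collections import defaultdict
from typing import Dict, List


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse several ranked lists of document IDs (best first) into one ranking."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc ID for a deterministic order.
    return sorted(scores, key=lambda d: (-scores[d], d))


sparse_hits = ["d1", "d2", "d3"]   # e.g., results from a sparse (SPLADE/BM25-style) search
dense_hits = ["d2", "d4", "d1"]    # e.g., results from a dense embedding search
fused = reciprocal_rank_fusion([sparse_hits, dense_hits])
```

RRF only uses ranks, so it sidesteps the problem that sparse and dense similarity scores live on different scales. Documents found by both searches (`d2`, `d1`) rise to the top.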

𝙒𝙝𝙮 𝙗𝙤𝙩𝙝𝙚𝙧 𝙬𝙞𝙩𝙝 𝙨𝙥𝙖𝙧𝙨𝙚 𝙫𝙚𝙘𝙩𝙤𝙧𝙨 𝙬𝙝𝙚𝙣 𝙬𝙚 𝙝𝙖𝙫𝙚 𝙙𝙚𝙣𝙨𝙚 𝙫𝙚𝙘𝙩𝙤𝙧𝙨 (𝙚𝙢𝙗𝙚𝙙𝙙𝙞𝙣𝙜𝙨)?

Sparse vectors represent data by highlighting only the most relevant features (like keywords), significantly reducing memory usage compared to dense vectors.

Also, sparse vectors excel at matching specific keywords, which is why they pair so well with dense vectors, which capture semantic similarity but not exact words.
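A sparse vector stores only its non-zero entries as (index, value) pairs. Memory therefore scales with the number of active terms rather than with the vocabulary size, and a dot product only touches indices that both vectors share. Here is a minimal sketch, assuming a 30,522-term vocabulary (the size of BERT's WordPiece vocabulary) and made-up term IDs and weights.

```python
from typing import Dict, List

VOCAB_SIZE = 30_522  # e.g., BERT's WordPiece vocabulary; a dense copy needs this many floats


def sparse_dot(a: Dict[int, float], b: Dict[int, float]) -> float:
    """Dot product over the indices both sparse vectors have in common."""
    if len(a) > len(b):
        a, b = b, a  # iterate over the smaller vector
    return sum(weight * b[idx] for idx, weight in a.items() if idx in b)


def to_dense(v: Dict[int, float], size: int = VOCAB_SIZE) -> List[float]:
    """Expand a sparse vector to a full-length list (exactly what sparse storage avoids)."""
    dense = [0.0] * size
    for idx, weight in v.items():
        dense[idx] = weight
    return dense


query = {2054: 1.2, 7592: 0.8}               # hypothetical term IDs -> weights
doc = {2054: 0.5, 3000: 0.3, 7592: 1.0}
score = sparse_dot(query, doc)               # only terms 2054 and 7592 contribute
```

Here `doc` needs 3 stored entries instead of 30,522, and a document scores above zero only if it shares at least one weighted term with the query, which is why sparse vectors are good at exact keyword matching.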

𝗧𝗵𝗲 𝗮𝗿𝘁𝗶𝗰𝗹𝗲 𝗵𝗶𝗴𝗵𝗹𝗶𝗴𝗵𝘁𝘀:

- 𝘚𝘱𝘢𝘳𝘴𝘦 𝘷𝘴. 𝘥𝘦𝘯𝘴𝘦 𝘷𝘦𝘤𝘵𝘰𝘳𝘴

- 𝘏𝘰𝘸 𝘚𝘗𝘓𝘈𝘋𝘌 𝘸𝘰𝘳𝘬𝘴: SPLADE computes sparse vectors with a transformer architecture, which lets it outperform traditional methods such as BM25.

- 𝘞𝘩𝘺 𝘚𝘗𝘓𝘈𝘋𝘌 𝘸𝘰𝘳𝘬𝘴: It expands terms based on context rather than just frequency, offering a nuanced understanding of content relevancy.

- 𝘏𝘰𝘸 𝘵𝘰 𝘪𝘮𝘱𝘭𝘦𝘮𝘦𝘯𝘵 𝘩𝘺𝘣𝘳𝘪𝘥 𝘴𝘦𝘢𝘳𝘤𝘩 𝘶𝘴𝘪𝘯𝘨 𝘚𝘗𝘓𝘈𝘋𝘌 with Qdrant: step-by-step code
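The core of SPLADE's term weighting fits in one line. A masked-language-model head produces a logit for every input token i and every vocabulary term j, and each term's weight is w_j = max over tokens i of log(1 + ReLU(logit_ij)). This is the max-pooling variant introduced with SPLADE v2; the original SPLADE summed over tokens. Every term with only non-positive logits ends up at exactly zero, which is what makes the output sparse. Here is a minimal sketch of that pooling step on hand-written logits, assuming the transformer forward pass has already run (in practice, for example, with a Hugging Face masked-LM model).

```python
import math
from typing import Dict, List


def splade_pool(logits: List[List[float]], attention_mask: List[int]) -> Dict[int, float]:
    """Turn per-token MLM logits (tokens x vocab) into one sparse vector {term_id: weight}.

    weight_j = max over real (unmasked) tokens i of log(1 + ReLU(logits[i][j]))
    """
    vocab_size = len(logits[0])
    weights = [0.0] * vocab_size
    for token_logits, keep in zip(logits, attention_mask):
        if not keep:  # skip padding tokens
            continue
        for j, logit in enumerate(token_logits):
            weights[j] = max(weights[j], math.log1p(max(logit, 0.0)))
    # Keep only non-zero entries: ReLU zeroes every term whose logits are all <= 0.
    return {j: w for j, w in enumerate(weights) if w > 0.0}


logits = [
    [2.0, -1.0, 0.0, -3.0, -0.5],   # token 1 (toy 5-term vocabulary)
    [-1.0, 4.0, -2.0, -1.0, -1.0],  # token 2
    [9.0, 9.0, 9.0, 9.0, 9.0],      # padding token: ignored via the attention mask
]
sparse_vector = splade_pool(logits, attention_mask=[1, 1, 0])
```

The log(1 + ...) saturation keeps a few very confident terms from dominating, and because the MLM head can assign weight to vocabulary terms that never appear in the text, SPLADE performs the context-based term expansion described above.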

𝗛𝗲𝗿𝗲 𝗶𝘀 𝘁𝗵𝗲 𝗮𝗿𝘁𝗶𝗰𝗹𝗲

↓↓↓

🔗 𝘚𝘱𝘢𝘳𝘴𝘦 𝘝𝘦𝘤𝘵𝘰𝘳𝘴 𝘪𝘯 𝘘𝘥𝘳𝘢𝘯𝘵: 𝘗𝘶𝘳𝘦 𝘝𝘦𝘤𝘵𝘰𝘳-𝘣𝘢𝘴𝘦𝘥 𝘏𝘺𝘣𝘳𝘪𝘥 𝘚𝘦𝘢𝘳𝘤𝘩

Images

Unless otherwise stated, all images were created by the author.