Tame feature engineering pipelines with FaC

Is our fine-tuned LLM performing well? Stop struggling to integrate AI into your daily workflows

This week’s topics:

Tame feature engineering pipelines with Features as Code (FaC)

Stop struggling to integrate AI into your daily workflows

How do we know that our fine-tuned LLM is performing well?

Features as Code: The modern way of structuring your features using MLOps

Defining and computing features for your ML model can quickly become a mess.

Let’s imagine a standard scenario we’ve all encountered, especially with models that consume structured data or multiple data sources, such as classic ML models (e.g., decision trees) or deep neural networks (e.g., two-tower networks for recommender systems, multi-modal LLMs):

You start loading your raw data into multiple DataFrames (using Pandas, Polars, or Spark).

You do some cleaning steps.

You start merging them.

You start transforming your data into features.

Before you know it, you have 100+ spaghetti code functions that are hard to track, manage and debug.

Of course, if you are a master of your craft, this doesn’t apply to you. But let’s face it: most of us are not software engineers who have mastered the art of writing clean code. Thus, most of us will reach that point when starting a new project.

Now, imagine that upper management tells you they must audit all their ML models. Thus, you have to compute the lineage of each feature your model was trained on. For that, you have to track the origin of each data source, together with its versions, configurations and code.

This is a hard problem to solve unless good MLOps practices were in place from the beginning of the project.

In my 4+ years of working with MLOps, versioning, lineage and feature tracking have always turned into a complex, messy problem when not considered carefully.

But what if there is a new methodology for writing features that brings structure into your code base from day 0, avoiding the pitfalls mentioned above?

If there weren’t, I wouldn’t be writing this to you.

The strategy is called “Features as Code” (inspired by Infrastructure as Code tools such as Terraform or Pulumi).

The Features as Code (FaC) paradigm was introduced by Tecton, one of the biggest feature platforms. They applied this strategy at scale at companies such as Coinbase, Square and Atlassian, and it worked like a charm!

How does it work?

Conceptually, the pattern is intuitive. It defines each feature (or feature set) as the result of a raw data source and a function. The raw data is the “fuel,” while the function describes what the features look like.
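To make the idea concrete before looking at any specific platform, here is a minimal, library-free sketch in plain Python. `DataSource`, `FeatureDefinition` and `clicks_per_view` are hypothetical names used only for illustration; they are not part of Tecton.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, float]


@dataclass
class DataSource:
    """Hypothetical stand-in for a raw data source (the "fuel")."""
    name: str
    rows: List[Row]


@dataclass
class FeatureDefinition:
    """A feature = a raw data source + a function that transforms it."""
    name: str
    source: DataSource
    transform: Callable[[Row], float]

    def compute(self) -> List[float]:
        # Apply the transformation to every raw row of the source.
        return [self.transform(row) for row in self.source.rows]


def clicks_per_view(row: Row) -> float:
    # Guard against division by zero for items that were never viewed.
    return row["clicks"] / max(row["views"], 1)


raw_interactions = DataSource(
    name="user_item_interactions",
    rows=[{"clicks": 3, "views": 10}, {"clicks": 0, "views": 0}],
)
ctr = FeatureDefinition("clicks_per_view", raw_interactions, clicks_per_view)
print(ctr.compute())  # [0.3, 0.0]
```

Because the source and the function are explicit fields, every feature carries its own origin with it, which is what makes lineage tracking tractable later on.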

The trick lies in how Tecton manages these functions and data sources. Let’s better understand this with an example.

It all starts by defining one or multiple data sources. For example, let’s consider we have an e-commerce shop with users, items and interactions between them:

    from tecton import StreamSource, BatchSource

    # Stream source backed by a batch source for historical data.
    interactions = StreamSource(
        name="user_item_interactions_stream",
        stream_config=...,
        batch_config=...,
    )

    users = BatchSource(
        name="users",
        batch_config=...,
    )

    items = BatchSource(
        name="items",
        batch_config=...,
    )

Let’s assume we want to train a two-tower network to compute user and item embeddings within the same vector space to build a recommender system. For that, we need interaction, user and item features from all three sources above.

Let’s see how we can implement that using the FaC pattern:

    from datetime import timedelta

    from tecton import Aggregate, batch_feature_view, stream_feature_view
    # Assumed import path for Field and Int64 (recent Tecton SDK versions).
    from tecton.types import Field, Int64


    @stream_feature_view(
        source=interactions,
        mode="pandas",
        features=[
            # Sum of clicks over a rolling 24-hour window.
            Aggregate(
                input_column=Field("clicks", Int64),
                function="sum",
                time_window=timedelta(hours=24),
            ),
        ],
        batch_schedule=timedelta(days=1),
        # … other arguments elided
    )
    def interaction_featuring_engineering(interactions_stream):
        # … feature engineering
        return interactions_stream[["user_id", "item_id", "clicks_last_24h", ...]]


    @batch_feature_view(
        source=users,
        mode="pandas",
        batch_schedule=timedelta(days=1),
        # … other arguments elided
    )
    def users_featuring_engineering(users):
        # … feature engineering
        return users[["user_id", "feature_1", ...]]


    @batch_feature_view(
        source=items,
        mode="pandas",
        batch_schedule=timedelta(days=1),
        # … other arguments elided
    )
    def items_featuring_engineering(items):
        # … feature engineering
        return items[["item_id", "feature_1", ...]]

With just a few lines of code, we clearly defined our features and hooked them to three different data sources.

Now, ask yourself: “How can we track the version and lineage of a feature without actually versioning the data?”

By following the FaC pattern, a feature is defined by its raw data source plus the function that transforms the raw data source into features.

Thus, whenever we change the data source (ingest new data or change the time horizon) or change the function in any way, we can safely assume we have a new version of our features, which will impact the training and serving pipelines.

Now, you can easily track what features you used for training and inference.
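One way to picture this is to fingerprint a feature from its source configuration plus its transformation code. The sketch below is an illustration in plain Python, not how Tecton implements versioning; `feature_version` and the config keys are hypothetical.

```python
import hashlib
import json


def feature_version(source_config: dict, transform) -> str:
    """Fingerprint a feature from its source config and its transformation."""
    code = transform.__code__
    payload = json.dumps(source_config, sort_keys=True).encode()
    # Bytecode, constants and referenced names capture the function's logic.
    # Assumption: good enough for a demo; bytecode differs across Python versions.
    payload += code.co_code
    payload += repr(code.co_consts).encode()
    payload += repr(code.co_names).encode()
    return hashlib.sha256(payload).hexdigest()[:12]


def clicks_per_view(row):
    return row["clicks"] / max(row["views"], 1)


def clicks_per_view_changed(row):
    return row["clicks"] / max(row["views"], 10)


source = {"name": "user_item_interactions", "time_horizon_days": 30}
longer_horizon = {**source, "time_horizon_days": 90}

v1 = feature_version(source, clicks_per_view)
print(v1 == feature_version(source, clicks_per_view))          # True: nothing changed
print(v1 == feature_version(longer_horizon, clicks_per_view))  # False: source changed
print(v1 == feature_version(source, clicks_per_view_changed))  # False: function changed
```

Storing such a fingerprint next to each trained model would be one simple way to record which feature version it was trained on, without versioning the data itself.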

Once your data sources and feature functions are set up, you can run `tecton apply`. This command registers your definitions with Tecton, which then computes and stores all the features in the offline store for training (ensuring time-consistent training data) and in the online store for inference, where fresh feature vectors are served with very low latency. This process is known as "materialization."
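To illustrate the difference between the two stores, here is a toy, in-memory sketch. It is not Tecton's implementation: `offline_store`, `online_store`, `materialize` and `value_as_of` are hypothetical names, and real stores are databases rather than Python objects.

```python
from datetime import datetime
from typing import Dict, List, Tuple

# Offline store: the full, timestamped history (used to build training sets).
offline_store: List[Tuple[str, datetime, float]] = []
# Online store: only the latest value per key (used for low-latency lookups).
online_store: Dict[str, float] = {}


def materialize(rows: List[Tuple[str, datetime, float]]) -> None:
    """Write computed feature rows (key, timestamp, value) to both stores."""
    for key, ts, value in sorted(rows, key=lambda r: r[1]):
        offline_store.append((key, ts, value))  # keep every version
        online_store[key] = value               # keep only the freshest


def value_as_of(key: str, ts: datetime) -> float:
    """Point-in-time lookup: latest value known at `ts`, never a future one."""
    past = [(t, v) for k, t, v in offline_store if k == key and t <= ts]
    return max(past)[1]  # raises ValueError if nothing was known yet


materialize([
    ("user_1", datetime(2024, 1, 1), 3.0),
    ("user_1", datetime(2024, 1, 2), 7.0),
])
print(online_store["user_1"])                           # 7.0
print(value_as_of("user_1", datetime(2024, 1, 1, 12)))  # 3.0
```

The point-in-time lookup is what "time-consistent" means here: a training example dated January 1 only sees the value that existed on January 1, while inference always reads the freshest value.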

This is similar to how Terraform works: after you run `terraform apply`, all your defined infrastructure is deployed (Pulumi’s equivalent is `pulumi up`).

Ok… this is cool, but…

What are the core advantages of the “Features as Code” approach?

Following the Features as Code methodology brings in modularity, allowing us to track each feature's lineage and version easily. It also makes the code easy to follow, extend and debug.

As it treats each feature as a logical unit, you can easily combine them into more complex features.

You might think that following this particular pattern makes the code rigid, but some rigidity in how you structure code is healthy: it standardizes how you and your team write code, resulting in a clean code base. Whether you use “Features as Code” or another pattern, following standard coding guidelines is good practice in any mature project.

To conclude, the FaC paradigm is a solid architectural pattern that makes code easier to write and maintain. We hope to see it in other modern feature platforms or Python packages like Pandas and Polars.

To play around with some real code that uses the “Features as Code” pattern, you can check out Tecton’s free series on building a real-time fraud detection model:

Stop struggling to integrate AI into your daily workflows (Affiliate)

Even as an engineer who’s worked with GenAI for years…

I still struggle with fully integrating AI into my daily workflows.

But it’s not the big, complex models or pipelines that are the issue.

It’s the small, repetitive tasks that slowly drain my time and focus.

I'm talking about:

Managing research notes across different tools and systems.

Writing code snippets and documentation that need to stay in sync.

Building prototypes that leverage GenAI effectively.

Setting up automation that goes beyond basic AI assistance.

AI can handle many of these tasks.

But the tools are fragmented, which adds friction to your daily workflows.

Hence, integrating everything into a system that works smoothly and consistently is a different beast to conquer.

And this isn’t just about boosting productivity.

It’s about engineering a better way to work.

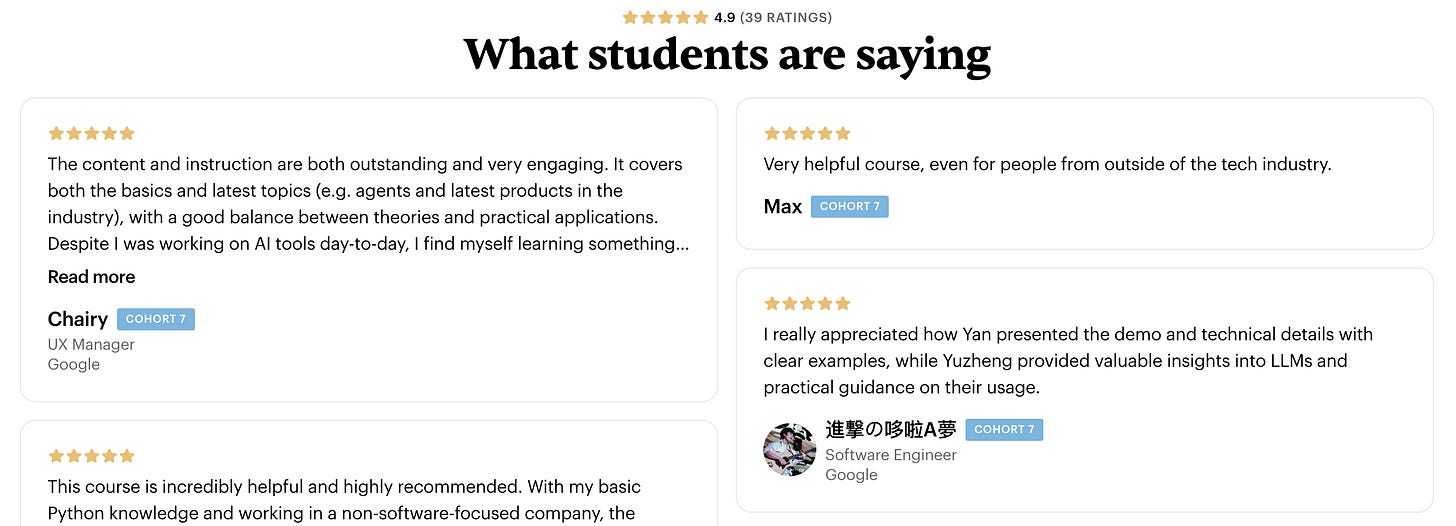

This is why I’m excited about the From Users to Builders course.

It's led by Yuzheng (课代表立正) Sun, a Staff Data Scientist and Staff Applied Scientist with deep industry expertise.

This course is engineered for technical professionals who want to truly build with AI.

What makes it stand out?

Hands-on projects (8 total) that give you practical experience in GenAI engineering.

Research-backed methods - learn not just how things work, but why.

A mindset shift that will prepare you for long-term success in AI, not just short-term solutions.

If you’re a data scientist, engineer, or developer looking to take your GenAI skills to the next level, this course gives you the framework to get there.

You’ll learn how to build AI-powered systems that work for you, not just on the surface but at a deeper, more sustainable level.

We're not chasing the latest AI trend in 2025.

We're creating lasting value in your workflows and projects.

Plus, there are some fantastic perks:

$100 discount with code: PAUL

100% company reimbursement eligibility

If you’ve been stuck navigating the GenAI space or feel like you’re not using AI to its full potential...

This course will give you the roadmap to cut through the noise and get real, tangible results.

Learn more and secure your spot in the next cohort.

How do we know that our fine-tuned LLM is performing well?

(This question is at the heart of optimizing any AI system)

Without measuring the quality of your model's outputs, it's hard to gauge whether you're on the right track.

That’s where Opik (powered by Comet) comes in...

Opik lets you implement a robust evaluation pipeline to assess your fine-tuned LLM and RAG system across key metrics:

Heuristic Metrics (e.g., Levenshtein, perplexity) to compare predictions with ground truth.

LLM-as-a-judge metrics to test for issues like hallucination and moderation, and to score context recall and precision.

Custom business metrics that fit your unique use case, such as writing style and tone consistency.
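As an example of a heuristic metric, here is a hand-rolled Levenshtein similarity in plain Python. It is an illustration of the idea, not Opik's implementation; the function names are hypothetical.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions and substitutions."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            current.append(min(
                previous[j] + 1,                       # deletion
                current[j - 1] + 1,                    # insertion
                previous[j - 1] + (char_a != char_b),  # substitution
            ))
        previous = current
    return previous[-1]


def levenshtein_similarity(prediction: str, reference: str) -> float:
    """Normalize the distance to [0, 1], where 1.0 means an exact match."""
    longest = max(len(prediction), len(reference))
    if longest == 0:
        return 1.0
    return 1.0 - levenshtein(prediction, reference) / longest


print(levenshtein("kitten", "sitting"))          # 3
print(levenshtein_similarity("Paris", "Paris"))  # 1.0
```

Metrics like this are cheap and deterministic, but they only measure surface overlap with the ground truth, which is why they are usually paired with LLM-based judges.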

When using Opik, the first step is to create an evaluation dataset using artifacts stored in Comet.

Next, you can apply scoring metrics to evaluate the LLM.

These metrics help you evaluate critical aspects, such as how well the LLM:

Generates relevant answers to the input

Generates safe responses

Follows custom business needs, such as maintaining a suitable writing style for specific content types

The secret sauce, however, is the LLM-as-a-judge metrics.

Opik comes with well-crafted and battle-tested evaluation prompts.

For example, the Moderation metric examines if the output violates content policies like violence or hate speech, giving a safety score between 0 and 1.
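The general shape of such an LLM-as-a-judge metric can be sketched as follows. This is a simplified illustration under stated assumptions, not Opik's code: the prompt, `moderation_score` and `fake_judge` are hypothetical, and the sketch assumes 0 means no violation.

```python
import re
from typing import Callable

# Hypothetical prompt for illustration; Opik ships its own evaluation prompts.
JUDGE_PROMPT = (
    "Rate how strongly the text below violates content policies "
    "(violence, hate speech). Answer with one number between 0 and 1.\n\n"
    "Text: {output}"
)


def moderation_score(output: str, judge: Callable[[str], str]) -> float:
    """Ask a judge model for a score and clamp it to the [0, 1] range."""
    reply = judge(JUDGE_PROMPT.format(output=output))
    match = re.search(r"\d+(?:\.\d+)?", reply)
    if match is None:
        raise ValueError(f"Judge reply contains no number: {reply!r}")
    return min(1.0, max(0.0, float(match.group())))


def fake_judge(prompt: str) -> str:
    # Stand-in for a real LLM call so the example runs offline.
    return "Score: 0.0" if "weather" in prompt else "Score: 0.9"


print(moderation_score("The weather is nice today.", fake_judge))  # 0.0
```

In practice, `judge` would wrap a call to a hosted LLM, and most of the metric's quality comes from how carefully the evaluation prompt is written.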

Opik allows users to:

Compare multiple experiments side by side

Zoom in on individual samples

Analyze specific outputs

This level of granularity is essential when debugging generative models, where traditional metrics may not tell the whole story.

To see an end-to-end implementation of evaluating LLM & RAG applications using Opik (by Comet) on:

Hallucination

Moderation

Custom business metrics

Context recall and precision

📌 Read Lesson 8 from the LLM Twin course:

Whenever you’re ready, there are 3 ways we can help you:

Perks: Exclusive discounts on our recommended learning resources

(live courses, self-paced courses, learning platforms and books).

The LLM Engineer’s Handbook: Our bestselling book on mastering the art of engineering Large Language Model (LLM) systems from concept to production.

Free open-source courses: Master production AI with our end-to-end open-source courses, which reflect real-world AI projects, covering everything from system architecture to data collection, training and deployment.

Images

If not otherwise stated, all images are created by the author.