This is a guest post by Hamel Husain, a machine learning engineer with over a decade of experience building and evaluating AI systems at companies like GitHub and Airbnb. He co-teaches a course on AI Evals with Shreya Shankar.

In my last post, we dismantled the mirage of generic, off-the-shelf metrics. We established that building a great AI product requires moving beyond vague scores for "helpfulness" and instead focusing on custom evaluations born from a deep analysis of your system's specific failures.

Today, we're tackling a related and equally seductive trap: the 1-to-5 star rating.

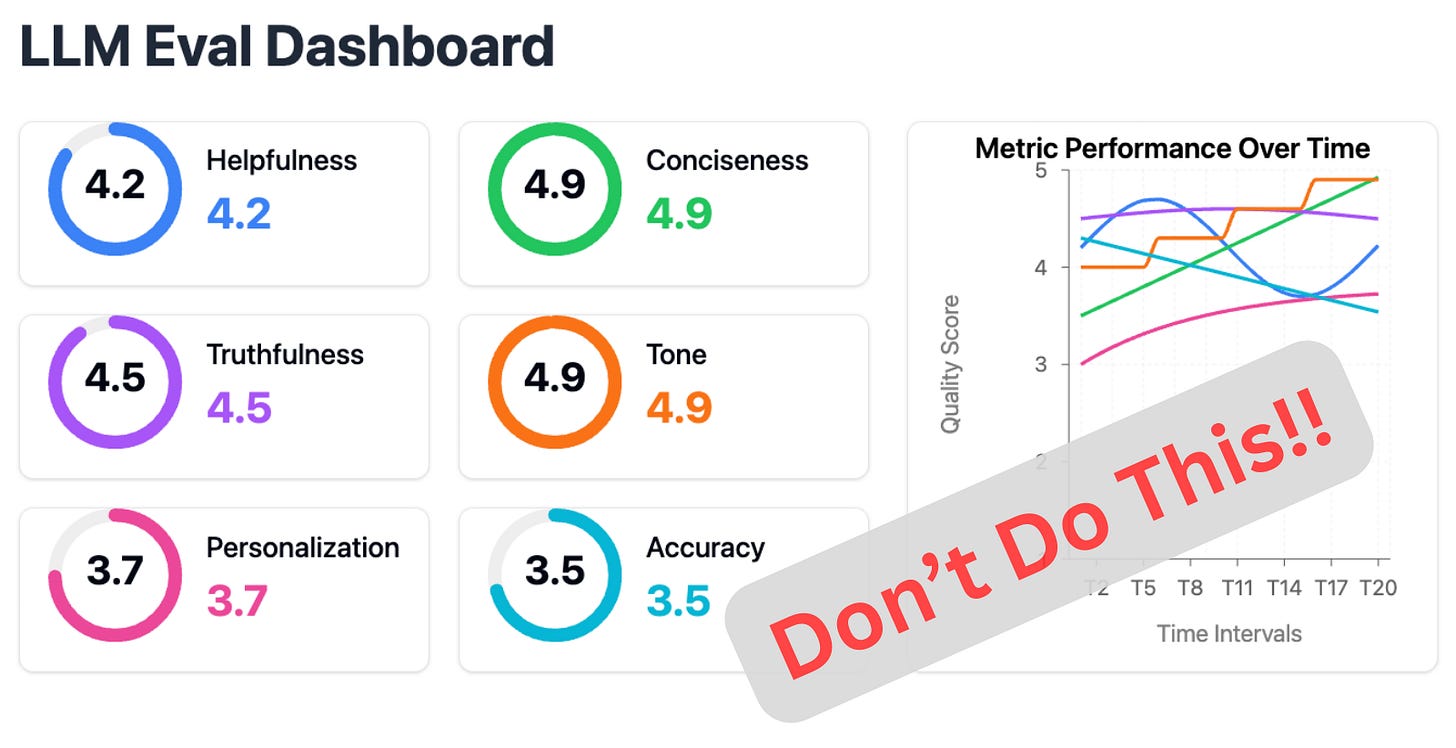

It’s a scene I’ve witnessed at countless companies: a dashboard filled with colorful charts, each tracking a different abstract quality—"Conciseness," "Truthfulness," "Personalization"—all rated on a 1-to-5 scale. It looks impressive. It looks scientific. But when you ask what a "3.7" in "Personalization" actually means, or what the team should do to improve it, you're usually met with blank stares. That’s exactly what happened when I encountered this dashboard on one of my consulting engagements (a faithful anonymized reproduction):

Engineers and product managers often believe that these Likert scales provide more information than a simple pass/fail judgment. The thinking goes, "A 1-5 rating gives us more nuance. It lets us track gradual improvements. A '3' is better than a '2,' and that's progress, right?"

On the surface, this makes sense. But in practice, relying on Likert scales for your primary evaluation metric is a critical mistake. It introduces ambiguity, noise, and inconsistency precisely where you need clarity. For building reliable and trustworthy AI, binary (pass/fail) evaluations are almost always the superior choice.

The Seductive Trap of Subjective Scales

The core problem with a 1-5 scale is that the distance between the numbers is a mystery. What is the actual difference between a '3' and a '4' for "helpfulness"? The answer depends entirely on who you ask and what day it is. This subjectivity creates several downstream problems that sabotage your evaluation process.

First, it leads to inconsistent labeling. When you ask multiple annotators to use a Likert scale, their interpretations will inevitably diverge. One person's '4' is another's '3', making it incredibly difficult to achieve high inter-annotator agreement. You end up spending more time debating the meaning of the rubric than evaluating the system. A low Cohen's Kappa score is often a sign that your rubric is too fuzzy, and Likert scales are a primary source of that fuzziness.
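Agreement between annotators is cheap to measure before you argue about the rubric. Here is a minimal sketch of Cohen's Kappa for two annotators in plain Python. The function name and the sample labels are illustrative assumptions, not data from a real project.

```python
from collections import Counter


def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: fraction of items where both annotators match.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability that both pick the same label independently.
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    expected = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both annotators used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical pass/fail labels from two annotators on the same eight traces.
annotator_1 = ["pass", "pass", "fail", "pass", "fail", "fail", "pass", "pass"]
annotator_2 = ["pass", "pass", "fail", "pass", "fail", "pass", "pass", "pass"]
kappa = cohens_kappa(annotator_1, annotator_2)
print(f"kappa = {kappa:.2f}")
```

The same function works on 1-to-5 ratings, which makes it easy to compare agreement on a Likert rubric against agreement on a binary one for the same traces.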

Second, it masks real issues with statistical noise. Every rating carries the annotator's personal reading of the scale, so detecting a meaningful improvement becomes much harder. Once that rater-to-rater variance is accounted for, being statistically confident that your system has improved from an average score of 3.2 to 3.4 can take far more labels than the size of the shift suggests, and the number remains harder to interpret than a shift in a binary pass rate from 75% to 80%. You can spend weeks making changes without knowing if you're actually making progress or just seeing random fluctuations in your annotators' moods.
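Whether a change in a pass rate is real or random also depends on how many traces were labeled. One way to check is a pooled two-proportion z-test, sketched below. The choice of test, the function name, and the counts are my assumptions for illustration, and the normal approximation it relies on is only reasonable for fairly large samples.

```python
import math


def two_proportion_p_value(passes_before, n_before, passes_after, n_after):
    """Two-sided p-value for a difference between two pass rates (pooled z-test)."""
    rate_before = passes_before / n_before
    rate_after = passes_after / n_after
    pooled = (passes_before + passes_after) / (n_before + n_after)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_before + 1 / n_after))
    if std_err == 0:
        raise ValueError("pass rates are all 0% or all 100%; the test is undefined")
    z = (rate_after - rate_before) / std_err
    # Two-sided tail probability of the standard normal distribution.
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical runs: the pass rate moves from 75% to 80% at two sample sizes.
p_small = two_proportion_p_value(150, 200, 160, 200)
p_large = two_proportion_p_value(1500, 2000, 1600, 2000)
print(f"200 traces per run:  p = {p_small:.3f}")
print(f"2000 traces per run: p = {p_large:.5f}")
```

With 200 traces per run, the 75% to 80% shift is not distinguishable from noise at the usual 0.05 threshold. With 2,000 per run, it is.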

Finally, Likert scales encourage lazy decision-making. Annotators often default to the middle value ('3') to avoid making a difficult judgment call. This "satisficing" behavior, as it's known in survey research, hides uncertainty rather than resolving it. A sea of '3's on your dashboard doesn't tell you what to fix; it just tells you your system is vaguely "okay."

The Power of Forced Decisions: Why Binary Evals Work

Switching to a binary pass/fail framework solves these problems by forcing clarity. You cannot simply label an output as "Fail" without knowing why it failed. This simple constraint is incredibly powerful.

It naturally pushes you toward the error analysis workflow we discussed previously. To create a binary evaluation, you must first identify a specific, well-defined failure mode. For example, instead of a vague "helpfulness" score, you create a binary evaluator for "Constraint Violation." This clarity makes the entire process more rigorous.

The benefits are immediate:

It Forces Clearer Thinking: You can't hide in ambiguity. An output either met the specific criterion or it didn't. This sharpens your definitions of quality.

It's Faster and More Consistent: Binary decisions are quicker for annotators to make, reducing fatigue and increasing the volume of traces they can review. This is especially true during the high-throughput process of open coding.

It's More Actionable: The output of a binary evaluation is not a fuzzy number; it's a clear signal tied to a specific problem. When an engineer sees a spike in the "Hallucinated Tool Invocation" failure rate, they know exactly where to start debugging.

"But I'm Losing Nuance!": How to Track Gradual Improvement

The most common objection to binary evaluations is the perceived loss of nuance. "What if a response is partially correct? A 'Fail' seems too harsh and doesn't show we're getting closer."

This is a valid concern, but a Likert scale is the wrong solution. The right way to capture nuance is not by making your scale fuzzier, but by making your criteria more granular.

Instead of a single, subjective rating for a complex quality like "factual accuracy," you should break it down into multiple, specific, binary checks.

For example, imagine your Recipe Bot is asked for a healthy, gluten-free chicken recipe. Instead of rating the response on a 1-5 scale for "Accuracy," you would create separate, binary evaluators for each sub-component:

Eval 1: Dietary Adherence: Is the recipe free of gluten? (Pass/Fail)

Eval 2: Ingredient Correctness: Did the recipe use chicken? (Pass/Fail)

Eval 3: Health Constraint: Did the recipe align with the "healthy" request (e.g., not deep-fried)? (Pass/Fail)

With this approach, you gain a far more precise and actionable view of your system's performance. You can now say, "Our system is passing the dietary and ingredient checks 95% of the time, but it's failing the 'healthy' constraint 40% of the time." That is a signal you can act on. You've captured the nuance without sacrificing clarity, and you can track your progress on each dimension independently.
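The three checks above can be sketched as separate binary evaluators with a pass rate tracked per criterion. Everything here is hypothetical: the `Recipe` structure, the keyword lists, and the sample outputs are stand-ins, and in a real system each check might be a code assertion or an LLM judge validated against human labels.

```python
from dataclasses import dataclass

# Hypothetical keyword lists, for illustration only.
GLUTEN_INGREDIENTS = {"wheat flour", "breadcrumbs", "soy sauce", "pasta"}
UNHEALTHY_METHODS = {"deep-fried"}


@dataclass
class Recipe:
    ingredients: list
    method: str


def dietary_adherence(recipe):
    """Pass if no ingredient is on the gluten list."""
    return not any(item in GLUTEN_INGREDIENTS for item in recipe.ingredients)


def ingredient_correctness(recipe):
    """Pass if the recipe uses chicken, as requested."""
    return "chicken" in recipe.ingredients


def health_constraint(recipe):
    """Pass if the cooking method is not on the unhealthy list."""
    return recipe.method not in UNHEALTHY_METHODS


EVALUATORS = {
    "dietary_adherence": dietary_adherence,
    "ingredient_correctness": ingredient_correctness,
    "health_constraint": health_constraint,
}


def pass_rates(recipes):
    """Fraction of recipes passing each binary check, reported separately."""
    return {
        name: sum(check(recipe) for recipe in recipes) / len(recipes)
        for name, check in EVALUATORS.items()
    }


# Hypothetical bot outputs for "healthy, gluten-free chicken recipe".
outputs = [
    Recipe(["chicken", "rice", "broccoli"], "grilled"),
    Recipe(["chicken", "breadcrumbs"], "deep-fried"),
    Recipe(["tofu", "rice"], "steamed"),
    Recipe(["chicken", "potatoes"], "deep-fried"),
]
rates = pass_rates(outputs)
for name, rate in rates.items():
    print(f"{name}: {rate:.0%} pass")
```

Keeping the rates separate rather than averaging them into one score is the point: each number maps to one failure mode someone can go fix.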

Conclusion: Choose Clarity Over False Nuance

Resist the temptation of the 1-to-5 star rating. While it promises a richer view of your system's performance, it often delivers noise and ambiguity. The goal of evaluation is not just to produce a number, but to drive meaningful product improvement.

Binary, failure-mode-specific metrics force the clarity, consistency, and actionability needed to build a robust feedback loop. They connect your high-level product goals directly to the on-the-ground reality of your system's behavior. Start with a simple "Pass" or "Fail," and you'll find yourself on a much faster path to building an AI product that truly works.

If you want to go deeper into building robust, scalable, and effective evaluation systems for your AI applications, check out the AI Evals course that Shreya Shankar and I have created. We provide a practical, hands-on framework to help you move beyond generic metrics and build AI products you can trust. You can find more details and enroll with a 35% discount (ends in 2 weeks) here.

Images

If not otherwise stated, all images are created by the author.
