#1. Your Best Friend - ML Monitoring

Part 1: Introduction to ML Monitoring: Why, When, and How.

You finally deployed your model. Yay!

Now, you can sit and relax.

Unfortunately, not so fast!

After you deploy your model, it is subject to 4 main points of failure:

service health

model performance

data quality and integrity

data and concept drift

In this newsletter, we will focus on model performance and on data and concept drift.

Let’s start from the beginning.

To compute model performance, aka metrics, the first step is to acquire your ground truth.

While your model is in production, a common issue is that you don't have your ground truth immediately or at all.

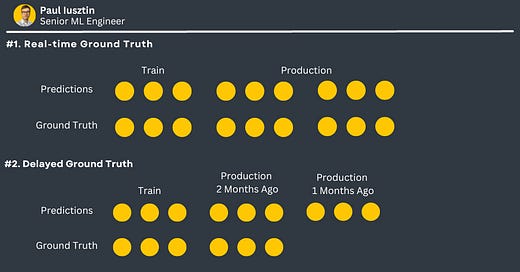

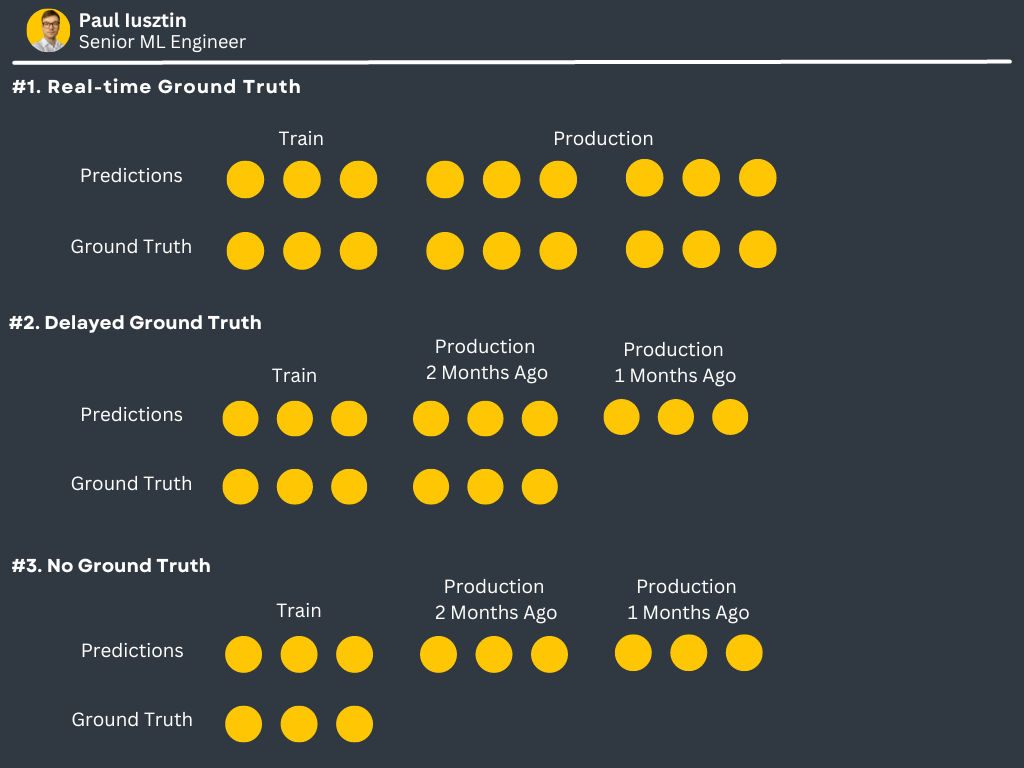

Let's investigate the 3 types of ground truth you can encounter in production 👇

1. Real-time Ground Truth

This is the ideal scenario where you can easily access your actuals.

For example, when you recommend an ad and the consumer either clicks it or not.

Or when you estimate food delivery times. When the food arrives, you can quickly calculate the performance.

2. Delayed Ground Truth

In this case, you will eventually get the ground truth. But, unfortunately, it often arrives too late for you to react in time.

For example, you predict whether a person should be granted a loan, but you learn the actual outcome only once the loan is repaid.
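
To make this concrete, here is a minimal sketch of computing a metric only on the predictions whose actuals have already arrived. The record IDs, the dictionary layout, and the `accuracy_on_matched` helper are all hypothetical, chosen just for illustration.

```python
def accuracy_on_matched(predictions, actuals):
    """Accuracy over predictions that already have an actual, plus coverage.

    Both arguments are dicts keyed by a (hypothetical) record ID.
    """
    matched = [(predictions[k], actuals[k]) for k in predictions if k in actuals]
    if not matched:
        return None, 0.0  # no ground truth has arrived yet
    accuracy = sum(p == a for p, a in matched) / len(matched)
    coverage = len(matched) / len(predictions)
    return accuracy, coverage


# Four predictions were served, but only two outcomes are known so far.
predictions = {"loan-1": 1, "loan-2": 0, "loan-3": 1, "loan-4": 1}
actuals = {"loan-1": 1, "loan-3": 0}

accuracy, coverage = accuracy_on_matched(predictions, actuals)
print(accuracy, coverage)
```

Tracking the coverage next to the metric shows how much of your traffic the number actually describes.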

3. No Ground Truth

This is the worst scenario, as you can't automatically collect any ground truth (GT). Usually, in these cases, you have to hire human annotators if you need any actuals.

For example, you have an object detector running in production. No feedback from the environment will provide you with the GT at any point.

☢️ Be careful about bias in your GT.

Let me explain. In the example where you predict if someone can receive a loan, you will collect GT only from people your model considers credit-worthy.

Thus, your ground truth will likely paint an overly optimistic picture of how safe it is to grant credit.

Do you see the importance of the data you use to train your model?

How to handle cases when you don't have GT?

This is where data and concept drift kick in.

You can use drifts as a proxy for your model performance.

Because drift is computed from data you can always collect in real time, it tells you when to send warnings and alerts even when no actuals are available.

Computing drifts is the key to a robust ML monitoring system. Let’s dig deeper 👇

Data and Concept Drifts

Using data and concept drift, we can detect events such as:

the outside world drastically changes (e.g., the COVID-19 pandemic)

the meaning of a field changes

the system naturally evolves, and features shift

a drastic increase in volume

features got switched, etc.

Intuitively, when a drift happens, the world (data) your model is used to changes. Thus, the model will behave weirdly if it doesn't adapt to the change (e.g., through retraining).

How do we compute data and concept drift?

Mainly using statistical distance checks such as:

PSI

KL Divergence

JS Divergence

KS Tests

EMD

Using these methods, we can check the following for changes (aka drifts):

model inputs

model outputs

actuals (aka ground truth)

How do we pick the reference distribution against which we watch for drifts?

Fixed Window

The reference is a fixed slice of data, for example from your training, validation, or test set.

Moving Window

Your reference distribution can be a window from the past, e.g., last week.
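
As a rough sketch of the two options, assume production values are logged as `(timestamp, value)` pairs. That record format and the `moving_window` helper are assumptions made for this example, not part of any specific tool.

```python
from datetime import datetime, timedelta


def moving_window(records, now, days=7):
    """Values logged during the `days` before `now`.

    `records` is a list of (timestamp, value) pairs (hypothetical format).
    """
    start = now - timedelta(days=days)
    return [value for ts, value in records if start <= ts < now]


# Fixed window: a slice frozen once, e.g. taken from the training data.
training_values = [0.1, 0.4, 0.35, 0.8]
fixed_reference = list(training_values)

# Moving window: recomputed at every check from recent production traffic.
now = datetime(2023, 1, 15)
production_log = [
    (datetime(2023, 1, 1), 0.2),   # older than a week, so excluded
    (datetime(2023, 1, 9), 0.5),
    (datetime(2023, 1, 14), 0.7),
]
moving_reference = moving_window(production_log, now)
print(moving_reference)
```

A fixed window catches any departure from what the model was trained on, while a moving window follows gradual evolution and mostly flags sudden changes.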

Now let's take a deeper look at what we can analyze:

1. Model Inputs

They can drift suddenly, gradually, or on a recurring basis.

For every feature, you can compare:

The training vs. production distribution

Production time window A vs. B

⚠️ Note that feature drifts are not always correlated with performance loss.

2. Model Outputs

Again, you can analyze the following:

the training vs. production prediction distribution

prediction distribution between production window A and B

⚡ Note that the analysis is done on the predictions, not the ground truth.

3. Actuals / Ground Truth

Analyze:

Actuals distribution for training vs. production

Prediction vs. actual distributions in production

⚡ Note that I never mentioned the word metric here.

The 5 methods you need to know to measure data & concept drift in your ML monitoring system.

One of the main methods to detect drifts is to use statistical distances.

Statistical distances are used to quantify the distance between two distributions.

In ML observability, you usually measure the distribution distance between:

training <-> production

past production <-> present production

⚡ When picking a method, look at the following:

the sample size it works best with

the level of sensitivity it has

Now let's see which are the top 5 most used statistical distances 👇

1. PSI

supports both numeric and categorical features

popular in the finance industry for measuring the inputs of the model

its value ranges from 0 to +Inf

it has a set of popular thresholds:

PSI < 0.1 -> OK

0.1 <= PSI < 0.2 -> Investigate

PSI >= 0.2 -> Alert

PSI has low sensitivity / detects only major changes

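you have to define bins -> it compares bin proportions, so it is much less affected by the sample size than a statistical test (each bin still needs enough samples for a stable estimate)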

it is symmetric
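
Here is a minimal, standard-library-only sketch of PSI on a numeric feature. The bin edges, the `eps` smoothing constant for empty bins, and the helper names are assumptions made for this example.

```python
import math
from bisect import bisect_right


def bin_fractions(values, edges, eps=1e-6):
    """Share of values in each bin; out-of-range values go to the edge bins."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect_right(edges, v) - 1
        i = min(max(i, 0), len(counts) - 1)
        counts[i] += 1
    # eps (an assumed smoothing constant) avoids log(0) for empty bins
    return [max(c / len(values), eps) for c in counts]


def psi(reference, current, edges):
    """PSI = sum over bins of (cur - ref) * ln(cur / ref)."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


edges = [0.0, 0.25, 0.5, 0.75, 1.0]         # hypothetical bin edges
reference = [i / 100 for i in range(100)]    # spread evenly over [0, 1)
shifted = [0.5 + v / 2 for v in reference]   # squeezed into [0.5, 1)

print(psi(reference, reference, edges))  # identical samples give 0.0
print(psi(reference, shifted, edges))    # well above the 0.2 alert threshold
```

Swapping the two arguments gives the same score, which is the symmetry mentioned above.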

2. KL Divergence

supports both numeric and categorical features

it is the relative entropy between a distribution and the reference

its value ranges from 0 to +Inf

the higher the score, the more different they are

KL has low sensitivity / detects only significant changes

you have to define bins -> it depends on the bin proportions rather than directly on the sample size

it is not symmetric
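
A small sketch of KL divergence on already-binned distributions, using made-up bin proportions. It assumes the second distribution has no empty bin wherever the first one has mass, otherwise the score is infinite.

```python
import math


def kl_divergence(p, q):
    """KL(p || q) in nats for two lists of bin probabilities.

    Assumes q[i] > 0 wherever p[i] > 0.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


reference = [0.25, 0.25, 0.25, 0.25]    # hypothetical binned training data
production = [0.10, 0.20, 0.30, 0.40]   # hypothetical binned production data

forward = kl_divergence(production, reference)
backward = kl_divergence(reference, production)
print(forward, backward)  # two different values: KL is not symmetric
```

Because the order matters, decide once which distribution plays the role of the reference and keep it fixed across checks.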

3. JS Divergence

supports both numeric and categorical features

it is based on KL Divergence

its value ranges from 0 to 1 (when computed with a base-2 logarithm)

it is symmetric

it is slightly more sensitive than PSI and KL divergence

you have to define bins -> it depends on the bin proportions rather than directly on the sample size

it is easy to interpret, as it is bounded between 0 and 1 and is symmetric
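
As a sketch, JS divergence can be written as the average KL divergence of each distribution to their midpoint. The base-2 logarithm is what keeps the score between 0 and 1. The bin proportions below are made up for illustration.

```python
import math


def kl_bits(p, q):
    """KL(p || q) with a base-2 logarithm (result in bits)."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p, q):
    """Average KL divergence of p and q to their midpoint distribution m."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    return 0.5 * kl_bits(p, m) + 0.5 * kl_bits(q, m)


reference = [0.25, 0.25, 0.25, 0.25]    # hypothetical binned training data
production = [0.10, 0.20, 0.30, 0.40]   # hypothetical binned production data

print(js_divergence(reference, production))   # small positive score
print(js_divergence([1.0, 0.0], [0.0, 1.0]))  # no overlap at all gives 1.0
```

Unlike KL, empty bins are not a problem here: the midpoint has mass wherever either distribution does.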

4. EMD

supports only numerical features

it measures how far the distribution moved, in the feature's own units (the bin-based methods above only see how much mass moved between bins, not how far it moved)

its value is feature-dependent, so it is good practice to normalize it by the feature's standard deviation

its value ranges from 0 to +Inf

works well with large sample sizes

tends to be more sensitive than PSI and JS but not too sensitive
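
A simplified sketch of EMD for one numeric feature, assuming both samples have the same size. In that special case the distance is just the mean gap between the sorted values. For samples of different sizes, a library routine such as SciPy's `wasserstein_distance` is the more practical choice. The helper names are made up for this example.

```python
import statistics


def emd_equal_size(reference, current):
    """1-D earth mover's distance for two samples of the same size."""
    if len(reference) != len(current):
        raise ValueError("this simplified version needs equal sample sizes")
    pairs = zip(sorted(reference), sorted(current))
    return sum(abs(a - b) for a, b in pairs) / len(reference)


def normalized_emd(reference, current):
    """EMD divided by the reference standard deviation (scale-free score)."""
    return emd_equal_size(reference, current) / statistics.pstdev(reference)


print(emd_equal_size([1, 2, 3], [2, 3, 4]))        # every value moved by 1
print(normalized_emd([1, 2, 3], [2, 3, 4]))
print(normalized_emd([10, 20, 30], [20, 30, 40]))  # same score after rescaling
```

The last two lines show why the normalization helps: the same relative shift gets the same score regardless of the feature's units.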

5. KS Test

it works with numerical features

this one is different, as it is a nonparametric statistical test

the null hypothesis is that the two samples come from the same distribution

thus, a common approach is to flag drift when the p-value < 0.05

it is very sensitive

its sensitivity increases with the sample size

usually works well with sample sizes < 1000, where you want to detect even a slight deviation
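
To illustrate how the sensitivity grows with the sample size, here is a standard-library sketch of the two-sample KS test. The p-value uses the classic asymptotic Kolmogorov series, so treat it as an approximation. In practice you would likely call a library routine such as SciPy's `ks_2samp`. The helper names and the toy data are assumptions for this example.

```python
import math


def ks_statistic(a, b):
    """Largest gap between the empirical CDFs of the two samples."""
    a, b = sorted(a), sorted(b)
    i = j = 0
    d = 0.0
    while i < len(a) and j < len(b):
        x = min(a[i], b[j])
        while i < len(a) and a[i] <= x:
            i += 1
        while j < len(b) and b[j] <= x:
            j += 1
        d = max(d, abs(i / len(a) - j / len(b)))
    return d


def ks_p_value(d, n, m, terms=100):
    """Asymptotic p-value: 2 * sum((-1)^(k-1) * exp(-2 * k^2 * lam^2))."""
    lam = math.sqrt(n * m / (n + m)) * d
    if lam < 0.2:  # the series is unstable here and the p-value is ~1 anyway
        return 1.0
    total = sum((-1) ** (k - 1) * math.exp(-2 * k * k * lam * lam)
                for k in range(1, terms + 1))
    return min(1.0, max(0.0, 2 * total))


def ks_drift(reference, current, alpha=0.05):
    """True when the p-value falls below alpha."""
    d = ks_statistic(reference, current)
    return ks_p_value(d, len(reference), len(current)) < alpha


# The same relative shift (5% of the range) at two sample sizes.
small_drift = ks_drift(list(range(100)), [x + 5 for x in range(100)])
large_drift = ks_drift(list(range(10_000)), [x + 500 for x in range(10_000)])
print(small_drift, large_drift)  # not flagged at n=100, flagged at n=10,000
```

The KS statistic is 0.05 in both cases. Only the sample size changes the verdict.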

⚡ One final note is that drifts are not always correlated with performance loss.

Thus, it is good practice to have two thresholds:

one for warnings -> investigate

one for alarms -> retrain

If retraining is costly, you should be careful when picking the alarm threshold.
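
The two-threshold idea can be sketched as a tiny decision function. The default values reuse the popular PSI thresholds only as an example. The function name and the returned labels are made up for illustration.

```python
def drift_action(score, warn_at=0.1, alarm_at=0.2):
    """Map a drift score to an action using a warning and an alarm threshold."""
    if warn_at > alarm_at:
        raise ValueError("the warning threshold must not exceed the alarm threshold")
    if score >= alarm_at:
        return "retrain"
    if score >= warn_at:
        return "investigate"
    return "ok"


for score in (0.05, 0.15, 0.30):
    print(score, drift_action(score))
```

When retraining is expensive, raising `alarm_at` while keeping `warn_at` low still gives you an early heads-up without triggering costly retrains.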

Conclusion

Thank you for reading my first newsletter! This means a lot to me.

This week you learned the following:

why you need to monitor your model after it is deployed.

which types of ground truth you can have when computing metrics

how to use data & concept drifts when you don’t have access to ground truth

how to compute data & concept drifts for structured data.

What challenges have you encountered after deploying your model in production? Leave your thoughts in the comments 👇

Next week we will discuss monitoring unstructured data, such as images or text.

Thus, check your inbox on Thursday at 9:00 am CET to learn more.

Have a great weekend!
