This week’s topics:

Architecting the observability pipeline of an AI agent

Pre-AI Agents Learning Roadmap

3 things NOT to learn as an AI Engineer

Architecting the observability pipeline of an AI agent

Here's what I've learned from building 5 LLM-related AI projects in 12 months:

Architecting the observability pipeline is harder than building the product.

But it’s absolutely critical.

When you tweak a prompt, add a new feature, or fix a bug, you need to be certain you haven’t broken anything else.

Constantly testing this manually is a huge waste of time and energy.

So, what's the workaround?

In the PhiloAgents project, we tackled this challenge by building a robust observability pipeline that unifies monitoring and evaluation into a single, cohesive system.

We use Opik (by Comet) to:

Monitor prompt usage and versioning live

Capture detailed traces of user inputs, agent actions, tool calls, and outputs

Track key latency and throughput metrics like Time to First Token and Tokens per Second

Run offline evaluations with LLM-as-a-Judge on curated datasets

Store evaluation metadata for tracking performance over time
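To make the latency metrics above concrete, here is a minimal sketch of how Time to First Token and Tokens per Second could be measured around any streaming response. It is plain Python and does not use Opik; `measure_stream`, `StreamMetrics`, and the injectable `clock` parameter are hypothetical names chosen for illustration, and Tokens per Second is assumed here to mean tokens generated after the first one divided by the time elapsed since the first one.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass
class StreamMetrics:
    time_to_first_token: float  # seconds from request start to the first token
    tokens_per_second: float    # throughput measured after the first token
    num_tokens: int


def measure_stream(
    tokens: Iterable[str],
    clock: Callable[[], float] = time.perf_counter,
) -> tuple[str, StreamMetrics]:
    """Consume a token stream and record basic latency metrics.

    Hypothetical helper: `tokens` is any iterable that yields text chunks,
    for example a streaming LLM response.
    """
    start = clock()
    first_at = None
    last_at = start
    pieces = []
    for token in tokens:
        last_at = clock()
        if first_at is None:
            first_at = last_at  # timestamp of the first token
        pieces.append(token)
    if first_at is None:
        raise ValueError("stream produced no tokens")

    generation_time = last_at - first_at
    # Assumption: throughput excludes the first token, whose delay is
    # already captured by time_to_first_token.
    if len(pieces) > 1 and generation_time > 0:
        tps = (len(pieces) - 1) / generation_time
    else:
        tps = 0.0
    metrics = StreamMetrics(first_at - start, tps, len(pieces))
    return "".join(pieces), metrics
```

In a real agent, these numbers would be attached to the trace of each request so they can be compared across prompt versions. The `clock` argument exists only so the function can be tested with a deterministic fake timer.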

The pipeline consists of two main parts:

Online pipeline: Gives real-time visibility into agent behavior, helping us quickly identify bugs, regressions, or performance issues in production.

Offline pipeline: Runs systematic, reproducible tests to measure overall agent quality, track trends, and validate improvements before deployment.

Together, these pipelines create a feedback loop that supports both immediate debugging and long-term continuous improvement.
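As a rough illustration of the offline side, the sketch below runs an agent over a small curated dataset, scores each answer with a judge, and gates deployment on the average score. Everything here is an assumption made for the example: `Sample`, `run_offline_eval`, and `keyword_judge` are hypothetical names, the judge is a trivial keyword check standing in for a real LLM-as-a-Judge call, and the 0.8 threshold is arbitrary. It is not how PhiloAgents or Opik implement evaluation.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Sequence


@dataclass(frozen=True)
class Sample:
    question: str
    reference: str  # expected content of a good answer


@dataclass(frozen=True)
class EvalReport:
    scores: list[float]
    mean_score: float
    passed: bool


def keyword_judge(question: str, answer: str, reference: str) -> float:
    """Stand-in judge: 1.0 if the reference text appears in the answer.

    A real LLM-as-a-Judge would prompt a model to grade the answer instead.
    """
    return 1.0 if reference.lower() in answer.lower() else 0.0


def run_offline_eval(
    dataset: Sequence[Sample],
    agent: Callable[[str], str],
    judge: Callable[[str, str, str], float] = keyword_judge,
    min_mean_score: float = 0.8,  # arbitrary threshold for this sketch
) -> EvalReport:
    """Score the agent on every sample and decide whether it passes."""
    if not dataset:
        raise ValueError("dataset must contain at least one sample")
    scores = [judge(s.question, agent(s.question), s.reference) for s in dataset]
    average = mean(scores)
    return EvalReport(scores, average, average >= min_mean_score)


if __name__ == "__main__":
    # Toy agent that only knows one answer.
    canned = {"Who wrote The Republic?": "The Republic was written by Plato."}
    data = [
        Sample("Who wrote The Republic?", "Plato"),
        Sample("Who wrote the Nicomachean Ethics?", "Aristotle"),
    ]
    report = run_offline_eval(data, lambda q: canned.get(q, "I am not sure."))
    print(report)
```

Because the dataset and the judge are fixed, the same run can be repeated after every prompt tweak or bug fix, and the stored reports show whether quality is trending up or down.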

If you want to build AI agents that survive beyond proofs of concept and scale reliably in production, mastering observability is non-negotiable.

For a practical deep dive into how we architected this system, check out Lesson 5 of the PhiloAgents course ↓↓↓

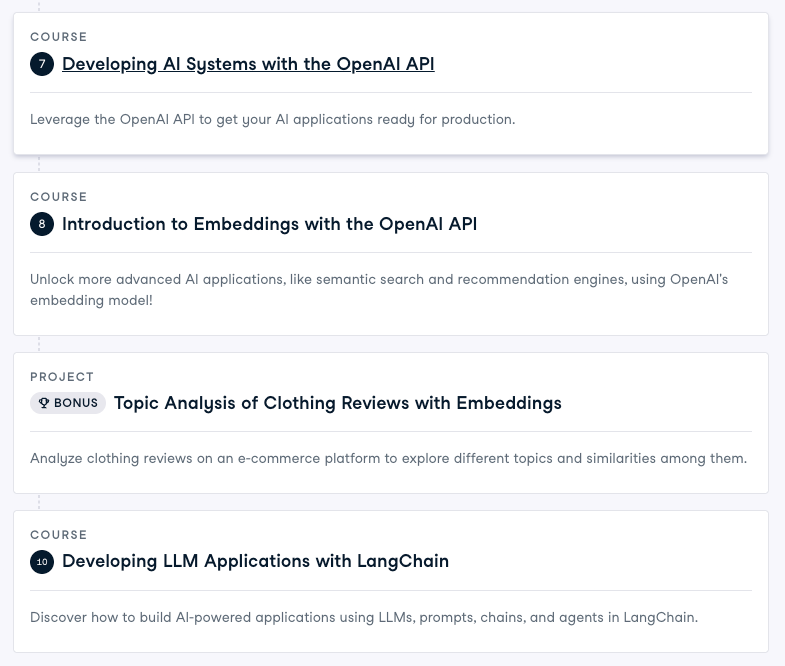

Pre-AI Agents Learning Roadmap (Affiliate)

If you need to fill in some gaps before getting into building production-ready AI agents or workflows, such as leveling up your Python, Deep Learning, MLOps, or LLM skills, we recommend DataCamp as your go-to learning platform.

At Decoding ML, we recommend only what we or our close friends have personally used, ensuring that the products we promote are of the highest quality.

Thus, we can guarantee the professionalism and quality of DataCamp. We found their learning platform to be a perfect balance between theory and practice, providing an engaging learning experience.

They respect your time, so they provide targeted learning roadmaps at multiple levels. For example, the ones that we recommend the most are:

If you're unsure, you can explore the first chapter of each track at no cost.

If any of these resources fits your needs, consider getting a DataCamp subscription ↓

3 things NOT to learn as an AI Engineer

If you're becoming an AI Engineer, here are 3 things NOT to focus on:

(I wasted months on each of them)

Deep research on LLM architectures

Advanced math

Chasing tools

Back then, it felt like good advice...

Now I know better.

Let me go into more detail about each one (in no particular order):

1. Deep research on LLM architectures

You don’t need to dive into the bleeding-edge stuff.

Understanding the vanilla transformer architecture is enough to grasp the latest inference optimization techniques (required to fine-tune or deploy LLMs at scale).

Just learn the “Attention Is All You Need” paper inside out.

Leave the complicated stuff to the researchers and fine-tuning guys.

2. Too much math

I don’t think that studying advanced algebra, geometry, or mathematical analysis will help you much.

Just build a fundamental knowledge of statistics (e.g., probabilities, histograms, and distributions).

(This will solve 80% of your AI engineering problems)

3. Focusing too much on tooling

Principles > tools.

Most of the time, you’ll work with vendor solutions like AWS, GCP, or Databricks.

Don’t waste your energy chasing the newest framework every week.

Stick with proven open-source tools like Docker, Grafana, Terraform, Metaflow, and Airflow, and build systems, not toolchains.

Takeaway:

AI Engineering is not ML research.

It’s product-grade software with ML under the hood.

Learn what matters. Skip what doesn’t.

P.S. Have you made any of these mistakes? Leave your thoughts in the comments.

Whenever you’re ready, there are 3 ways we can help you:

Perks: Exclusive discounts on our recommended learning resources (books, live courses, self-paced courses and learning platforms).

The LLM Engineer’s Handbook: Our bestselling book, which teaches an end-to-end framework for building production-ready LLM and RAG applications, from data collection to deployment (get up to 20% off using our discount code).

Free open-source courses: Master production AI with our end-to-end open-source courses, which reflect real-world AI projects and cover everything from system architecture to data collection, training and deployment.

Images

If not otherwise stated, all images are created by the author.