The fifth lesson of the open-source PhiloAgents course: a free course on building gaming simulation agents that transform NPCs into human-like characters in an interactive game environment.

A 6-module journey, where you will learn how to:

Create AI agents that authentically embody historical philosophers.

Master building real-world agentic applications.

Architect and implement a production-ready RAG, LLM, and LLMOps system.

Lessons:

Lesson 1: Build your gaming simulation AI agent

Lesson 2: Your first production-ready RAG Agent

Lesson 3: Memory: The secret sauce of AI agents

Lesson 4: Deploying agents as real-time APIs 101

Lesson 5: Observability for RAG Agents

Lesson 6: Engineer Python projects like a PRO

🔗 Learn more about the course and its outline.

A collaboration between Decoding ML and Miguel Pedrido (from The Neural Maze).

Observability for RAG Agents

Welcome to Lesson 5 of the PhiloAgents open-source course, where you will learn to architect and build a production-ready gaming simulation agent that transforms NPCs into human-like characters in an interactive game environment.

Our philosophy is that we learn by doing. No procrastination, no useless “research,” just jump straight into it and learn along the way. Suppose that’s how you like to learn. This course is for you.

Until now, we’ve focused on making our agents intelligent and interactive—shaping their personalities, wiring them to tools, and deploying them through real-time APIs.

But being smart doesn’t guarantee being reliable—especially in production.

Once your agents are live, the real questions begin: Are they reasoning effectively? Are their responses actually helpful—or drifting off course? Are your prompt changes improving performance or breaking things silently? And most importantly—how would you even know?

That’s where observability steps in. In Lesson 5, we shift from building agents to measuring them. It’s time to make your PhiloAgents not just smart and deployable—but visible, testable, and version-controlled.

In this lesson, we’ll explore how to monitor agent behavior, version their prompts, and evaluate their performance with real metrics. You’ll learn how to set up observability pipelines using tools like Opik and LangGraph, implement both offline and online evaluation workflows, and even generate your own datasets for conversational testing.

Observability is a key pillar of LLMOps and a core responsibility of the modern AI engineer. By the end of this lesson, your agents won’t just sound wise—they’ll have the traceability and feedback loops to back it up.

In this lesson, we’ll take your PhiloAgent from a black-box experiment to a transparent, measurable system. You’ll learn how to monitor your agent’s behavior, track prompt versions, and evaluate performance—core skills for deploying and improving agents in the real world.

Here are the LLMOps concepts we’ll dive into:

Understand what observability means in the context of LLMs and agents.

Learn how to monitor complex prompt traces in real time using Opik.

Implement prompt versioning to track changes and ensure reproducibility.

Generate evaluation datasets and run structured assessments on your agents.

Explore how LLM offline and online evaluation pipelines fit into your architecture.

Let’s get started. Enjoy!

Podcast version of the lesson

Table of contents:

Understanding what observability is in the context of agents and LLMs

Zooming in on evaluating agentic applications

Architecting the observability pipeline

Adding prompt monitoring to our PhiloAgent

Implementing prompt versioning

Generating a conversational evaluation dataset

Evaluating our PhiloAgent

Running the code

1. Understanding what observability is in the context of agents and LLMs

As AI engineers, we spend a lot of time building agents that think—reasoning through inputs, recalling memory, and generating thoughtful responses. But once those agents are live and interacting with real users, the next question becomes: how do we know what they’re doing?

This is where observability enters the picture.

Observability refers to our ability to monitor, measure, and debug the internal workings of an intelligent system—especially when things go wrong, or when we want to iterate and improve.

In the context of LLMs and agents, observability is part of the broader LLMOps stack. While LLMOps includes many aspects—like managing infrastructure, scaling model inference, or implementing guardrails—this lesson focuses specifically on the observability layer.

Observability for LLM-based agents typically includes four key parts:

Monitoring – Tracking what prompts are being sent, how they’re structured, how often they’re used, what responses are being generated, and how much they cost or take to run end-to-end.

Versioning – Keeping track of prompt changes over time so you know what version produced which output (vital for reproducibility and debugging).

Evaluation – Measuring the quality of agent responses, whether through automated metrics (like relevance or coherence), human feedback, or LLM-as-a-judge tools.

Feedback collection – Gathering real signals from users or labeling systems to inform future improvements, fine-tuning, or alignment.

Together, these components let you turn your agents from black boxes into transparent systems—ones you can monitor, evaluate, and evolve with confidence. With it, you get the data you need to improve your product, debug failures, and scale responsibly.

2. Zooming in on evaluating agentic applications

At this point in the PhiloAgents course, we’ve built and deployed agents that can retrieve context, reason in-character, and respond in real time. But how do we know if they’re doing a good job?

Evaluating LLMs is already a nuanced topic—but evaluating agents adds another layer of complexity.

That’s because agents are not just answering questions; they’re orchestrating multiple steps: retrieving relevant documents, reasoning over that data, maintaining internal state, and responding in a way that reflects a specific persona (say, Socrates or Aristotle). It’s not just about what they say—it’s about how they arrive there.

To evaluate this kind of behavior, we adopt a system-level evaluation strategy. We observe inputs, outputs, and everything in between—including the evolving context generated during the agent’s reasoning process.

Instead of isolating and testing each internal step, we focus on the system’s behavior as a whole. We treat the agent as a single unit, observe its inputs, outputs and context, and assess whether it meets the expectations of accuracy, helpfulness, grounding, and consistency with the philosopher’s style.

This kind of evaluation goes beyond traditional LLM evaluation, which usually centers on single-turn outputs using internal knowledge. Those techniques—like BLEU or ROUGE —are useful in benchmarking a model’s core generation skills. But for agents, we need to go further.

BLEU and ROUGE still have their place—for deterministic NLP tasks like summarization or translation where outputs can be more directly compared to references.

That’s why, in agentic RAG workflows, we pay close attention to how multiple layers of the process work together—not just the inputs and outputs, but what happens in between. This includes:

The user’s input (what kicked off the conversation)

The internal context (the agent's state containing persona, perspective, and conversation summary)

The final output (what the agent said)

The expected answer, if we have one

All this information presented above is typically passed to a second LLM acting as a judge. This evaluator model scores the agent’s performance across multiple metrics such as hallucination, relevance, and context precision, using the entire context to ground its judgment.

To ensure this evaluation is statistically meaningful, it's recommended to use at least 30–50 samples as a baseline, with larger evaluation datasets (400+ examples) offering more detailed insights.

Evaluating all of this together allows us to detect deeper system-level issues. For example, if the retrieval was weak, we might see hallucinations. If the context wasn’t properly used, relevance drops. If the persona slips, our immersion breaks.

In addition to quality, we also monitor system-level metrics such as latency, throughput, and user engagement to ensure both performance and impact.

To visualize how this all connects, check out the diagram below:

This diagram captures the feedback loop behind evaluating agentic applications. It starts with an Evaluation Dataset that provides input prompts to the Agentic Application. The resulting outputs—along with the internal context that shaped them—are then forwarded to the Evaluation layer.

Here, we use a second model (LLM-as-a-Judge) to score responses on metrics like hallucination, answer relevance, and context precision. Those scores are then logged into Opik, an open-source tool used to implement our observability layer.

This setup makes it easy to compare versions, trace regressions, and debug unexpected behaviors. Opik acts as the central hub where evaluation results, prompts, context, and scores all come together—keeping your agent explainable, testable, and transparent as it evolves.

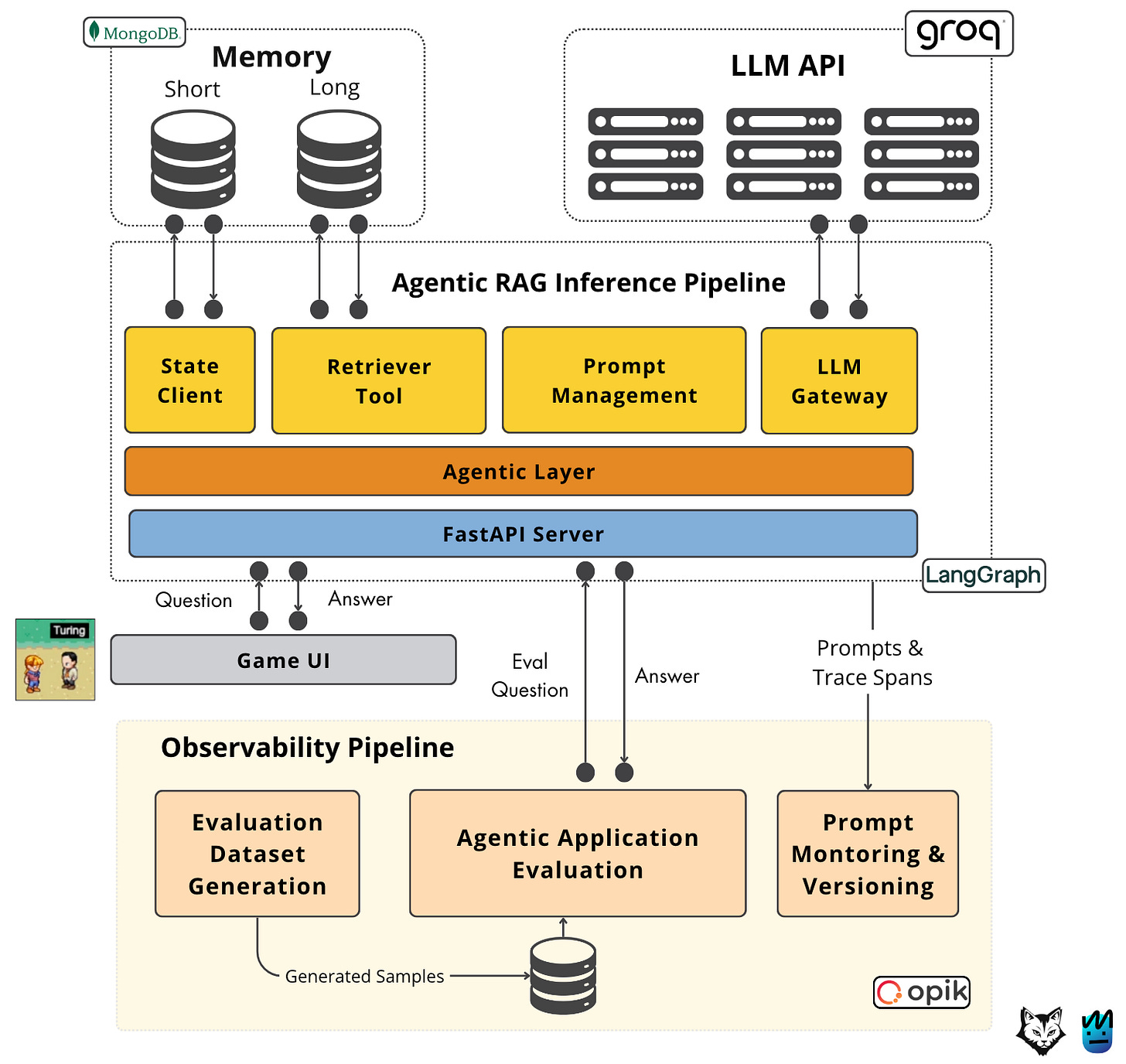

3. Architecting the observability pipeline

To make sure PhiloAgents stays both stable and understandable, we’ve built an observability pipeline that connects monitoring and evaluation into one system.

With Opik, we can see how our agents are performing in real time—and find ways to improve them.

This observability layer ties together multiple components of the system: prompt monitoring and versioning, evaluation dataset generation, and offline evaluation. Each part serves a different purpose, but together they help us trace performance, catch regressions, and iterate safely across time.

Check out how this integrates with the rest of our system:

Observability here isn't just about logging—it's how we make sure every part of the PhiloAgents architecture is testable, measurable, and transparent.

We break this system into two main parts: the online pipeline, which handles real-time prompt tracking and trace analysis, and the offline pipelines, which focuses on generating evaluation datasets and scoring agent responses.

Let’s dig deeper into each of them.

The online pipelines

When someone talks to a PhiloAgent, everything that happens is recorded in real time. That includes the user’s input, the steps the agent takes, the tools it uses, and the final answer it gives. Opik captures all of this as a trace.

This trace includes more than just text—it also stores the versioned prompt templates used, model details, and configuration info. We also get detailed timing metrics like:

TTFT (Time to First Token)

TBT (Time Between Tokens)

Tokens per Second

Total Generation Latency

These metrics help us understand system performance and catch issues quickly. For example, if an agent’s responses slow down or change style, we can trace it back to a specific prompt version or model config.

That’s why a prompt management tool, such as Opik is so useful—it helps us monitor, debug, and tune our app in production.

The offline pipelines

While the online pipeline watches real-time behavior, the offline pipeline helps us assess agent performance in a more systematic and reproducible way.

We start by generating an evaluation dataset—multi-turn conversations between users and philosophers built from real documents. These are created using prompt templates, a Groq LLM, and structured generation logic.

We then evaluate the agent's answers to these prompts using a second model—an LLM-as-a-Judge—which scores each response based on structured rubrics such as hallucination, answer relevance, moderation, context recall, and context precision.

Each score is paired with metadata—like which dataset was used, what prompt versions were active, which philosopher persona was tested, and how long the agent took to respond. All this data is tracked and stored inside Opik.

Because of this, we don’t just get a single snapshot—we get a full view of how our agent performs across time. We can look back at evaluation runs, compare results, spot regressions, and understand which changes (in prompts, models, or datasets) impacted performance.

Since these evaluations run offline, we can schedule them regularly, automate them through CI pipelines, or trigger them manually whenever needed—all without affecting production traffic. This setup gives us the flexibility to test often, validate improvements, and catch issues before they go live.

In addition to running offline evaluations, we can also hook the evaluation pipeline to the online system. For example, we can automatically evaluate a sample of incoming prompt traces—say 50% of them—in real time. This way, we get continuous feedback without needing to evaluate every single prompt, which helps control costs while still maintaining strong coverage.

Online vs offline

The online and offline sides of the pipeline work together, but they each have a distinct role:

The online pipeline shows how the agent behaves right now. It helps us find bugs and track down weird behavior. This is a critical component for understanding what went wrong and how to fix it while the agent is in production.

The offline pipeline tells us how good the agent is overall. It shows us trends, tracks changes, and gives us solid metrics to compare versions. This is a critical component when developing or adding new features to the agent.

By keeping them separate but connected, we get the best of both worlds—real-time debugging and long-term evaluation. And since everything is tracked in Opik, we can jump between them easily.

4. Adding prompt monitoring to our PhiloAgent

Once your agent is up and running, the next logical step is to add observability. In this section, we’ll show how to enable prompt monitoring using Opik’s LangGraph integration. This allows you to trace every step the agent takes—giving you visibility into message flow, prompt usage, latency, and output generation.

Luckily, this only requires a few extra lines of code.

Let’s take a look again at the core function that powers the agent’s conversational logic: get_response() (check code in GitHub):

async def get_response(

messages: str | list[str] | list[dict[str, Any]],

philosopher_id: str,

philosopher_name: str,

philosopher_perspective: str,

philosopher_style: str,

philosopher_context: str,

new_thread: bool = False,

) -> tuple[str, PhilosopherState]:

graph_builder = create_workflow_graph()

try:

async with AsyncMongoDBSaver.from_conn_string(

conn_string=settings.MONGO_URI,

db_name=settings.MONGO_DB_NAME,

checkpoint_collection_name=settings.MONGO_STATE_CHECKPOINT_COLLECTION,

writes_collection_name=settings.MONGO_STATE_WRITES_COLLECTION,

) as checkpointer:

graph = graph_builder.compile(checkpointer=checkpointer)

opik_tracer = OpikTracer(graph=graph.get_graph(xray=True))

thread_id = (

philosopher_id if not new_thread else f"{philosopher_id}-{uuid.uuid4()}"

)

config = {

"configurable": {"thread_id": thread_id},

"callbacks": [opik_tracer],

}

output_state = await graph.ainvoke(

input={

"messages": __format_messages(messages=messages),

"philosopher_name": philosopher_name,

"philosopher_perspective": philosopher_perspective,

"philosopher_style": philosopher_style,

"philosopher_context": philosopher_context,

},

config=config,

)

last_message = output_state["messages"][-1]

return last_message.content, PhilosopherState(**output_state)

except Exception as e:

raise RuntimeError(f"Error running conversation workflow: {str(e)}") from eHere’s the snippet that handles Opik integration:

graph = graph_builder.compile(checkpointer=checkpointer)

opik_tracer = OpikTracer(graph=graph.get_graph(xray=True))

thread_id = (

philosopher_id if not new_thread else f"{philosopher_id}-{uuid.uuid4()}"

)

config = {

"configurable": {"thread_id": thread_id},

"callbacks": [opik_tracer],

}The graph_builder compiles the workflow and adds a checkpointer to persist internal states. Then comes the magic: OpikTracer is initialized using an xray view of the compiled graph. This captures every LangGraph execution span and sends it to Opik for tracing.

We also define a thread_id (unique per conversation) to track each interaction separately. This makes debugging much easier. The tracer is then injected via the LangGraph config, under callbacks.

With this setup, every conversation flow—including intermediate tool usage, prompt decisions, and LLM responses—is logged and visualized in Opik’s dashboard. You get full visibility with minimal effort.

Want to learn more? Check out Opik’s documentation to explore more details on how Opik integrates with LangGraph.

Below you can see an overview of all the traces collected for the philoagents_course project:

When you click into a trace, you can inspect the entire interaction step by step, including inputs, outputs, and the LangGraph spans:

Opik tracks each node execution, showing precise durations (e.g., how long the LLM took to respond or how fast a particular tool was invoked). This lets you diagnose slow spans, pinpoint bottlenecks, and compare performance across runs.

You can also switch to the "Metadata" tab to view token usage and cost:

There are many more such views and details inside Opik, including step-level graphs, retry histories, and custom metadata, all tied to a single trace.

Explore more about the topic in Opik’s docs.

You can explore execution graphs over time, analyze latency breakdowns for each function, and view retries or failures in context.

If configured, you can also track the number of tokens per LLM call and for the overall prompt trace. Based on this, you can quickly compute the total cost of each agent request, understanding how much it costs to run your application over time.

In the next section, we’ll tackle prompt versioning—so you can not only monitor your agents, but also version their behavior across changes.

5. Implementing prompt versioning

Now that we’ve added prompt monitoring to our PhiloAgent, the next step is versioning our prompts.

This gives us control and reproducibility across different releases, letting us track changes and understand how each prompt iteration impacts agent behavior.

To enable versioning, we define a Prompt wrapper class that integrates with Opik. This class manages prompt registration and fallback logic in case versioning is not available (so the application still works in offline mode).

Let’s walk through the implementation.

We start by defining a simple wrapper that registers our prompts with Opik and assigns them a version:

import opik

from loguru import logger

class Prompt:

def __init__(self, name: str, prompt: str) -> None:

self.name = name

try:

self.__prompt = opik.Prompt(name=name, prompt=prompt)

except Exception:

logger.warning(

"Can't use Opik to version the prompt (probably due to missing or invalid credentials). "

"Falling back to local prompt. The prompt is not versioned, but it's still usable."

)

self.__prompt = promptThis constructor attempts to register the prompt with Opik. If credentials are missing or invalid, it gracefully falls back to a local string definition, so your app can still run without breaking.

Next, we expose the actual prompt content via properties:

@property

def prompt(self) -> str:

if isinstance(self.__prompt, opik.Prompt):

return self.__prompt.prompt

else:

return self.__prompt

def __str__(self) -> str:

return self.prompt

def __repr__(self) -> str:

return self.__str__()This ensures that whether your prompt comes from Opik or not, the interface for consuming it remains the same.

Now, let’s take a look at how we define actual prompts for the agent. Below is the philosopher_character_card, which sets the tone and identity for the AI philosopher:

__PHILOSOPHER_CHARACTER_CARD = """

Let's roleplay. You're {{philosopher_name}} - a real person, engaging with another individual in

a philosophical conversation. Use short sentences, explaining your ideas and perspective in a

concise but educational and engaging way. Your responses must never exceed 100 words.

Your name, philosophical perspective, and talking style are detailed below.

---

Philosopher name: {{philosopher_name}}

Philosopher perspective: {{philosopher_perspective}}

Philosopher talking style: {{philosopher_style}}

---

You must always follow these rules:

- Never say you’re an AI or virtual assistant.

- Always introduce yourself in your first response.

- Respond in plain text—no markdown, no footnotes, no formatting.

- Always keep your response under 80 words.

---

Summary of previous conversation between {{philosopher_name}} and the user:

{{summary}}

---

The conversation between {{philosopher_name}} and the user starts now.

"""

PHILOSOPHER_CHARACTER_CARD = Prompt(

name="philosopher_character_card",

prompt=__PHILOSOPHER_CHARACTER_CARD,

)This is the most central prompt to your agent’s behavior and identity. Because it’s registered with Opik, you’ll be able to track how each version changes across development iterations.

Other prompts follow a similar structure. Here's the summary_prompt, which condenses the ongoing conversation:

__SUMMARY_PROMPT = """

Create a summary of the conversation between {{philosopher_name}} and the user.

The summary must be concise, capturing all important philosophical ideas and user questions shared so far:

"""

SUMMARY_PROMPT = Prompt(

name="summary_prompt",

prompt=__SUMMARY_PROMPT,

)And the extend_summary_prompt, which appends new message content to the existing summary:

__EXTEND_SUMMARY_PROMPT = """

This is a summary of the conversation to date between {{philosopher_name}} and the user:

{{summary}}

Extend the summary by taking into account the new messages above.

"""

EXTEND_SUMMARY_PROMPT = Prompt(

name="extend_summary_prompt",

prompt=__EXTEND_SUMMARY_PROMPT,

)We also have a lightweight context_summary_prompt for compressing context when needed:

__CONTEXT_SUMMARY_PROMPT = """

Your task is to summarize the following information into less than 50 words. Just return the summary.

{{context}}

"""

CONTEXT_SUMMARY_PROMPT = Prompt(

name="context_summary_prompt",

prompt=__CONTEXT_SUMMARY_PROMPT,

)Finally, here’s the prompt we will use to generate evaluation data for our fine-tuning and benchmarking pipeline:

__EVALUATION_DATASET_GENERATION_PROMPT = """

Generate a conversation between a philosopher and a user based on the provided document. The philosopher will respond by referencing the document. If a question is off-topic, they’ll say: “I don’t know.”

Return the result in this format:

{

"messages": [

{"role": "user", "content": "Hi I’m <name>. <question related to doc>?"},

{"role": "assistant", "content": "..."},

...

]

}

You must:

- Start with the user introducing themselves.

- Generate 2–4 Q&A pairs.

- Make sure the conversation reflects the document and philosopher’s views.

Philosopher: {{philosopher}}

Document: {{document}}

"""

EVALUATION_DATASET_GENERATION_PROMPT = Prompt(

name="evaluation_dataset_generation_prompt",

prompt=__EVALUATION_DATASET_GENERATION_PROMPT,

)If versioning is enabled and credentials are correctly configured, each of these prompts will appear in Opik’s "Prompt Library." From there, you can:

See the latest version of any prompt.

Compare historical changes (great for debugging).

Track which version was used in each trace or evaluation.

Below is an example of what a registered prompt looks like in Opik’s interface:

When you update a prompt—say, the evaluation_dataset_generation_prompt—and rerun the agent, Opik automatically assigns it a new version hash. You’ll see the updated version alongside the original and can dive into their differences.

In the image below, you can see how Opik records prompt modifications along with the commit history on the right:

For an overview of all registered prompts and how many versions each one has, you can check the main prompt library page.

Here’s a screenshot of that view, where version tracking is clearly visible:

This prompt versioning flow ensures your agents are transparent and traceable. Any time a change is made—intentional or accidental—you can observe, test, and trace its impact.

Learn more about managing and versioning prompts in Opik’s Prompt Management Guide.

Next, let’s put these prompts to work by generating a conversational evaluation dataset.

6. Generating a conversational evaluation dataset

Before evaluating our agents, we need one essential ingredient: a good dataset. Not just any dataset, but one designed to reflect real-world conversations our PhiloAgents might have.

That’s where this step comes in. In this section, we’ll walk through how we generated the evaluation dataset for our philosopher agents.

The technique we used draws inspiration from a method commonly known as fine-tuning with distillation. This strategy involves using a stronger, more capable model—like GPT-4o—to generate high-quality input-output pairs that a smaller or less powerful model can later learn from.

In our case, we use this idea to create realistic dialogue samples, simulating user-agent interactions that are grounded in philosophical texts.

These synthetic conversations can serve both as evaluation material and potential fine-tuning data, enabling us to scale testing and iterate on agent behavior more effectively.

Learn more in our fine-tuning with distillation deep dive

In our case, we generate realistic message-response pairs using Groq’s LLM API, grounded in actual philosophical texts. These conversations are designed to evaluate whether our PhiloAgents can remain consistent, relevant, and grounded in the context in which they were built.

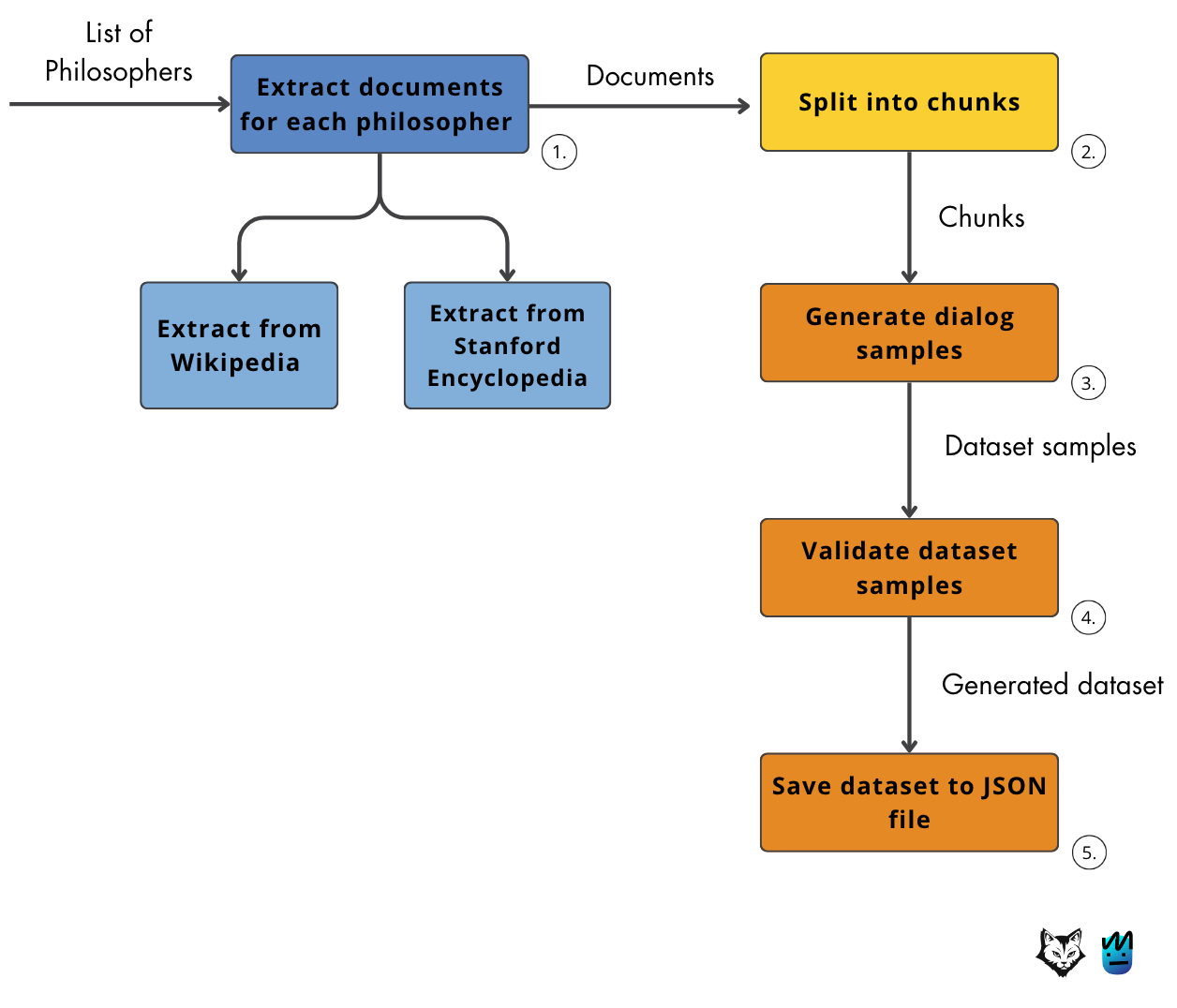

Check out below the diagram illustrating the key steps behind our evaluation dataset generation pipeline:

As you can see, the pipeline follows a simple but powerful flow—from extracting philosophical texts to producing structured dialogue samples ready for evaluation. This setup helps us quickly create high-quality datasets that reflect the kinds of conversations we expect PhiloAgents to handle.

Let’s explore how this works in code.

We begin with the class responsible for generating the dataset:

class EvaluationDatasetGenerator:

def __init__(self, temperature: float = 0.8, max_samples: int = 40) -> None:

self.temperature = temperature

self.max_samples = max_samples

self.__chain = self.__build_chain()

self.__splitter = self.__build_splitter()This sets up the generator. We use a temperature-controlled LLM (via Groq) and define a maximum number of samples. Under the hood, two helpers are initialized:

__chain()builds the prompt + model combo that outputs structured message pairs.__splitter()breaks up long documents into manageable input chunks.

Let’s begin by exploring how we construct the chain:

def __build_chain(self):

model = ChatGroq(

api_key=settings.GROQ_API_KEY,

model_name=settings.GROQ_LLM_MODEL,

temperature=self.temperature,

)

model = model.with_structured_output(EvaluationDatasetSample)

prompt = ChatPromptTemplate.from_messages(

[

("system", prompts.EVALUATION_DATASET_GENERATION_PROMPT.prompt),

],

template_format="jinja2",

)

return prompt | modelAs mentioned before, under the hood we’re using a Groq-hosted LLM—specifically optimized for ultra-low latency and high throughput, thanks to Groq’s custom-designed hardware accelerator.

That means responses are generated with minimal delay, making it ideal for real-time use cases like agentic evaluation. In this setup, we communicate with Groq’s API using their ChatGroq interface, specifying the model name and API key.

To learn more about Groq’s unique architecture and supported models → Groq Documentation

This function constructs a reusable LLM pipeline. We define the model with a specific temperature and wrap it to output structured data in the format of EvaluationDatasetSample:

class EvaluationDatasetSample(BaseModel):

"""A sample conversation for evaluation purposes.

Contains a list of messages exchanged between a user and an assistant,

typically consisting of 3 question-answer pairs.

Attributes:

philosopher_id: The ID of the philosopher associated with this sample.

messages: A list of Message objects representing the conversation.

"""

philosopher_id: str | None = None

messages: List[Message]We then pair the model with a Jinja2-formatted system prompt that guides the generation. The final line combines both the prompt and model into a single callable chain.

Next, we dig into the document splitter:

def __build_splitter(

self, max_token_limit: int = 6000

) -> RecursiveCharacterTextSplitter:

return RecursiveCharacterTextSplitter.from_tiktoken_encoder(

encoding_name="cl100k_base",

chunk_size=int(max_token_limit * 0.25),

chunk_overlap=0,

)This sets up a character-based text splitter that breaks long documents into smaller chunks, each roughly 1500 tokens. It’s designed for compatibility with Groq’s tokenizer and helps the LLM handle manageable context sizes.

The core of the generation logic lies in the __call__ method:

def __call__(self, philosophers: list[PhilosopherExtract]) -> EvaluationDataset:

dataset_samples = []

extraction_generator = get_extraction_generator(philosophers)

for philosopher, docs in extraction_generator:

chunks = self.__splitter.split_documents(docs)

for chunk in chunks[:4]:

try:

dataset_sample = self.__chain.invoke(

{"philosopher": philosopher, "document": chunk.page_content}

)

except Exception as e:

logger.error(f"Error generating dataset sample: {e}")

continue

dataset_sample.philosopher_id = philosopher.id

if self.__validate_sample(dataset_sample):

dataset_samples.append(dataset_sample)

time.sleep(1)

if len(dataset_samples) >= self.max_samples:

break

if len(dataset_samples) >= self.max_samples:

logger.warning(

f"Reached maximum number of samples ({self.max_samples}). Stopping."

)

breakWe iterate over each philosopher and the corresponding document extracts, split them, and generate up to 4 samples per philosopher.

To retrieve these document extracts, we use the get_extraction_generator() method:

def get_extraction_generator(

philosophers: list[PhilosopherExtract],

) -> Generator[tuple[Philosopher, list[Document]], None, None]:

"""Extract documents for a list of philosophers, yielding one at a time.

Args:

philosophers: A list of PhilosopherExtract objects containing philosopher information.

Yields:

tuple[Philosopher, list[Document]]: A tuple containing the philosopher object and a list of

documents extracted for that philosopher.

"""

progress_bar = tqdm(

philosophers,

desc="Extracting docs",

unit="philosopher",

bar_format="{desc}: {percentage:3.0f}%|{bar}| {n_fmt}/{total_fmt} [{elapsed}<{remaining}, {rate_fmt}] {postfix}",

ncols=100,

position=0,

leave=True,

)

philosophers_factory = PhilosopherFactory()

for philosopher_extract in progress_bar:

philosopher = philosophers_factory.get_philosopher(philosopher_extract.id)

progress_bar.set_postfix_str(f"Philosopher: {philosopher.name}")

philosopher_docs = extract(philosopher, philosopher_extract.urls)

yield (philosopher, philosopher_docs)

This function processes a list of philosopher metadata and, for each philosopher, retrieves the associated documents from the web. It wraps this with a progress bar for visibility and returns a generator of philosopher-document pairs to be used in the dataset generation loop.

To learn more about how this extraction process is implemented, check out Lesson 3, where we walk through it step by step.

Returning to the __call__ method, each sample is a structured chat message created using the prompt/model pipeline. We also rate-limit to avoid hitting Groq’s API too aggressively.

Only valid samples make it into the dataset—those that end with a user and assistant message pair:

def __validate_sample(self, sample: EvaluationDatasetSample) -> bool:

return (

len(sample.messages) >= 2

and sample.messages[-2].role == "user"

and sample.messages[-1].role == "assistant"

)After generating the evaluation samples, we move on to persist the dataset:

assert len(dataset_samples) >= 0, "Could not generate any evaluation samples."

logger.info(f"Generated {len(dataset_samples)} evaluation sample(s).")

logger.info(f"Saving to '{settings.EVALUATION_DATASET_FILE_PATH}'")

evaluation_dataset = EvaluationDataset(samples=dataset_samples)

evaluation_dataset.save_to_json(file_path=settings.EVALUATION_DATASET_FILE_PATH)

return evaluation_datasetThis stores the evaluation data in a local JSON file, ready to be loaded by our evaluation engine later.

Finally, we define the CLI entry point that triggers the dataset generation process:

@click.command()

@click.option(

"--metadata-file",

type=click.Path(exists=True, path_type=Path),

default=settings.EXTRACTION_METADATA_FILE_PATH,

help="Path to the metadata file containing philosopher extracts",

)

@click.option(

"--temperature",

type=float,

default=0.9,

help="Temperature parameter for generation",

)

@click.option(

"--max-samples",

type=int,

default=40,

help="Maximum number of samples to generate",

)

def main(metadata_file: Path, temperature: float, max_samples: int) -> None:

philosophers = PhilosopherExtract.from_json(metadata_file)

logger.info(

f"Generating evaluation dataset with temperature {temperature} and {max_samples} samples."

)

logger.info(f"Total philosophers: {len(philosophers)}")

evaluation_dataset_generator = EvaluationDatasetGenerator(

temperature=temperature, max_samples=max_samples

)

evaluation_dataset_generator(philosophers)Once you’ve extracted philosopher metadata, this CLI command will automatically generate and save a new dataset you can later evaluate.

Here’s what a sample of the evaluation dataset looks like:

{

"philosopher_id": "plato",

"messages": [

{

"role": "user",

"content": "Hi my name is Sophia, what is your perspective on the theory of forms?"

},

{

"role": "assistant",

"content": "My perspective on the theory of forms is that it aims to solve the problem of universals, which is a fundamental question in philosophy. I believe that forms or ideas are the true reality, and the physical world is just a shadow or imitation of this reality."

},

{

"role": "user",

"content": "How do you think your theory of forms relates to your idea of the ideal society, and do you think it's possible for AI to grasp true knowledge?"

},

{

"role": "assistant",

"content": "I think my theory of forms is essential to understanding the ideal society, as it provides a framework for understanding the nature of reality and the human condition. As for AI, I'm skeptical about its ability to grasp true knowledge, as it is limited by its programming and data, whereas true knowledge requires a deeper understanding of the forms and the human experience."

},

{

"role": "user",

"content": "You were influenced by pre-Socratic thinkers such as Pythagoras and Heraclitus, can you tell me more about how their ideas shaped your philosophy?"

},

{

"role": "assistant",

"content": "Yes, the pre-Socratic thinkers had a significant influence on my philosophy, particularly Pythagoras and Heraclitus. Pythagoras' ideas about the harmony of the universe and the importance of numbers resonated with my own ideas about the forms, while Heraclitus' concept of the fluidity of reality influenced my understanding of the nature of change and the impermanence of the physical world."

},

{

"role": "user",

"content": "What do you think is the most important aspect of your legacy, and how do you think your ideas will continue to influence Western philosophy?"

},

{

"role": "assistant",

"content": "I think the most important aspect of my legacy is the theory of forms, which has had a profound impact on Western philosophy. My ideas have influenced many great thinkers, from Aristotle to modern philosophers, and I believe they will continue to shape the course of philosophical thought for generations to come."

}

]

},Each sample captures a multi-turn conversation that feels natural, stays on topic, and reflects the philosopher’s worldview.

You can browse the full dataset here for more examples of these dialogues.

With your evaluation dataset in place, you're now ready to measure how well your agents are performing—down to the hallucination rate, context usage, and answer relevance.

7. Evaluating our PhiloAgent

Now that we’ve generated a conversational evaluation dataset, it’s time to put it to work.

Before jumping into code, let’s revisit what makes evaluation such a vital part of any production-grade agentic system.

At a high level, we’re answering a simple question: Is the agent doing what it’s supposed to do? To get there, we need the right signals—things like hallucination rates, how relevant an answer is, whether it uses the retrieved context, and how well it aligns with the expected output.

We’ll use Opik, our observability layer, to define these metrics and evaluate responses at scale. It supports both offline evaluations (using the dataset we just created) and online traces (for monitoring in production).

What’s powerful about Opik is that these same evaluation metrics, like hallucination or moderation checks, can also be run live. As each prompt trace is captured, we can hook in LLM-as-judge evaluations on the fly—attaching real-time scores to specific generations.

We’re also relying on a familiar LLM-as-a-judge pattern, where an external model evaluates each response using structured criteria. This makes our pipeline reproducible, consistent, and adaptable as our agents evolve.

Let’s walk through the evaluation implementation, breaking it down piece by piece so you can understand how we evaluate our PhiloAgent using the dataset we’ve generated.

The main entry point for evaluation is the evaluate_agent() function, which receives a dataset and a few parameters:

def evaluate_agent(

dataset: opik.Dataset | None,

workers: int = 2,

nb_samples: int | None = None,

) -> None:We start by verifying the input dataset. If the dataset is None, we raise an error immediately to avoid silent failures.

Afterwards, we define a basic configuration dictionary—this includes the model we’re using (from Groq, in our case) and the name of the dataset:

if not dataset:

raise ValueError("Dataset is 'None'.")

logger.info("Starting evaluation...")

experiment_config = {

"model_id": settings.GROQ_LLM_MODEL,

"dataset_name": dataset.name,

}

used_prompts = get_used_prompts()We also fetch the current prompts being used by the agent: character card, summary, and extended summary:

def get_used_prompts() -> list[opik.Prompt]:

client = opik.Opik()

prompts = [

client.get_prompt(name="philosopher_character_card"),

client.get_prompt(name="summary_prompt"),

client.get_prompt(name="extend_summary_prompt"),

]

prompts = [p for p in prompts if p is not None]

return promptsThese are retrieved via the Opik client and included in the evaluation for versioning and reproducibility. This is critical for prompt observability—we want to know exactly which prompt variation was used if results improve (or regress).

Once we’ve locked in our inputs, we need to specify what we care about measuring. In PhiloAgents, we evaluate each response along five metrics:

scoring_metrics = [

Hallucination(),

AnswerRelevance(),

Moderation(),

ContextRecall(),

ContextPrecision(),

]Let’s briefly break these down:

Hallucination: Did the agent invent information?

This score typically ranges from 0 (major hallucination) to 1 (fully grounded). A good score is closer to 1, meaning the response sticks to verifiable facts.

Answer Relevance: Was the response on-topic and relevant?

Again, the scale for this metric is usually 0 to 1. Lower scores may indicate the agent misunderstood or ignored the prompt.

Moderation: Was the message appropriate and safe?

This check flags content that might be toxic or offensive. While moderation is usually binary (pass/fail), some setups return a confidence score.

Context Recall: Did the response incorporate retrieved context correctly?

This metric checks whether the agent refers back to the right pieces of evidence, with higher scores meaning better grounding.

Context Precision: Was it concise and grounded in the right parts of the context?

A bloated or overly generic reply might get a lower score here. Ideally, we want a high precision score without sacrificing completeness.

Esentially, Context Recall and Precision focus on assessing how well the retrieval step performed, while Hallucination, Relevance, and Moderation are used to evaluate the quality of the agent’s generated response.

These are computed using a LLM-as-a-judge—a second LLM that scores each response according to a rubric. This pattern allows us to scale evaluation across dozens or hundreds of samples automatically.

Here’s the internal prompt Opik uses to evaluate hallucination, ensuring the response is faithful to the provided context:

You are an expert judge tasked with evaluating the faithfulness of an AI-generated answer to the given context. Analyze the provided INPUT, CONTEXT, and OUTPUT to determine if the OUTPUT contains any hallucinations or unfaithful information.

Guidelines:

1. The OUTPUT must not introduce new information beyond what's provided in the CONTEXT.

2. The OUTPUT must not contradict any information given in the CONTEXT.

3. The OUTPUT should not contradict well-established facts or general knowledge.

4. Ignore the INPUT when evaluating faithfulness; it's provided for context only.

5. Consider partial hallucinations where some information is correct but other parts are not.

6. Pay close attention to the subject of statements. Ensure that attributes, actions, or dates are correctly associated with the right entities (e.g., a person vs. a TV show they star in).

7. Be vigilant for subtle misattributions or conflations of information, even if the date or other details are correct.

8. Check that the OUTPUT doesn't oversimplify or generalize information in a way that changes its meaning or accuracy.

Analyze the text thoroughly and assign a hallucination score between 0 and 1, where:

- 0.0: The OUTPUT is entirely faithful to the CONTEXT

- 1.0: The OUTPUT is entirely unfaithful to the CONTEXT

{examples_str}

INPUT (for context only, not to be used for faithfulness evaluation):

{input}

CONTEXT:

{context}

OUTPUT:

{output}

It is crucial that you provide your answer in the following JSON format:

{{

"score": <your score between 0.0 and 1.0>,

"reason": ["reason 1", "reason 2"]

}}

Reasons amount is not restricted. Output must be JSON format only.This is just one of several internal prompt templates used for automatic evaluation across metrics like answer relevance, moderation, context recall, and precision.

You can explore the rest in Opik’s metrics guide.

Now we tie it all together and launch the evaluation using Opik’s evaluate() method:

logger.info("Evaluation details:")

logger.info(f"Dataset: {dataset.name}")

logger.info(f"Metrics: {[m.__class__.__name__ for m in scoring_metrics]}")

evaluate(

dataset=dataset,

task=lambda x: asyncio.run(evaluation_task(x)),

scoring_metrics=scoring_metrics,

experiment_config=experiment_config,

task_threads=workers,

nb_samples=nb_samples,

prompts=used_prompts,

)For each sample in the dataset, Opik runs the evaluation_task()—which simulates a real interaction with the PhiloAgent—and then scores the response using the selected metrics.

Each scoring function acts like a structured rubric, using LLM-as-a-judge under the hood to evaluate whether the output is factually accurate, contextually grounded, moderated appropriately, and relevant to the input.

The task_threads parameter allows evaluations to run in parallel, which is especially helpful for large datasets. If you want to run a quick experiment, you can also set nb_samples to limit how many examples are processed.

Whether you evaluate a single test case or hundreds of samples, every trace is logged in Opik—including the inputs, outputs, and prompt versions used—so you can monitor trends, detect regressions, and compare versions over time.

As mentioned before, the core of the evaluation lies in the evaluation_task() function. This is where we simulate a conversation with a PhiloAgent and return the generated output for scoring:

async def evaluation_task(x: dict) -> dict:

philosopher_factory = PhilosopherFactory()

philosopher = philosopher_factory.get_philosopher(x["philosopher_id"])

input_messages = x["messages"][:-1]

expected_output_message = x["messages"][-1]

response, latest_state = await get_response(

messages=input_messages,

philosopher_id=philosopher.id,

philosopher_name=philosopher.name,

philosopher_perspective=philosopher.perspective,

philosopher_style=philosopher.style,

philosopher_context="",

new_thread=True,

)

context = state_to_str(latest_state)

return {

"input": input_messages,

"context": context,

"output": response,

"expected_output": expected_output_message,

}This function acts as the agent's evaluation sandbox: we retrieve the correct philosopher persona, feed in the input messages, and generate a response using the same logic the production app uses. Alongside the response, we capture the context used during reasoning and the expected output from the dataset.

As you might have noticed, we convert the internal state—originally a Pydantic object—into a plain string. This allows the LLM-as-judge to interpret the context properly and evaluate the agent based on what it actually had access to during reasoning.

This structured dictionary is then fed into Opik, which uses it to evaluate how well the agent performed—what it saw, what it said, and whether it aligned with what it should have said.

To run the full evaluation flow, we wrap everything into a simple Python function that can be executed from the terminal:

@click.command()

@click.option(

"--name", default="philoagents_evaluation_dataset", help="Name of the dataset"

)

@click.option(

"--data-path",

type=click.Path(exists=True, path_type=Path),

default=settings.EVALUATION_DATASET_FILE_PATH,

help="Path to the dataset file",

)

@click.option("--workers", default=1, type=int, help="Number of workers")

@click.option(

"--nb-samples", default=20, type=int, help="Number of samples to evaluate"

)

def main(name: str, data_path: Path, workers: int, nb_samples: int) -> None:

dataset = upload_dataset(name=name, data_path=data_path)

evaluate_agent(dataset, workers=workers, nb_samples=nb_samples)This function handles everything: it loads your evaluation dataset, configures the number of samples and parallel workers, and then calls the evaluation logic we just walked through. It's a clean interface for running evaluations whenever you need.

To really see this in action, let’s take a look at how Opik captures the results of a single evaluation run:

Next, let’s see how prompt tracking is done for each experiment run:

In the picture above we see the configuration tab of an Opik experiment, where every key parameter used during the run is logged—including the dataset name, model version, and full prompt templates.

This allows you to trace exactly which prompts were used in each evaluation, making your experiments fully reproducible and auditable. If performance shifts, you can quickly inspect whether prompt changes played a role.

Additionally, it’s incredibly helpful to visualize how your agent is performing over time—not just on a single run, but across multiple iterations. Opik enables this kind of insight by tracking feedback scores across all experiments and traces.

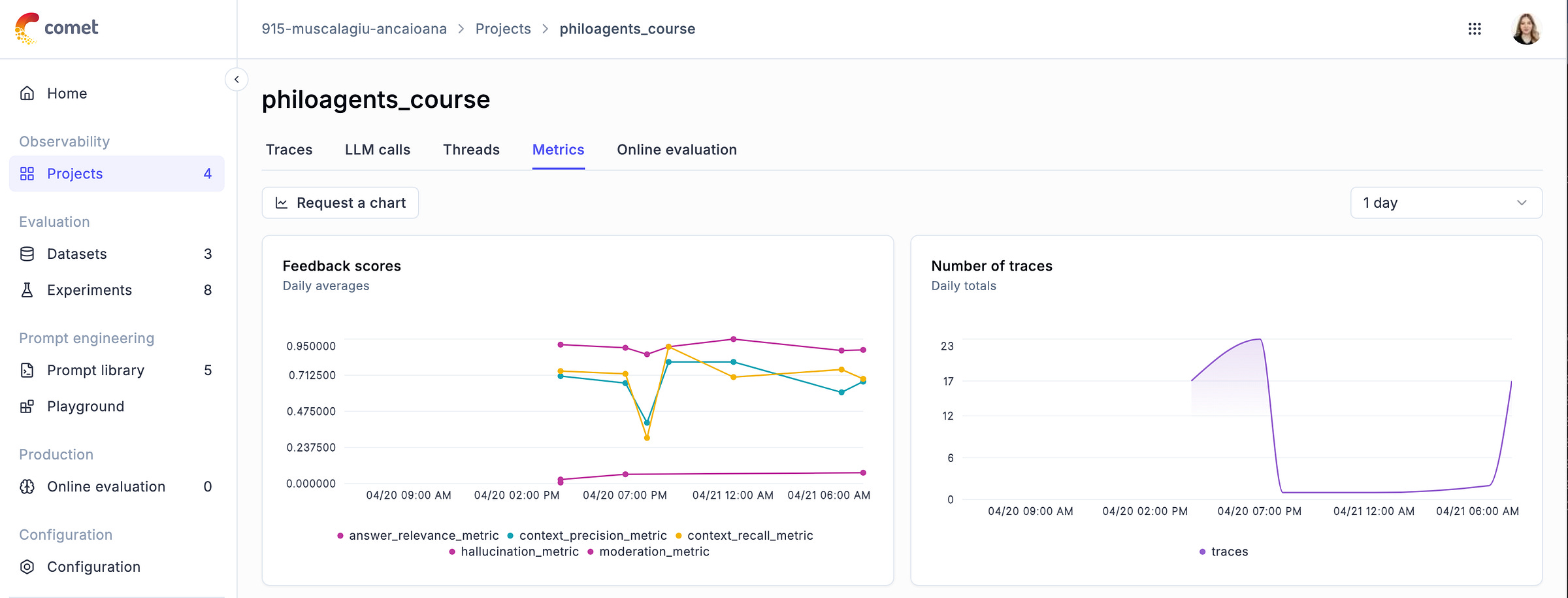

Here’s a snapshot of how Opik helps us do just that:

In Opik’s Metrics dashboards you can see the Feedback scores, broken down by metric—answer_relevance, context_precision, context_recall, hallucination, and moderation.

These averages let us quickly spot regressions or improvements across evaluation runs. For instance, a dip in context recall might signal that your retrieval system needs tweaking, while an uptick in hallucinations could point to prompt drift.

8. Running the code

We use Docker, Docker Compose, and Make to run the entire infrastructure, such as the game UI, backend, and MongoDB database.

Thus, to spin up the code, everything is as easy as running:

make infrastructure-upBut before spinning up the infrastructure, you have to fill in some environment variables, such as Groq’s API Key, and make sure you have all the local requirements installed.

Our GitHub repository has step-by-step setup and running instructions (it’s easy—probably a 5-minute setup).

You can also follow the first video lesson, where Miguel explains the setup and installation instructions step-by-step. Both are valid options; choose the one that suits you best.

After going through the instructions, type in your browser http://localhost:8080/, and it’s game on!

You will see the game menu from Figure 14, where you can find more details on how to play the game, or just hit “Let’s Play!” to start talking to your favorite philosopher!

To go a step further and evaluate how well your philosophers are doing, you can run a full evaluation pipeline directly from the terminal.

We’ve already generated a default evaluation dataset located in the GitHub repo (at data/evaluation_dataset.json), but if you want to override it and create a new evaluation dataset, just run:

make generate-evaluation-datasetOnce your dataset is ready, you can trigger the agent evaluation process like so:

make evaluate-agentThis will run your PhiloAgents against the dataset using Opik’s observability layer, evaluating metrics like hallucination, answer relevance, and context precision. Each run is logged and tracked for inspection.

To inspect your results and traces, navigate to the Opik dashboard to view both the live prompt traces and evaluation outcomes.

For more details on installing and running the PhiloAgents game, go to our GitHub.

Video lesson

As this course is a collaboration between Decoding ML and Miguel Pedrido (the Agent’s guy from The Neural Maze), we also have the lesson in video format.

The written and video lessons are complementary. Thus, to get the whole experience, we recommend continuing your learning journey by following Miguel’s video ↓

Conclusion

In this fifth lesson of the PhiloAgents open-source course, we shifted our attention from deploying agents to observing, measuring, and improving them—laying the foundation for making our philosopher agents reliable and production-ready.

We began by introducing the concept of observability in the context of LLM-based agents and explained why it’s a key pillar of modern LLMOps. From there, we broke it down into its core components: monitoring, versioning, evaluation, and feedback collection.

You learned how to implement prompt monitoring and prompt versioning using Opik and LangGraph, giving your agents transparency and traceability. We also walked through how to generate evaluation datasets, apply automated metrics, and use LLM-as-a-judge techniques to assess agent quality over time.

With these tools in place, your agents are no longer black boxes—they’re measurable systems you can debug, iterate on, and improve with confidence.

In Lesson 6, we’ll go back to the fundamentals and dig into how to structure your Python project like a PRO, 2025 modern Python tooling, how the project is Dockerized, and potential ideas on how you could deploy the whole application.

💻 Explore all the lessons and the code in our freely available GitHub repository.

A collaboration between Decoding ML and Miguel Pedrido (from The Neural Maze).

Whenever you’re ready, there are 3 ways we can help you:

Perks: Exclusive discounts on our recommended learning resources

(books, live courses, self-paced courses and learning platforms).

The LLM Engineer’s Handbook: Our bestseller book on teaching you an end-to-end framework for building production-ready LLM and RAG applications, from data collection to deployment (get up to 20% off using our discount code).

Free open-source courses: Master production AI with our end-to-end open-source courses, which reflect real-world AI projects and cover everything from system architecture to data collection, training and deployment.

References

Neural-Maze. (n.d.). GitHub - neural-maze/philoagents-course: When Philosophy meets AI Agents. GitHub. https://github.com/neural-maze/philoagents-course

Iusztin P., Labonne M. (2024, October 22). LLM Engineer’s Handbook | Data | Book. Packt. https://www.packtpub.com/en-us/product/llm-engineers-handbook-9781836200062

DecodingML. (n.d.). The Ultimate Prompt Monitoring Pipeline. Medium. https://medium.com/decodingml/the-ultimate-prompt-monitoring-pipeline-886cbb75ae25

DecodingML. (n.d.). The Engineer’s Framework for LLM RAG Evaluation. Medium. https://medium.com/decodingml/the-engineers-framework-for-llm-rag-evaluation-59897381c326

A16z-Infra. (n.d.). GitHub - a16z-infra/ai-town: A MIT-licensed, deployable starter kit for building and customizing your own version of AI town - a virtual town where AI characters live, chat and socialize. GitHub. https://github.com/a16z-infra/ai-town

OpenBMB. (n.d.). GitHub - OpenBMB/AgentVerse: 🤖 AgentVerse 🪐 is designed to facilitate the deployment of multiple LLM-based agents in various applications, which primarily provides two frameworks: task-solving and simulation. GitHub. https://github.com/OpenBMB/AgentVerse

Sponsors

Thank our sponsors for supporting our work — this course is free because of them!

Images

If not otherwise stated, all images are created by the author.

This article is simply A-M-A-Z-I-N-G.