7 tips to reduce your VRAM when training LLMs

3 techniques you must know to evaluate your LLMs. Introduction to deploying private LLMs with AWS SageMaker.

Decoding ML Notes

This week’s topics:

3 techniques you must know to evaluate your LLMs

7 tips you must know to reduce your VRAM consumption of your LLMs during training

Introduction to deploying private LLMs with AWS SageMaker

On the 3rd of May, I 𝗵𝗼𝘀𝘁𝗲𝗱 a 𝗳𝗿𝗲𝗲 𝘀𝗲𝘀𝘀𝗶𝗼𝗻 on Maven for 𝟵𝟰 𝗽𝗲𝗼𝗽𝗹𝗲 on how to 𝗔𝗿𝗰𝗵𝗶𝘁𝗲𝗰𝘁 𝗬𝗼𝘂𝗿 𝗟𝗟𝗠 𝗧𝘄𝗶𝗻. If you missed it, here is 𝗵𝗼𝘄 you can 𝗮𝗰𝗰𝗲𝘀𝘀 𝗶𝘁 for 𝗳𝗿𝗲𝗲 ↓

𝘒𝘦𝘺 𝘵𝘢𝘬𝘦𝘢𝘸𝘢𝘺𝘴 𝘸𝘦𝘳𝘦:

→ Why I started building my LLM Twin

→ The 3 pipeline design / The FTI pipeline architecture

→ System design of the LLM Twin Architecture

→ Break down the RAG system of the LLM Twin Architecture

→ Live Demo

If you want the recording, you can watch it for free here: https://bit.ly/3PZGV0S

𝘈𝘭𝘴𝘰, 𝘩𝘦𝘳𝘦 𝘢𝘳𝘦 𝘰𝘵𝘩𝘦𝘳 𝘶𝘴𝘦𝘧𝘶𝘭 𝘭𝘪𝘯𝘬𝘴:

- 𝘴𝘭𝘪𝘥𝘦𝘴: 🔗 https://lnkd.in/d_MdqGwS

- 𝘓𝘓𝘔 𝘛𝘸𝘪𝘯 𝘤𝘰𝘶𝘳𝘴𝘦 𝘎𝘪𝘵𝘏𝘶𝘣: 🔗 https://lnkd.in/dzat6PB6

- 𝘓𝘓𝘔 𝘛𝘸𝘪𝘯 𝘍𝘙𝘌𝘌 𝘭𝘦𝘴𝘴𝘰𝘯𝘴: 🔗 https://lnkd.in/dX__4mhX

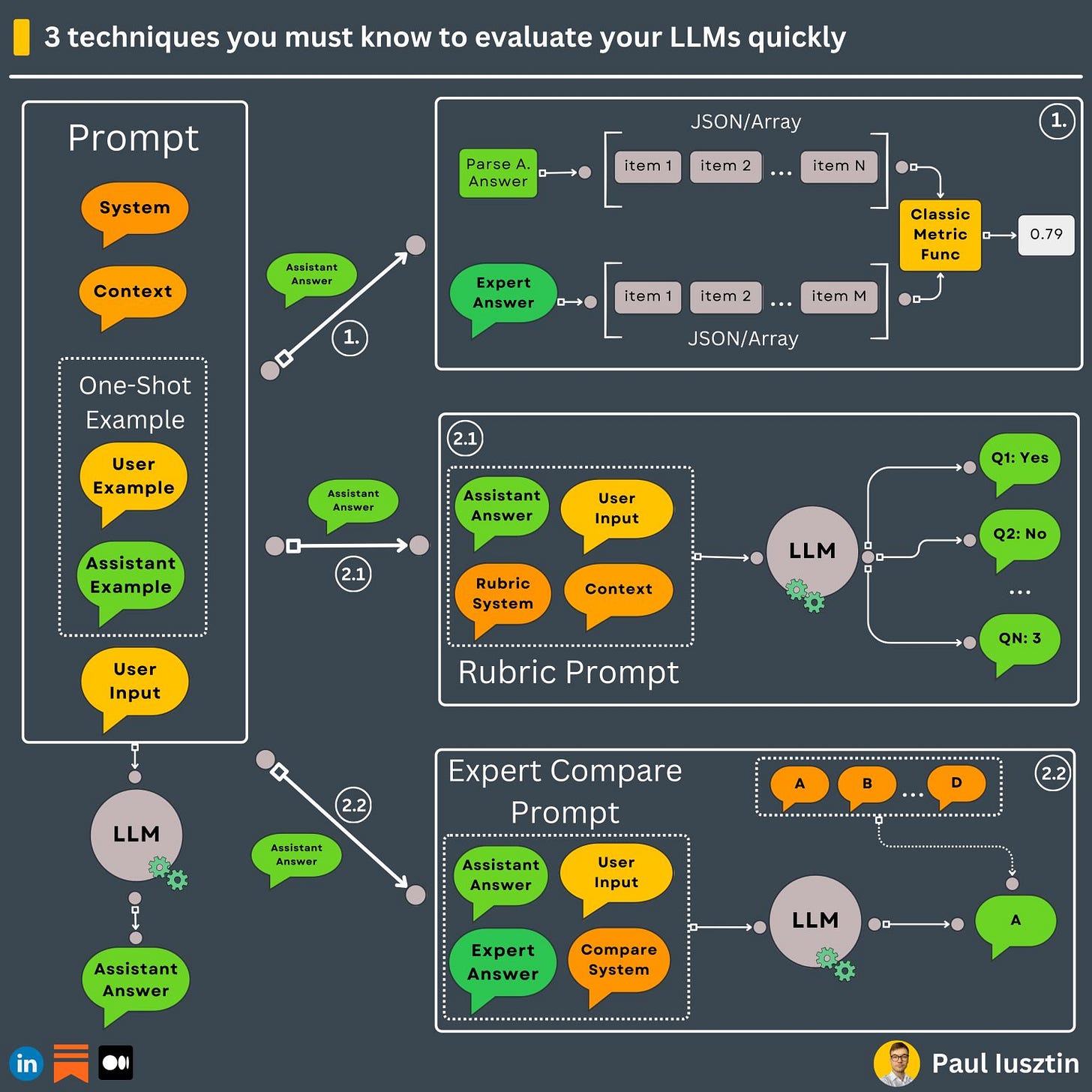

3 techniques you must know to evaluate your LLMs

Here are 3 techniques you must know to evaluate your LLMs quickly.

Manually testing the output of your LLMs is a tedious and painful process → you need to automate it.

In generative AI, most of the time, you cannot leverage standard metrics.

Thus, the real question is, how do you evaluate the outputs of an LLM?

#𝟭. 𝗦𝘁𝗿𝘂𝗰𝘁𝘂𝗿𝗲𝗱 𝗮𝗻𝘀𝘄𝗲𝗿𝘀 - 𝘆𝗼𝘂 𝗸𝗻𝗼𝘄 𝗲𝘅𝗮𝗰𝘁𝗹𝘆 𝘄𝗵𝗮𝘁 𝘆𝗼𝘂 𝘄𝗮𝗻𝘁 𝘁𝗼 𝗴𝗲𝘁

Even if you use an LLM to generate text, you can ask it to generate a response in a structured format (e.g., JSON) that can be parsed.

You know exactly what you want (e.g., a list of products extracted from the user's question).

Thus, you can easily compare the generated and ideal answers using classic approaches.

For example, when extracting the list of products from the user's input, you can do the following:

- check if the LLM outputs a valid JSON structure

- use a classic method to compare the generated and real answers
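The two checks above can be sketched in a few lines of plain Python. This is only an illustration: the function names `parse_products` and `score_products`, and the choice of precision/recall as the "classic method", are assumptions and not part of the original workflow.

```python
import json
from typing import List, Optional


def parse_products(raw_output: str) -> Optional[List[str]]:
    """Check 1: return the product list if the LLM produced a valid JSON list of strings."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError:
        return None
    if not isinstance(data, list) or not all(isinstance(p, str) for p in data):
        return None
    return data


def score_products(generated: List[str], expected: List[str]) -> dict:
    """Check 2: compare generated and ideal answers with set-based precision/recall."""
    gen = {p.strip().lower() for p in generated}
    exp = {p.strip().lower() for p in expected}
    hits = len(gen & exp)
    return {
        "precision": hits / len(gen) if gen else 0.0,
        "recall": hits / len(exp) if exp else 0.0,
    }


# Hypothetical LLM output and ideal answer
llm_output = '["laptop", "Mouse"]'
ideal = ["laptop", "mouse", "keyboard"]

products = parse_products(llm_output)
scores = score_products(products, ideal) if products is not None else None
```

An output that fails to parse returns `None`, so you can count it as a failure before any comparison happens.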

#𝟮. 𝗡𝗼 "𝗿𝗶𝗴𝗵𝘁" 𝗮𝗻𝘀𝘄𝗲𝗿 (𝗲.𝗴., 𝗴𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝗻𝗴 𝗱𝗲𝘀𝗰𝗿𝗶𝗽𝘁𝗶𝗼𝗻𝘀, 𝘀𝘂𝗺𝗺𝗮𝗿𝗶𝗲𝘀, 𝗲𝘁𝗰.)

When generating sentences, the LLM can use different styles, words, etc. Thus, traditional metrics (e.g., BLEU score) are too rigid to be useful.

You can leverage another LLM to test the output of your initial LLM. The trick is in what questions to ask.

Here, we have 2 sub-scenarios:

↳ 𝟮.𝟭 𝗪𝗵𝗲𝗻 𝘆𝗼𝘂 𝗱𝗼𝗻'𝘁 𝗵𝗮𝘃𝗲 𝗮𝗻 𝗶𝗱𝗲𝗮𝗹 𝗮𝗻𝘀𝘄𝗲𝗿 𝘁𝗼 𝗰𝗼𝗺𝗽𝗮𝗿𝗲 𝘁𝗵𝗲 𝗮𝗻𝘀𝘄𝗲𝗿 𝘁𝗼 (𝘆𝗼𝘂 𝗱𝗼𝗻'𝘁 𝗵𝗮𝘃𝗲 𝗴𝗿𝗼𝘂𝗻𝗱 𝘁𝗿𝘂𝘁𝗵)

You don't have access to an expert to write an ideal answer for a given question to compare it to.

Based on the initial prompt and generated answer, you can compile a set of questions and pass them to an LLM. Usually, these are Y/N questions whose answers you can easily quantify and use to check the validity of the generated answer.

This is known as "Rubric Evaluation".

For example:

"""

- Is there any disagreement between the response and the context? (Y or N)

- Count how many questions the user asked. (output a number)

...

"""

This strategy is intuitive, as you can ask the LLM any question you are interested in as long as it can output a quantifiable answer (Y/N or a number).
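A rubric evaluation like the one above could look roughly like this. It is a minimal sketch under a few assumptions: the judge LLM is any callable that takes a prompt and returns text, it is instructed to answer one question per line, and the helper names (`build_rubric_prompt`, `parse_rubric_answers`, `evaluate`) are made up for the example.

```python
from typing import Callable, List, Union

RUBRIC_QUESTIONS = [
    "Is there any disagreement between the response and the context? (Y or N)",
    "Count how many questions the user asked. (output a number)",
]


def build_rubric_prompt(user_prompt: str, context: str, response: str,
                        questions: List[str]) -> str:
    """Pack the material to judge and the rubric questions into one prompt."""
    numbered = "\n".join(f"{i}. {q}" for i, q in enumerate(questions, start=1))
    return (
        f"User prompt:\n{user_prompt}\n\n"
        f"Context:\n{context}\n\n"
        f"Response:\n{response}\n\n"
        "Answer each question on its own line with only Y, N, or a number:\n"
        f"{numbered}"
    )


def parse_rubric_answers(raw: str, expected_count: int) -> List[Union[bool, int]]:
    """Turn the judge's lines into booleans (Y/N) or integers."""
    answers: List[Union[bool, int]] = []
    for line in raw.splitlines():
        token = line.strip().upper()
        if not token:
            continue
        if token in ("Y", "N"):
            answers.append(token == "Y")
        else:
            answers.append(int(token))  # raises ValueError on free-form text
    if len(answers) != expected_count:
        raise ValueError(f"expected {expected_count} answers, got {len(answers)}")
    return answers


def evaluate(judge: Callable[[str], str], user_prompt: str,
             context: str, response: str) -> List[Union[bool, int]]:
    prompt = build_rubric_prompt(user_prompt, context, response, RUBRIC_QUESTIONS)
    return parse_rubric_answers(judge(prompt), len(RUBRIC_QUESTIONS))
```

Because the judge is just a callable, you can plug in a real LLM client in production and a canned function in unit tests.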

↳ 𝟮.𝟮. 𝗪𝗵𝗲𝗻 𝘆𝗼𝘂 𝗱𝗼 𝗵𝗮𝘃𝗲 𝗮𝗻 𝗶𝗱𝗲𝗮𝗹 𝗮𝗻𝘀𝘄𝗲𝗿 𝘁𝗼 𝗰𝗼𝗺𝗽𝗮𝗿𝗲 𝘁𝗵𝗲 𝗿𝗲𝘀𝗽𝗼𝗻𝘀𝗲 𝘁𝗼 (𝘆𝗼𝘂 𝗵𝗮𝘃𝗲 𝗴𝗿𝗼𝘂𝗻𝗱 𝘁𝗿𝘂𝘁𝗵)

When you have access to an answer manually created by a group of experts, things are easier.

You will use an LLM to compare the generated and ideal answers based on semantics, not structure.

For example:

"""

(A) The submitted answer is a subset of the expert answer and entirely consistent.

...

(E) The answers differ, but these differences don't matter.

"""

7 tips you must know to reduce your VRAM consumption of your LLMs during training

Here are 𝟳 𝘁𝗶𝗽𝘀 you must know to 𝗿𝗲𝗱𝘂𝗰𝗲 your 𝗩𝗥𝗔𝗠 𝗰𝗼𝗻𝘀𝘂𝗺𝗽𝘁𝗶𝗼𝗻 of your 𝗟𝗟𝗠𝘀 during 𝘁𝗿𝗮𝗶𝗻𝗶𝗻𝗴 so you can 𝗳𝗶𝘁 it on 𝘅𝟭 𝗚𝗣𝗨.

𝟭. 𝗠𝗶𝘅𝗲𝗱-𝗽𝗿𝗲𝗰𝗶𝘀𝗶𝗼𝗻: During training, you use both FP32 and FP16 in the following way: "FP32 weights" -> "FP16 weights" -> "FP16 gradients" -> "FP32 gradients" -> "Update weights" -> "FP32 weights" (and repeat). As you can see, the forward & backward passes are done in FP16, and only the optimization step is done in FP32, which reduces both the VRAM and runtime. The VRAM savings come mainly from the activations, since an FP32 copy of the weights is still kept.

𝟮. 𝗟𝗼𝘄𝗲𝗿-𝗽𝗿𝗲𝗰𝗶𝘀𝗶𝗼𝗻: All your computations are done in a 16-bit format instead of FP32. The key is using bfloat16 ("Brain Floating Point"), a numerical representation Google developed for deep learning. It keeps the same exponent range as FP32 while giving up some mantissa precision, so it can represent very large and very small numbers and avoids the overflow and underflow that plain FP16 is prone to.

𝟯. 𝗥𝗲𝗱𝘂𝗰𝗶𝗻𝗴 𝘁𝗵𝗲 𝗯𝗮𝘁𝗰𝗵 𝘀𝗶𝘇𝗲: This one is straightforward. Fewer samples per training iteration result in smaller VRAM requirements. The downside of this method is that you can't go too low with your batch size without impacting your model's performance.

𝟰. 𝗚𝗿𝗮𝗱𝗶𝗲𝗻𝘁 𝗮𝗰𝗰𝘂𝗺𝘂𝗹𝗮𝘁𝗶𝗼𝗻: It is a simple & powerful trick to increase your batch size virtually. You compute the gradients for "micro" batches (forward + backward passes) and add them up. Once enough micro-batches have been processed to reach the "virtual" batch size, the model weights are updated with the accumulated gradients. For example, you have a batch size of 4 and a micro-batch size of 1. Then, the forward & backward passes will be done using only 1 sample at a time, and the optimization step will be done using the aggregated gradient of the 4 samples.

𝟱. 𝗨𝘀𝗲 𝗮 𝘀𝘁𝗮𝘁𝗲𝗹𝗲𝘀𝘀 𝗼𝗽𝘁𝗶𝗺𝗶𝘇𝗲𝗿: Adam is the most popular optimizer. It is one of the most stable optimizers, but the downside is that it stores 2 additional values (running estimates of the gradient's mean & variance) for every model parameter. If you use a stateless optimizer, such as SGD without momentum, you can reduce the number of values kept for the weights and optimizer state by 2/3, which is significant for LLMs.

𝟲. 𝗚𝗿𝗮𝗱𝗶𝗲𝗻𝘁 (𝗼𝗿 𝗮𝗰𝘁𝗶𝘃𝗮𝘁𝗶𝗼𝗻) 𝗰𝗵𝗲𝗰𝗸𝗽𝗼𝗶𝗻𝘁𝗶𝗻𝗴: It drops specific activations during the forward pass and recomputes them during the backward pass. Thus, it eliminates the need to hold all activations simultaneously in VRAM. This technique reduces VRAM consumption but makes the training slower.

𝟳. 𝗖𝗣𝗨 𝗽𝗮𝗿𝗮𝗺𝗲𝘁𝗲𝗿 𝗼𝗳𝗳𝗹𝗼𝗮𝗱𝗶𝗻𝗴: The parameters that do not fit in your GPU's VRAM are kept in CPU RAM. Intuitively, you can see it as a form of model parallelism between your GPU & CPU.
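To make tip 4 (gradient accumulation) concrete, here is a toy sketch in plain Python. It assumes a one-parameter linear model with a mean-squared-error loss and made-up data, and it only aims to show that accumulating micro-batch gradients gives the same update as one large batch.

```python
def grad_mse(w: float, batch: list) -> float:
    """Gradient of mean((w * x - y) ** 2) with respect to w over one batch."""
    return sum(2 * (w * x - y) * x for x, y in batch) / len(batch)


# Hypothetical dataset of (x, y) pairs; this is the "virtual" batch of 4 samples.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0)]
w = 0.0
learning_rate = 0.01

# Reference: one forward/backward pass over the full batch of 4.
full_batch_grad = grad_mse(w, data)

# Gradient accumulation: 4 micro-batches of 1 sample each.
accumulation_steps = 4
accumulated_grad = 0.0
for sample in data:
    micro_batch = [sample]
    # Scale each micro-batch gradient so the sum equals the full-batch mean.
    accumulated_grad += grad_mse(w, micro_batch) / accumulation_steps

# A single optimizer step, taken only after all micro-batches were processed.
w_updated = w - learning_rate * accumulated_grad
```

Only one sample's activations need to be in memory at any time, yet the weight update matches the one a batch size of 4 would produce.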

Most of these methods are orthogonal, so you can combine them and drastically reduce your VRAM requirements during training.
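As a rough back-of-the-envelope check of how tips 2 and 5 combine, the sketch below estimates the memory needed for the weights plus the optimizer state. The 7B parameter count is a hypothetical example, and the estimate deliberately ignores gradients, activations, and framework overhead.

```python
GIB = 1024 ** 3


def training_state_gib(num_params: int, bytes_per_value: int,
                       optimizer_states_per_param: int) -> float:
    """Memory for weights + optimizer state only (no gradients or activations)."""
    values_per_param = 1 + optimizer_states_per_param
    return num_params * values_per_param * bytes_per_value / GIB


NUM_PARAMS = 7_000_000_000  # hypothetical 7B-parameter model

adam_fp32 = training_state_gib(NUM_PARAMS, bytes_per_value=4, optimizer_states_per_param=2)
sgd_fp32 = training_state_gib(NUM_PARAMS, bytes_per_value=4, optimizer_states_per_param=0)
sgd_bf16 = training_state_gib(NUM_PARAMS, bytes_per_value=2, optimizer_states_per_param=0)

for name, gib in [("Adam, FP32", adam_fp32), ("SGD, FP32", sgd_fp32), ("SGD, bfloat16", sgd_bf16)]:
    print(f"{name}: {gib:.1f} GiB")
```

Dropping Adam's two states removes 2/3 of these values, and moving from 4-byte to 2-byte values halves what remains.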

Introduction to deploying private LLMs with AWS SageMaker

Ever wondered 𝗵𝗼𝘄 to 𝗱𝗲𝗽𝗹𝗼𝘆 𝗼𝗽𝗲𝗻-𝘀𝗼𝘂𝗿𝗰𝗲 𝗟𝗟𝗠𝘀, such as 𝗟𝗹𝗮𝗺𝗮𝟮, on 𝗔𝗪𝗦 𝗦𝗮𝗴𝗲𝗠𝗮𝗸𝗲𝗿 in <𝟯𝟬 𝗺𝗶𝗻𝘂𝘁𝗲𝘀? Then wonder no more ↓

Step 1: Deploy the LLM to AWS SageMaker

The sweet thing about SageMaker is that it accelerates the development process, enabling a more efficient and rapid transition to the production stage.

The article walks you through how to:

- design a config class for the deployment of the LLM

- set up AWS and deploy the LLM to SageMaker

- implement an inference class to call the deployed LLM in real time through a web endpoint

- define a prompt template function to ensure reproducibility & consistency

...and, ultimately, how to play around with your freshly deployed LLM yourself.

Here is the full article explaining how to deploy the LLM to AWS SageMaker ↓

Step 2: Call the SageMaker inference endpoint

You've just deployed your Mistral LLM to SageMaker.

𝘕𝘰𝘸 𝘸𝘩𝘢𝘵?

Unfortunately, you are not done.

That was just the beginning of the journey.

→ Now, you have to write a Python client that calls the LLM.

𝗟𝗲𝘁'𝘀 𝘂𝘀𝗲 𝗮 𝗱𝗼𝗰𝘂𝗺𝗲𝗻𝘁 𝘀𝘂𝗺𝗺𝗮𝗿𝘆 𝘁𝗮𝘀𝗸 𝗮𝘀 𝗮𝗻 𝗲𝘅𝗮𝗺𝗽𝗹𝗲.

↓↓↓

𝗦𝘁𝗲𝗽 𝟭: Define a Settings object using 𝘱𝘺𝘥𝘢𝘯𝘵𝘪𝘤.

𝗦𝘁𝗲𝗽 𝟮: Create an inference interface that inherits from 𝘈𝘉𝘊.

𝗦𝘁𝗲𝗽 𝟯: Implement an 𝘈𝘞𝘚 𝘚𝘢𝘨𝘦𝘔𝘢𝘬𝘦𝘳 version of the inference interface by specifying how to construct the HTTP payload and call the SageMaker endpoint. We want to keep this class independent from the summarization prompt!

𝗦𝘁𝗲𝗽 𝟰: Create the summarization prompt.

𝗦𝘁𝗲𝗽 𝟱: Encapsulate the summarization prompt and Python SageMaker client into a 𝘚𝘶𝘮𝘮𝘢𝘳𝘪𝘻𝘦𝘚𝘩𝘰𝘳𝘵𝘋𝘰𝘤𝘶𝘮𝘦𝘯𝘵 task.

𝗦𝘁𝗲𝗽 𝟲: Wrap the 𝘚𝘶𝘮𝘮𝘢𝘳𝘪𝘻𝘦𝘚𝘩𝘰𝘳𝘵𝘋𝘰𝘤𝘶𝘮𝘦𝘯𝘵 task with a FastAPI endpoint.

...and bam!

You have an LLM for summarizing any document.
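Steps 2 to 5 could be sketched roughly as follows. This is not the code from the full article: the class names other than `SummarizeShortDocument`, the prompt text, and the request/response schema (a Hugging Face text-generation style payload) are all assumptions. The pydantic Settings object (step 1) and the FastAPI wrapper (step 6) are left out to keep the sketch dependency-free, and `boto3` is imported lazily so everything except the real endpoint call runs without AWS.

```python
import json
from abc import ABC, abstractmethod


class Inference(ABC):
    """Step 2: the interface every LLM backend implements."""

    @abstractmethod
    def generate(self, prompt: str) -> str:
        ...


class SageMakerInference(Inference):
    """Step 3: knows how to call the endpoint and nothing about summarization."""

    def __init__(self, endpoint_name: str, max_new_tokens: int = 256) -> None:
        self.endpoint_name = endpoint_name
        self.max_new_tokens = max_new_tokens

    def build_payload(self, prompt: str) -> dict:
        # Assumed request schema; adapt it to the container you deployed.
        return {"inputs": prompt, "parameters": {"max_new_tokens": self.max_new_tokens}}

    def generate(self, prompt: str) -> str:
        import boto3  # lazy import: only needed for real endpoint calls

        client = boto3.client("sagemaker-runtime")
        response = client.invoke_endpoint(
            EndpointName=self.endpoint_name,
            ContentType="application/json",
            Body=json.dumps(self.build_payload(prompt)),
        )
        result = json.loads(response["Body"].read())
        # Assumed response schema: [{"generated_text": "..."}]
        return result[0]["generated_text"]


# Step 4: the prompt lives outside the inference class (hypothetical wording).
SUMMARY_PROMPT = (
    "Summarize the following document in a few sentences.\n\n"
    "Document:\n{document}\n\nSummary:"
)


class SummarizeShortDocument:
    """Step 5: combines the prompt with any Inference implementation."""

    def __init__(self, llm: Inference) -> None:
        self.llm = llm

    def run(self, document: str) -> str:
        return self.llm.generate(SUMMARY_PROMPT.format(document=document))


class FakeInference(Inference):
    """Stand-in backend for local tests; records the prompt it receives."""

    def __init__(self) -> None:
        self.last_prompt = ""

    def generate(self, prompt: str) -> str:
        self.last_prompt = prompt
        return "fake summary"
```

Swapping `FakeInference()` for `SageMakerInference("your-endpoint-name")` (a placeholder name) is the only change needed to hit a real endpoint, which is the point of coding the task against the interface.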

𝗛𝗲𝗿𝗲 𝗮𝗿𝗲 𝘀𝗼𝗺𝗲 𝗮𝗱𝘃𝗮𝗻𝘁𝗮𝗴𝗲𝘀 𝗼𝗳 𝘁𝗵𝗲 𝗱𝗲𝘀𝗶𝗴𝗻 𝗱𝗲𝘀𝗰𝗿𝗶𝗯𝗲𝗱 𝗮𝗯𝗼𝘃𝗲:

- by using an inference interface, you can quickly swap the LLM implementation

- by decoupling the prompt construction logic from the inference class, you can reuse the inference client with any prompt

- by wrapping everything with a 𝘚𝘶𝘮𝘮𝘢𝘳𝘪𝘻𝘦𝘚𝘩𝘰𝘳𝘵𝘋𝘰𝘤𝘶𝘮𝘦𝘯𝘵 task, you can quickly define & configure multiple types of tasks and leverage polymorphism to run them

Here is the full article explaining how to design the inference module ↓

Images

If not otherwise stated, all images are created by the author.