AI aggregation engine behind Square & Coinbase

My 2025 prediction on RAG, semantic search, and retrieval systems

This week’s topics:

The AI aggregation engine behind Square & Coinbase

Want to shave a few years off your MLOps learning curve?

My 2025 prediction on RAG, semantic search, and retrieval systems

The AI aggregation engine behind Square & Coinbase

The biggest challenge when deploying ML models to production is accessing features in real time.

This gets incredibly complicated when working with time-anchored data that requires time window aggregation features, as you must constantly update millions of aggregates in real time.

This creates serious challenges for latency (serving features in near real time) and memory (keeping millions of data points in RAM).

Implementing window aggregations requires careful engineering.

To learn from real-world examples, let’s look at how Tecton solved the time window aggregation problem to power high-frequency platforms such as Square and Coinbase.

First, let’s look at how time window aggregations work.

They are defined by:

Boundaries: start and end limits of the window

Movement: how the window shifts over time

There are 3 types of time window boundaries:

Fixed (e.g., aggregate data from the last 6 days into a single value)

Lifetime (aggregate the whole history rather than a fixed period)

Time series (e.g., aggregate data from the past 6 days into a vector of length 3, where each element aggregates a separate 2-day sub-window)
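To make the three boundary types concrete, here is a minimal plain-Python sketch over toy `(timestamp, amount)` events. It is not Tecton code: the function names are hypothetical, and treating each window as the half-open interval (start, end] is an assumption of the sketch.

```python
from datetime import datetime, timedelta

# Toy events as (timestamp, amount) pairs; windows are treated as (start, end].


def fixed_window_sum(events, now, window):
    """Fixed boundary: a single value aggregating the last `window` of data."""
    start = now - window
    return sum(amount for ts, amount in events if start < ts <= now)


def lifetime_sum(events, now):
    """Lifetime boundary: aggregate the whole history up to `now`."""
    return sum(amount for ts, amount in events if ts <= now)


def time_series_sums(events, now, window, step):
    """Time-series boundary: one aggregate per `step`-long sub-window, oldest first."""
    n = window // step  # e.g., 6 days // 2 days -> 3 sub-windows
    sums = []
    for i in range(n):
        sub_start = now - window + i * step
        sub_end = sub_start + step
        sums.append(sum(amount for ts, amount in events if sub_start < ts <= sub_end))
    return sums


# One event per day from Jan 1 to Jan 7, with amount equal to the day number.
events = [(datetime(2025, 1, day), float(day)) for day in range(1, 8)]
now = datetime(2025, 1, 7)
print(fixed_window_sum(events, now, timedelta(days=6)))  # 27.0
print(lifetime_sum(events, now))  # 28.0
print(time_series_sums(events, now, timedelta(days=6), timedelta(days=2)))  # [5.0, 9.0, 13.0]
```

The same six days of data yield one number for the fixed window and a length-3 vector for the time-series window, while the lifetime window also picks up the Jan 1 event.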

Also, there are 3 types of window movements:

Sliding: Moves continuously forward in time as new events arrive, aggregating data over a fixed window duration (real-time data freshness)

Hopping: Moves forward in time by a fixed interval, so consecutive windows overlap (the overlapping tiles are cached for faster queries and more efficient computation, at the cost of small delays in data freshness)

Sawtooth: Moves like the hopping window, but instead of having a fixed size, it aggregates values in real time from the start until the maximum window size is reached (best of both worlds: real-time data freshness and faster query-time performance)
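A minimal sketch of how sliding and hopping windows choose their boundaries at query time. It assumes hop boundaries are aligned to the Unix epoch; the helper names are hypothetical and not part of any Tecton API.

```python
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def floor_to(ts: datetime, step: timedelta) -> datetime:
    """Round `ts` down to a multiple of `step`, counted from the Unix epoch."""
    return EPOCH + ((ts - EPOCH) // step) * step


def sliding_bounds(query_time, window):
    """Sliding: the window always ends at query time, so new events are visible at once."""
    return query_time - window, query_time


def hopping_bounds(query_time, window, hop):
    """Hopping: the window ends at the last hop boundary and advances in `hop`-sized
    steps, so the same precomputed tiles can be reused by many queries."""
    end = floor_to(query_time, hop)
    return end - window, end


q = datetime(2025, 1, 1, 12, 40)
print(sliding_bounds(q, timedelta(hours=1)))
print(hopping_bounds(q, timedelta(hours=1), timedelta(minutes=15)))
```

With a 1-hour window and 15-minute hops queried at 12:40, the sliding window covers 11:40 to 12:40, while the hopping window covers 11:30 to 12:30. Events after 12:30 wait for the next hop, which is the small freshness delay of hopping windows.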

Next, the real trick is in implementing these techniques.

Here, Tecton’s ingenuity kicks in.

This is how it implemented time window aggregations to power its feature platform. ↓

As Tecton supports different data sources, we look at 2 implementations: batch and streaming.

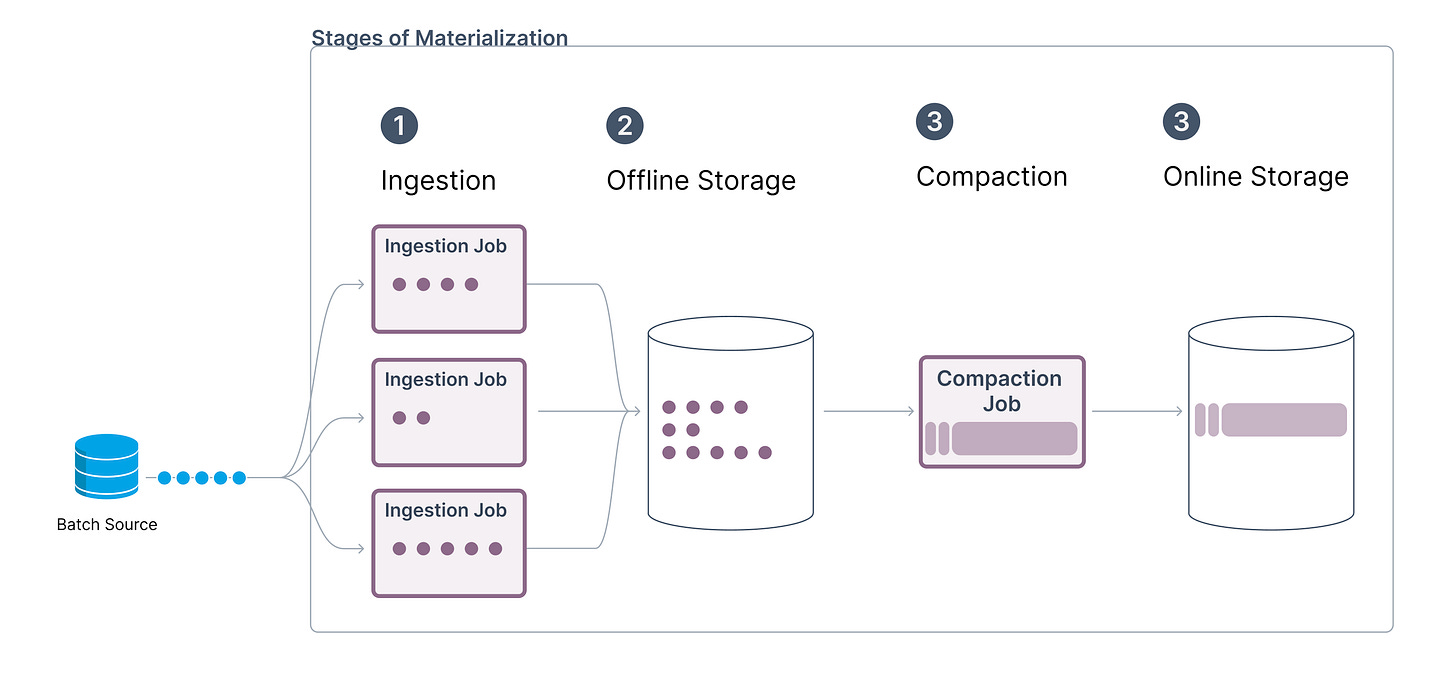

1. Batch

It uses the hopping window movement, where…

It aggregates and materializes data at regular intervals, defined by an `aggregation_interval` that dictates the size of each partial aggregation tile.

For example, for a time window of 3 hours with an `aggregation_interval` of 1 hour, we have 3 tiles of 1 hour combined into our final window aggregate.
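The tiling idea can be sketched in plain Python: events are pre-aggregated into 1-hour partial sums, and a 3-hour window is answered by adding three tiles instead of rescanning raw events. This is a hypothetical illustration, not Tecton’s implementation; `build_tiles` and `window_sum_from_tiles` are made-up names, and the window end is assumed to fall on a tile boundary.

```python
from collections import defaultdict
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def tile_start(ts, interval):
    """Start of the epoch-aligned tile that contains `ts`."""
    return EPOCH + ((ts - EPOCH) // interval) * interval


def build_tiles(events, interval):
    """Materialization: pre-aggregate raw events into one partial sum per tile."""
    tiles = defaultdict(float)
    for ts, amount in events:
        tiles[tile_start(ts, interval)] += amount
    return dict(tiles)


def window_sum_from_tiles(tiles, window_end, window, interval):
    """Query: combine the window // interval tiles ending at `window_end`
    (assumed to be a tile boundary) instead of rescanning raw events."""
    n = window // interval
    return sum(tiles.get(window_end - (i + 1) * interval, 0.0) for i in range(n))


events = [
    (datetime(2025, 1, 1, 9, 10), 5.0),
    (datetime(2025, 1, 1, 9, 50), 1.0),
    (datetime(2025, 1, 1, 10, 30), 2.0),
    (datetime(2025, 1, 1, 11, 5), 4.0),
    (datetime(2025, 1, 1, 12, 15), 100.0),  # outside the 09:00-12:00 window
]
tiles = build_tiles(events, timedelta(hours=1))
# 3-hour window ending at 12:00 = tiles 09:00 (6.0) + 10:00 (2.0) + 11:00 (4.0)
print(window_sum_from_tiles(tiles, datetime(2025, 1, 1, 12), timedelta(hours=3), timedelta(hours=1)))  # 12.0
```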

By dividing the aggregates into small tiles, Tecton computes each tile only once, and a query only has to combine a handful of precomputed tiles, avoiding storage and latency issues.

When working with lifetime boundaries, Tecton further compacts the entire history into a single tile, cached in the online store.
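A minimal sketch of lifetime compaction, assuming (hypothetically) that a periodic job folds everything before the compaction time into one cached value and that queries add only the events that arrived since then. The function names are illustrative, not Tecton’s API.

```python
from datetime import datetime


def compact(events, compaction_time):
    """Hypothetical compaction job: fold all events before `compaction_time` into one tile."""
    return sum(amount for ts, amount in events if ts < compaction_time)


def lifetime_sum_from_compaction(compacted, compaction_time, events, query_time):
    """Query: the single cached tile plus the events that arrived since the last compaction."""
    recent = sum(amount for ts, amount in events if compaction_time <= ts <= query_time)
    return compacted + recent


events = [(datetime(2022, 4, day), float(day)) for day in range(1, 11)]
compacted = compact(events, datetime(2022, 4, 8))  # days 1-7 -> 28.0
print(lifetime_sum_from_compaction(compacted, datetime(2022, 4, 8), events, datetime(2022, 4, 10)))  # 55.0
```

However long the history grows, a query reads one compacted value plus a short tail of recent events.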

Here is how we can define a user’s lifetime and 7-day transaction sums in Tecton using its feature-as-code Python SDK:

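```python
from datetime import datetime, timedelta

from tecton import Aggregate, LifetimeWindow, TimeWindow, batch_feature_view
from tecton.types import Field, Float64

# `transactions` (a batch data source) and `user` (an entity) are assumed
# to be defined elsewhere in the feature repository.


@batch_feature_view(
    sources=[transactions],
    mode="spark_sql",
    entities=[user],
    features=[
        # Sum of all transaction amounts since lifetime_start_time
        Aggregate(input_column=Field("amount", Float64), function="sum", time_window=LifetimeWindow()),
        # Sum of transaction amounts over the last 7 days
        Aggregate(
            input_column=Field("amount", Float64), function="sum", time_window=TimeWindow(window_size=timedelta(days=7))
        ),
    ],
    feature_start_time=datetime(2022, 5, 1),
    lifetime_start_time=datetime(2022, 4, 1),
    batch_schedule=timedelta(days=1),
    online=True,
    offline=True,
    compaction_enabled=True,
)
def user_average_transaction_amount(transactions):
    return f"SELECT user_id, timestamp, amount FROM {transactions}"
```

Note the `compaction_enabled=True` flag, which enables the tiling logic discussed above.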

2. Stream

Streaming mode offers more options, depending on the trade-off you want between data freshness and query latency.

Continuous mode (using a sliding window movement)

✓ Features are aggregated at query time from the raw events kept in the online store, which gives high freshness.

X Since there are no precomputed values, reads can be slower, and the frequent writes and updates to the online store can increase infrastructure costs.
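Continuous mode can be pictured as an online store that keeps every raw event and does all the aggregation at read time. The sketch below is a hypothetical in-memory stand-in (`RawEventStore` is a made-up name, not a Tecton class) that computes the same kind of 3-day and 7-day means as the `user_transaction_averages` feature view.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import fmean


class RawEventStore:
    """Hypothetical stand-in for an online store that keeps every raw event."""

    def __init__(self):
        self.events = defaultdict(list)  # user_id -> [(timestamp, amt), ...]

    def write(self, user_id, ts, amt):
        # Each incoming event is a separate write: the cost side of continuous mode.
        self.events[user_id].append((ts, amt))

    def mean_over(self, user_id, query_time, window):
        # All aggregation happens at read time over (query_time - window, query_time].
        values = [amt for ts, amt in self.events[user_id] if query_time - window < ts <= query_time]
        return fmean(values) if values else None


store = RawEventStore()
for day in range(1, 8):
    store.write("user_1", datetime(2025, 1, day), float(day))
now = datetime(2025, 1, 7)
print(store.mean_over("user_1", now, timedelta(days=3)))  # mean of 5, 6, 7 -> 6.0
print(store.mean_over("user_1", now, timedelta(days=7)))  # mean of 1..7 -> 4.0
```

Every event is visible immediately, but each read rescans the window, which is why this mode trades read performance for freshness.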

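```python
from datetime import timedelta

from tecton import Aggregate, StreamProcessingMode, stream_feature_view
from tecton.types import Field, Float64

# `transactions` (a stream data source) and `user` (an entity) are assumed to be defined elsewhere.


@stream_feature_view(
    source=transactions,
    entities=[user],
    mode="pandas",
    # Aggregate raw events at query time (sliding window)
    stream_processing_mode=StreamProcessingMode.CONTINUOUS,
    timestamp_field="timestamp",
    features=[
        Aggregate(name="3_day_avg", input_column=Field("amt", Float64), function="mean", time_window=timedelta(days=3)),
        Aggregate(name="7_day_avg", input_column=Field("amt", Float64), function="mean", time_window=timedelta(days=7)),
    ],
)
def user_transaction_averages(transactions):
    return transactions[["user_id", "timestamp", "amt"]]
```

Time interval mode (using a hopping window movement)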

The window moves forward by a fixed tile size, and each tile is cached at the end of its interval.

✓ At query time, we aggregate only the pre-computed tiles, improving read-time performance and reducing costs.

X Medium data freshness, as each tile becomes available only after its interval closes.
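Time interval mode can be sketched the same way: events are summed into fixed tiles, and a query only sees tiles whose interval has already closed. This is a hypothetical plain-Python illustration (the `TileStore` name and the epoch-aligned tiles are assumptions), not Tecton’s implementation.

```python
from collections import defaultdict
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def tile_start(ts, interval):
    """Start of the epoch-aligned tile that contains `ts`."""
    return EPOCH + ((ts - EPOCH) // interval) * interval


class TileStore:
    """Hypothetical tile cache: stream events are summed into the tile covering
    their timestamp, but a tile becomes readable only once its interval closes."""

    def __init__(self, interval):
        self.interval = interval
        self.tiles = defaultdict(float)  # tile start -> partial sum

    def ingest(self, ts, amount):
        self.tiles[tile_start(ts, self.interval)] += amount

    def window_sum(self, query_time, window):
        # The window hops: it ends at the last closed tile boundary, not at query time.
        end = tile_start(query_time, self.interval)
        start = end - window
        return sum(total for s, total in self.tiles.items()
                   if start <= s and s + self.interval <= end)


store = TileStore(timedelta(hours=1))
for ts, amount in [
    (datetime(2025, 1, 1, 10, 15), 3.0),
    (datetime(2025, 1, 1, 10, 45), 2.0),
    (datetime(2025, 1, 1, 11, 20), 4.0),
    (datetime(2025, 1, 1, 12, 5), 10.0),  # lands in the still-open 12:00 tile
]:
    store.ingest(ts, amount)

print(store.window_sum(datetime(2025, 1, 1, 12, 30), timedelta(hours=2)))  # 10:00 + 11:00 tiles
print(store.window_sum(datetime(2025, 1, 1, 13, 0), timedelta(hours=2)))   # 12:00 tile now visible
```

The 12:05 event is ignored at 12:30 and only counted from 13:00 onward: reads are cheap sums over a few tiles, at the cost of up to one interval of staleness.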

```python
@stream_feature_view(
    ...
    # Pre-aggregate events into cached tiles (hopping window)
    stream_processing_mode=StreamProcessingMode.TIME_INTERVAL,
    ...
)
def user_transaction_averages(transactions):
    return transactions[["user_id", "timestamp", "amt"]]
```

Compaction mode (using a sawtooth window movement)

To save memory, you can enable compaction, where Tecton runs regular compaction jobs that aggregate data from the offline store into larger tiles cached in the online store.

But instead of compacting everything into a single large tile (as is done for batch), we have to adopt a different strategy.

We split the window between one large tile and multiple small “sawtooth” tiles at its oldest end.

One large tile represents the bulk of the data, enabling efficient reads and aggregations, while the small sawtooth tiles enable the window to move continuously, creating the “sawtooth” effect.

Whenever new events arrive, they are aggregated into the large tile, which moves forward by the span of one “sawtooth” tile.

For example, for a 7-hour aggregation, we have one large tile of 6 hours and multiple “sawtooth” tiles of 5 minutes (twelve of them cover the remaining hour). The window hops forward by the length of one “sawtooth” tile.

Because the window’s oldest edge moves in small, tile-sized jumps rather than continuously, its effective length rises and drops slightly over time, which creates the “sawtooth” effect.

✓ A balance between storage and read-time efficiency, with high freshness.

X Fuzzy windowing due to the “sawtooth” effect, which is often acceptable.
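The fuzzy boundary of a sawtooth window can be sketched by snapping the window’s oldest edge down to a sawtooth-tile boundary while the newest edge follows query time. This is a simplified, hypothetical model of the effect only: it does not reproduce Tecton’s large-tile layout, and snapping down rather than up is an assumption.

```python
from datetime import datetime, timedelta

EPOCH = datetime(1970, 1, 1)


def floor_to(ts, step):
    """Round `ts` down to a multiple of `step`, counted from the Unix epoch."""
    return EPOCH + ((ts - EPOCH) // step) * step


def sawtooth_window(query_time, window, sawtooth_tile):
    """Effective (start, end) of a sawtooth window.

    The oldest edge is covered by whole sawtooth tiles, so the nominal start is
    snapped down to a tile boundary (an assumption of this sketch); the newest
    edge follows query time, which keeps the head of the window fresh.
    """
    start = floor_to(query_time - window, sawtooth_tile)
    return start, query_time


def sawtooth_sum(events, query_time, window, sawtooth_tile):
    start, end = sawtooth_window(query_time, window, sawtooth_tile)
    return sum(amount for ts, amount in events if start <= ts <= end)


window, tile = timedelta(hours=7), timedelta(minutes=5)
for minute in (3, 5, 7):
    q = datetime(2025, 1, 1, 12, minute)
    start, _ = sawtooth_window(q, window, tile)
    print(q.time(), "effective length:", q - start)  # between 7:00:00 and just under 7:05:00

events = [(datetime(2025, 1, 1, 5, 1), 1.0), (datetime(2025, 1, 1, 9, 0), 2.0)]
print(sawtooth_sum(events, datetime(2025, 1, 1, 12, 3), window, tile))  # 05:01 event still counted
print(sawtooth_sum(events, datetime(2025, 1, 1, 12, 6), window, tile))  # 05:01 event dropped
```

With a 7-hour window and 5-minute tiles, a query at 12:03 covers 05:00 to 12:03 (7 hours 3 minutes), while a query at 12:05 covers exactly 7 hours. An event at 05:01 is counted at 12:03 but dropped at 12:06: that slack of up to one tile is the fuzziness described above.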

```python
@stream_feature_view(
    ...
    # Compact older data into larger tiles cached in the online store (sawtooth window)
    compaction_enabled=True,
    ...
)
def user_transaction_averages(transactions):
    return transactions[["user_id", "timestamp", "amt"]]
```

📌 Try out the code by building a real-time fraud detection model using Tecton:

Want to shave a few years off your MLOps learning curve? (Affiliate)

Here's something you'll be interested in:

End-to-end MLOps with Databricks

→ A live, hands-on course starting January 27th

(It's already a bestseller on Maven with a 4.9 🌟 rating)

The No. 1 thing that makes this course stand out?

While they use Databricks, the focus is on MLOps principles.

This means you won't only learn the tools; you'll master the process.

The course is led by … and …, the founders of …

I have known them for over a year, and I can genuinely say they are exceptional engineers and teachers, a rare combination!

Here's what you'll learn:

MLOps principles and components

Best Python development practices

Taking code from a notebook to production

Experiment tracking with MLflow & Unity Catalog

Databricks asset bundles (DAB)

Git branching & environment management

Model serving architectures

Setting up a model evaluation pipeline

Monitoring data/model drift & lakehouse solutions


This course is for:

ML/DS professionals looking to level up in MLOps

MLEs and platform engineers ready to dominate Databricks


Ready to go from MLOps noobie to pro?

→ Next cohort starts January 27th (only one week left)

→ Get 10% off with code: PAUL

→ 100% company reimbursement eligible

My 2025 prediction on RAG, semantic search, and retrieval systems


(It might rub a few people the wrong way)

Their future lies in multi-attribute vector indexes that combine tabular data with unstructured data such as text, images, or audio.

Why do I believe this?

Most applications (e.g., search, analytics, retrieval systems) rely on tabular data rather than unstructured data.

That’s why leveraging such data for semantic search is the future.

(Not text-to-SQL, which is just another layer of complexity on top of what we currently have.)

Here's how you can build a multi-attribute vector index for Amazon e-commerce products:

(Note: we leveraged Superlinked and MongoDB Atlas Vector Search to build this)

Process the data

No chunking is needed. Clean up the columns similar to a data science problem (e.g., convert review counts to integers, fix NaNs, format reviews).
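A minimal sketch of this cleanup step in plain Python, assuming each product arrives as a dict with hypothetical `description`, `review_count`, `price`, and `rating` columns. The column names and raw formats are assumptions, not the actual Amazon dataset schema.

```python
import math


def is_missing(value):
    """True for None, empty strings, and float NaN."""
    return value is None or value == "" or (isinstance(value, float) and math.isnan(value))


def clean_row(row):
    """Clean one raw product row; each cleaned row later becomes one node in the vector space."""
    count, price, rating = row.get("review_count"), row.get("price"), row.get("rating")
    return {
        # Free text: fall back to an empty string and strip stray whitespace.
        "description": "" if is_missing(row.get("description")) else str(row["description"]).strip(),
        # Counts such as "1,234" become integers; missing counts become 0.
        "review_count": 0 if is_missing(count) else int(str(count).replace(",", "")),
        # Prices such as "$19.99" become floats; missing prices stay None.
        "price": None if is_missing(price) else float(str(price).replace("$", "").replace(",", "")),
        # Missing ratings become None rather than NaN, so they are easy to filter out.
        "rating": None if is_missing(rating) else float(rating),
    }


raw = {"description": "  Wireless mouse ", "review_count": "1,234", "price": "$19.99", "rating": float("nan")}
print(clean_row(raw))
```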

Treat each table row as a node in your vector space.

Build the multi-attribute index

Define your schema using any variable type (e.g., product category, review count, value, description).

Create vector similarity spaces for the product description, price, and review rating attributes.

Query the index

Customize searches by weighting the attributes during semantic search to optimize results (e.g., prioritize low prices or high reviews).

Leveraging Superlinked, you can fine-tune your searches through weighted attributes.

This allows you to prioritize different aspects of your data during retrieval, leveraging the same vector index (quicker to experiment and cheaper to implement).
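The weighting idea can be illustrated without Superlinked. In the sketch below, each product becomes one concatenated vector (a text part, a price part, and a rating part), and the weights are applied only to the query vector, so the same index serves every weighting. This does not reproduce Superlinked’s API: the keyword-count `text_vector` is a toy stand-in for a real embedding model, and the price and rating encodings are assumptions.

```python
import math

# Toy vocabulary standing in for a real embedding model (assumption).
VOCAB = ["wireless", "mouse", "keyboard", "gaming", "ergonomic"]


def text_vector(text: str) -> list[float]:
    """Toy text embedding: keyword counts over VOCAB, normalized to unit length."""
    words = text.lower().split()
    vec = [float(words.count(term)) for term in VOCAB]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def product_vector(product: dict, min_price: float, max_price: float) -> list[float]:
    """Concatenate one sub-vector per attribute: [text..., price, rating]."""
    span = (max_price - min_price) or 1.0
    cheapness = 1.0 - (product["price"] - min_price) / span  # 1.0 = cheapest product
    quality = product["rating"] / 5.0  # assumes ratings on a 0-5 scale
    return text_vector(product["description"]) + [cheapness, quality]


def build_index(products: list[dict]) -> list[tuple[str, list[float]]]:
    prices = [p["price"] for p in products]
    lo, hi = min(prices), max(prices)
    return [(p["name"], product_vector(p, lo, hi)) for p in products]


def search(index, text, w_text=1.0, w_price=0.0, w_rating=0.0):
    """Rank products by dot product with a weighted query vector, so the score is
    w_text * text_similarity + w_price * cheapness + w_rating * quality."""
    query = [w_text * v for v in text_vector(text)] + [w_price, w_rating]
    scored = [(sum(q * x for q, x in zip(query, vec)), name) for name, vec in index]
    return [name for _, name in sorted(scored, reverse=True)]


products = [
    {"name": "budget", "description": "wireless mouse", "price": 10.0, "rating": 3.0},
    {"name": "premium", "description": "wireless mouse ergonomic", "price": 80.0, "rating": 4.8},
    {"name": "keyboard", "description": "gaming keyboard", "price": 50.0, "rating": 4.5},
]
index = build_index(products)
print(search(index, "wireless mouse"))                # text only
print(search(index, "wireless mouse", w_rating=1.0))  # text + rating
```

Querying "wireless mouse" with text only ranks the cheap exact match first (`['budget', 'premium', 'keyboard']`); adding a rating weight lifts the better-rated product above it (`['premium', 'budget', 'keyboard']`) without rebuilding the index.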

But how to do this is a discussion for another day.

📌 Read more about this here:

Forget text-to-SQL: Use this natural query instead


Images

If not otherwise stated, all images are created by the author.