Monolith vs micro: The $1M ML design decision

The weight of your ML serving architectural choice

ML services can be deployed in three main architectures:

Offline batch transform

Online real-time inference

Asynchronous inference

There is also edge inference, but if you abstract away the implementation details, it still boils down to the three options above.

You can couple these architectures with batch or streaming data sources. For example, you can consume streaming data from a Kafka topic and compute predictions with a real-time inference engine.

The differences between these three designs lie mainly in how the client interacts with the ML service: the communication protocol, the ML service’s responsiveness, and prediction freshness.

However, another aspect to consider is the architecture of the ML service itself. It can be implemented as a monolithic server or as multiple microservices. The architecture will impact how the ML service is implemented, maintained, and scaled.

Here is an article from Decoding ML exploring the batch, real-time and async AI inference designs in detail:

This article will dig into the ML service and how to design it. We will explore the following:

Monolith design

Microservices

Monolith vs. Microservices

An LLM example

Table of Contents:

Monolithic architecture

Microservices architecture

Choosing between monolithic and microservices architectures

RAG inference pipelines using microservices

1. Monolithic architecture

In a monolithic architecture, the LLM (or any other ML model) and the associated business logic (preprocessing and post-processing steps) are bundled into a single service. This approach is straightforward to implement at the beginning of a project, as everything lives in one code base. For small to medium projects, this simplicity also makes maintenance easy, because updates and changes are made within a unified system.

One key challenge of a monolithic architecture is the difficulty of scaling components independently. The LLM typically requires GPU power, while the rest of the business logic is CPU- and I/O-bound. As a result, the infrastructure must be provisioned for both GPU and CPU workloads. This can lead to inefficient resource use: the GPU sits idle while the business logic executes, and vice versa. Such inefficiency results in costs that could be avoided.
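The following back-of-the-envelope sketch puts a rough number on this idle time. It assumes a single worker that processes one request at a time, with no overlap between the CPU and GPU steps. The timings are hypothetical placeholders, not measurements.

```python
def gpu_utilization(cpu_ms: float, gpu_ms: float) -> float:
    """Share of wall-clock time the GPU is busy when one worker runs the
    CPU-bound business logic and the GPU-bound inference back to back."""
    return gpu_ms / (cpu_ms + gpu_ms)


# Hypothetical per-request timings: 150 ms of CPU work (retrieval, prompt
# building, post-processing) and 350 ms of LLM inference on the GPU.
utilization = gpu_utilization(cpu_ms=150, gpu_ms=350)
print(f"GPU busy {utilization:.0%} of the time, idle {1 - utilization:.0%}")
# GPU busy 70% of the time, idle 30%
```

With these assumed numbers, you would pay for a GPU that does nothing for roughly a third of every request.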

Moreover, this architecture can limit flexibility, as all components must share the same tech stack and runtime environment. For example, you might want to run the LLM using Rust or C++ or compile it with ONNX or TensorRT while keeping the business logic in Python. Having all the code in one system makes this differentiation difficult.

Finally, splitting the work across different teams is complex, often leading to bottlenecks and reduced agility.

2. Microservices architecture

A microservices architecture breaks down the inference pipeline into separate, independent services—typically splitting the LLM service and the business logic into distinct components. These services communicate over a network using protocols such as REST or gRPC.

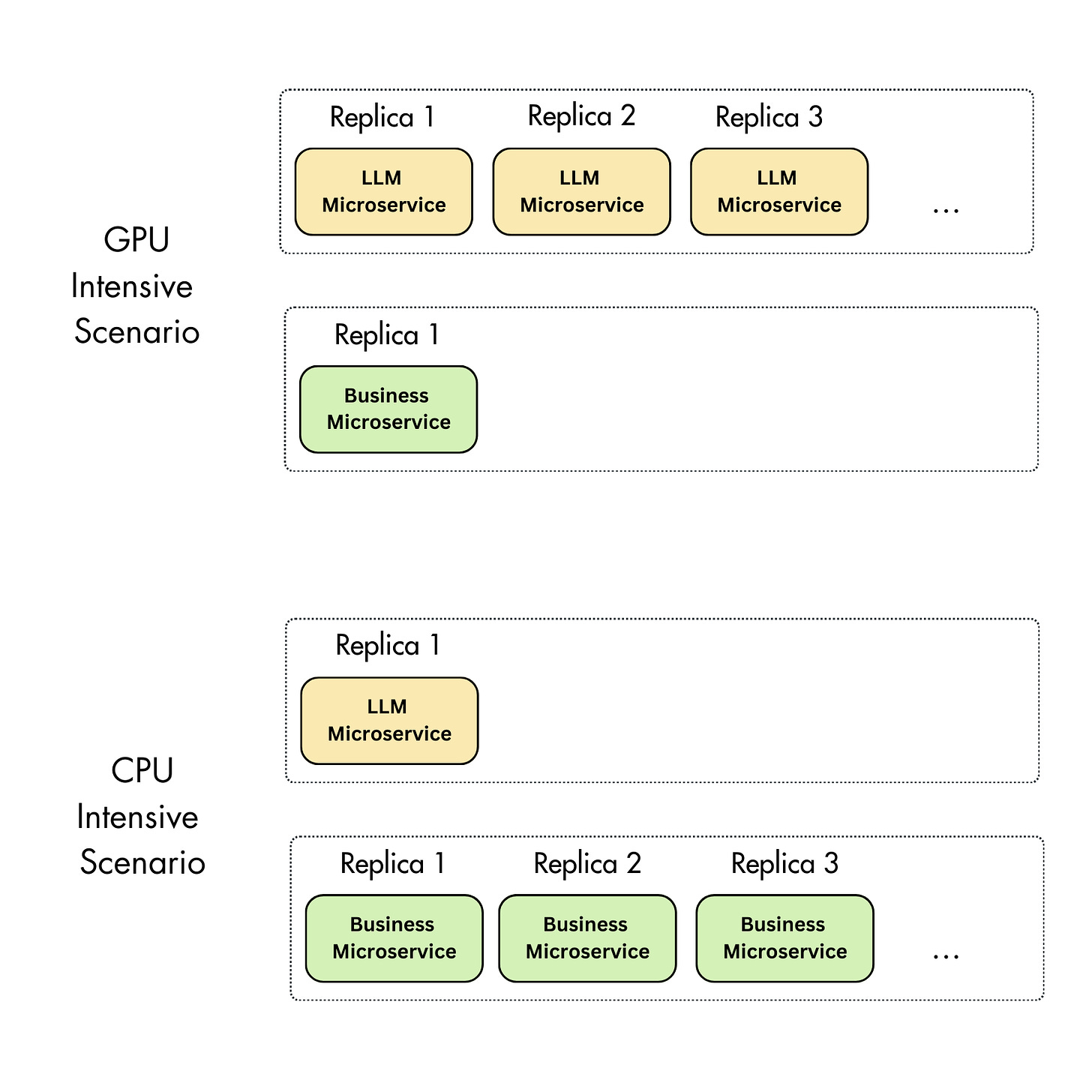

As illustrated in Figure 2, the main advantage of this approach is the ability to scale each component independently. For instance, since the LLM service might require more GPU resources than the business logic, it can be scaled horizontally without impacting the other components. This optimizes resource usage and reduces costs, as different types of machines (e.g., GPU versus CPU) can be used according to each service’s needs.

For example, assume that LLM inference is the slowest step, so you need more ML service replicas to meet demand. Remember that GPU VMs are expensive. When you decouple the two components, only the work that actually needs a GPU runs on the GPU machines. Those VMs are no longer tied up with computation that a much cheaper CPU machine can handle. You can then scale each component horizontally as needed, at minimal cost.
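This reasoning fits in a few lines of Python. The load, per-request timings, and hourly VM prices below are hypothetical placeholders. The model also ignores batching and network overhead. It only shows how paying GPU prices for CPU work adds up when every replica must be a GPU VM.

```python
import math


def replicas(load_rps: float, ms_per_request: float) -> int:
    """Replicas needed when each replica serves one request at a time."""
    # One replica handles 1000 / ms_per_request requests per second.
    return math.ceil(load_rps * ms_per_request / 1000)


# Hypothetical inputs, for illustration only.
LOAD_RPS = 10              # incoming requests per second
CPU_MS, GPU_MS = 150, 350  # per-request CPU and GPU time
GPU_VM_HOURLY = 1.00       # placeholder price of a GPU VM
CPU_VM_HOURLY = 0.10       # placeholder price of a CPU VM

# Monolith: every replica is a GPU VM that also does the CPU work.
monolith_cost = replicas(LOAD_RPS, CPU_MS + GPU_MS) * GPU_VM_HOURLY

# Microservices: GPU VMs only run inference; cheap CPU VMs run the rest.
micro_cost = (
    replicas(LOAD_RPS, GPU_MS) * GPU_VM_HOURLY
    + replicas(LOAD_RPS, CPU_MS) * CPU_VM_HOURLY
)

print(f"monolith: ${monolith_cost:.2f}/h, microservices: ${micro_cost:.2f}/h")
# monolith: $5.00/h, microservices: $4.20/h
```

The savings grow with the CPU share of each request. If the business logic is negligible, the gap shrinks, and the extra service may not be worth its operational cost.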

Additionally, each microservice can adopt the most suitable technology stack, allowing teams to innovate and optimize independently.

However, microservices introduce complexity in deployment and maintenance. Each service must be deployed, monitored, and maintained separately, which can be more challenging than managing a monolithic system. The increased network communication between services can also introduce latency and potential points of failure, necessitating robust monitoring and resilience mechanisms.

Note that the proposed design for decoupling the ML model and business logic into two services can be extended if necessary. For example, you can have one service for preprocessing the data, one for the model, and another for post-processing the data. Depending on the four pillars (latency, throughput, data, and infrastructure), you can get creative and design the architecture that best fits your application’s needs.
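One way to keep this flexibility in code is to treat each stage as a plain callable. In the minimal sketch below, the `InferencePipeline` class and the toy stages are hypothetical. Every stage runs in-process here. Any of them could later be replaced by a client that calls a separate preprocessing, model, or post-processing service, without touching the others.

```python
from dataclasses import dataclass
from typing import Any, Callable

Stage = Callable[[Any], Any]


@dataclass
class InferencePipeline:
    """Chains preprocessing, model, and post-processing stages.

    Each stage is just a callable, so it can be a local function today
    and a thin client for a remote microservice tomorrow.
    """

    preprocess: Stage
    model: Stage
    postprocess: Stage

    def __call__(self, raw_input: Any) -> Any:
        return self.postprocess(self.model(self.preprocess(raw_input)))


# Toy stages standing in for real services (hypothetical).
pipeline = InferencePipeline(
    preprocess=lambda text: text.strip().lower(),
    model=lambda text: {"label": "positive" if "good" in text else "negative"},
    postprocess=lambda prediction: prediction["label"].upper(),
)
print(pipeline("  This is GOOD  "))  # POSITIVE
```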

3. Choosing between monolithic and microservices architectures

The choice between monolithic and microservices architectures for serving ML models largely depends on the application’s specific needs.

A monolithic approach might be ideal for smaller teams or more straightforward applications where ease of development and maintenance is a priority. It’s also a good starting point for projects without frequent scaling requirements. Also, the ML models may be small and either need no GPU or run on smaller, cheaper GPUs. In that case, the cost savings of splitting them into a separate service may not justify the extra infrastructure complexity.

On the other hand, microservices, with their adaptability and scalability, are well suited for larger, more complex systems where different components have varying scaling needs or require distinct tech stacks. This architecture is particularly advantageous when scaling specific system parts, such as GPU-intensive LLM services. LLMs require powerful GPU machines, such as the Nvidia A100, V100, or A10G, which are very expensive. Microservices let you keep these machines busy all the time, or quickly scale them down when the GPU is idle. However, this flexibility comes at the cost of increased complexity in both development and operations.

A common strategy is to start with a monolithic design and further decouple it into multiple services as the project grows.

However, to successfully do so without making the transition too complex and costly, you must design the monolith application with this in mind. For instance, even if all the code runs on a single machine, you can completely decouple the modules of the application at the software level.

This makes moving these modules to different microservices easier when the time comes. When working with Python, for example, you can implement the ML and business logic in two different Python modules that don’t interact with each other. Then, you can glue these two modules together at a higher level. This can be a service class, or the framework you use to expose your application over the internet, such as FastAPI.
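Here is a minimal sketch of that separation, collapsed into a single file for brevity. The names `TextGenerator`, `LocalEchoModel`, `build_prompt`, and `ChatService` are hypothetical. In a real project, the ML and business sections would live in separate modules, and a FastAPI route, for example, would call the glue class.

```python
from typing import Protocol


# --- "ml" module: knows nothing about the business logic ---------------
class TextGenerator(Protocol):
    def generate(self, prompt: str) -> str: ...


class LocalEchoModel:
    """Hypothetical stand-in for an LLM running in the same process."""

    def generate(self, prompt: str) -> str:
        return f"answer to: {prompt}"


# --- "business" module: knows nothing about how the model runs ---------
def build_prompt(question: str, context: list[str]) -> str:
    return "Context:\n" + "\n".join(context) + f"\nQuestion: {question}"


# --- glue layer: the only place where the two modules meet -------------
class ChatService:
    def __init__(self, generator: TextGenerator) -> None:
        self.generator = generator

    def answer(self, question: str, context: list[str]) -> str:
        return self.generator.generate(build_prompt(question, context))


service = ChatService(LocalEchoModel())
print(service.answer("What is RAG?", ["RAG = retrieval-augmented generation"]))
```

When you later move the LLM into its own microservice, you only need another class with a `generate(prompt)` method that calls it over the network. You then pass that class to `ChatService`, and the business module stays untouched.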

Another option is to write the ML and business logic as two different Python packages that you glue together in the same ways as before. This is better because it completely enforces a separation between the two but adds extra complexity at development time.

The main idea, therefore, is that if you start with a monolith and down the line you want to move to a microservices architecture, it’s essential to design your software with modularity in mind. Otherwise, if the logic is mixed, you will probably have to rewrite everything from scratch, adding tons of development time, translating into wasted resources.

4. RAG inference pipelines using microservices

Let’s look at implementing a RAG inference pipeline using the microservice architecture.

Our primary objective is to develop a chatbot. To achieve this, we will process requests sequentially, with a strong emphasis on low latency. This necessitates the selection of an online real-time inference deployment architecture.

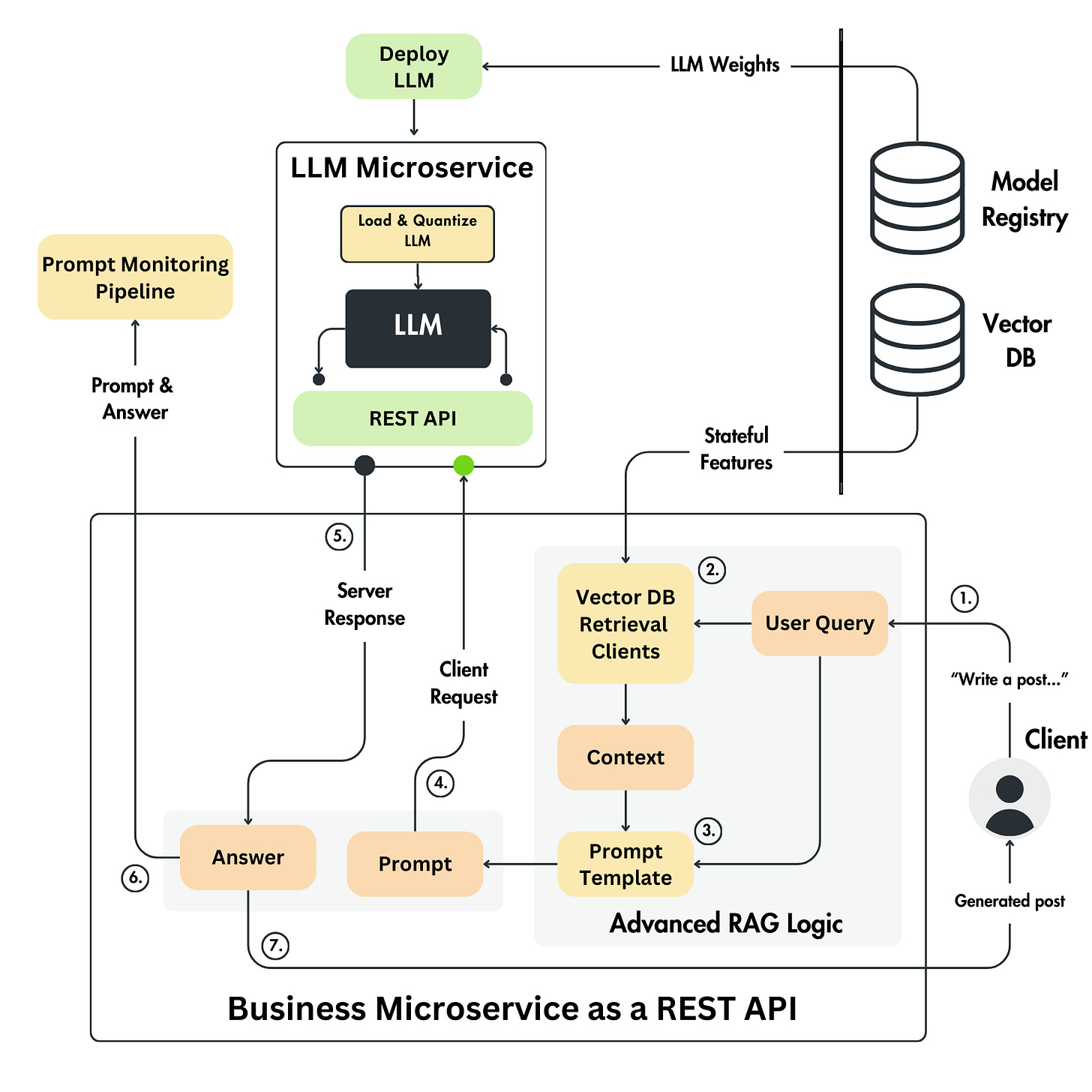

On the monolith versus microservice aspect, we will split the ML service between a REST API server containing the business (RAG) logic and an LLM microservice optimized for running the given LLM.

The LLM requires a powerful machine to run inference, and we can further optimize it with various inference engines to reduce latency and memory usage. For these reasons, the microservice architecture makes the most sense.

By doing so, we can quickly adapt the infrastructure to various LLM sizes. For example, an 8B-parameter model can run on a single machine with an Nvidia A10G GPU after quantization. If we want to run a 30B-parameter model, we can upgrade to an Nvidia A100 GPU. Only the LLM microservice needs the upgrade; the REST API stays untouched.
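A rough way to reason about which GPU an LLM needs is to estimate the memory its weights take: the number of parameters times the bytes per parameter. The sketch below does this for 16-bit, 8-bit, and 4-bit weights. The 1.2 headroom factor for the KV cache and activations is a crude assumption, so treat the result as a first filter, not a sizing guarantee.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

# Memory per GPU in GB. A10G: 24 GB; A100: 40 GB and 80 GB variants.
GPU_MEMORY_GB = {"A10G": 24, "A100-40GB": 40, "A100-80GB": 80}


def weights_gb(params_billion: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1e9 params x bytes / 1e9)."""
    return params_billion * BYTES_PER_PARAM[dtype]


def smallest_fitting_gpu(params_billion: float, dtype: str, headroom: float = 1.2):
    """Return the smallest single GPU whose memory covers weights x headroom.

    The headroom factor (for KV cache and activations) is an assumption.
    Returns None if no single GPU in the table is large enough.
    """
    needed = weights_gb(params_billion, dtype) * headroom
    for name, memory in sorted(GPU_MEMORY_GB.items(), key=lambda kv: kv[1]):
        if needed <= memory:
            return name
    return None


print(smallest_fitting_gpu(8, "int4"))   # 8B model, 4-bit weights -> A10G
print(smallest_fitting_gpu(30, "fp16"))  # 30B model, 16-bit weights -> A100-80GB
```

Under the same assumptions, a 30B model quantized to 8 bits (about 36 GB with headroom) would fit the 40 GB A100 variant. Only the LLM microservice's machine type changes in each case.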

As illustrated in Figure 3, most business logic is centered around RAG in our particular use case. Thus, we will perform RAG’s retrieval and augmentation parts within the business microservice. It can also include advanced RAG techniques to optimize the pre-retrieval, retrieval, and post-retrieval steps. The LLM microservice is strictly optimized for the RAG generation component.

In summary, our approach involves implementing an online real-time ML service using a microservice architecture, which effectively splits the LLM and business (RAG) logic into two distinct services.

Let’s review the interface of the inference pipeline, which is defined by the feature/training/inference (FTI) architecture. For the pipeline to run, it needs two things:

Real-time features used for RAG, generated by the feature pipeline and queried from our online feature store, more concretely from a vector database (DB)

A fine-tuned LLM generated by the training pipeline, which is pulled from our model registry
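The toy sketch below makes the first dependency concrete. It shows what the business service asks of the vector DB: return the chunks whose embeddings are most similar to the query embedding. The `InMemoryVectorStore` class and the hand-written 3-dimensional embeddings are hypothetical stand-ins. A real vector DB exposes its own client API and typically uses approximate nearest-neighbor indexes instead of this brute-force cosine search.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class InMemoryVectorStore:
    """Hypothetical stand-in for the online feature store / vector DB."""

    def __init__(self) -> None:
        self._items: list[tuple[str, list[float]]] = []

    def add(self, text: str, embedding: list[float]) -> None:
        self._items.append((text, embedding))

    def search(self, query_embedding: list[float], k: int = 2) -> list[str]:
        """Return the k chunks most similar to the query (brute force)."""
        ranked = sorted(
            self._items,
            key=lambda item: cosine_similarity(query_embedding, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


store = InMemoryVectorStore()
store.add("A monolith bundles the model and business logic.", [1.0, 0.0, 0.0])
store.add("Microservices split the LLM into its own service.", [0.0, 1.0, 0.0])
store.add("GPU VMs are expensive.", [0.0, 0.8, 0.6])
print(store.search([0.0, 1.0, 0.1], k=2))
```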

With that in mind, the flow of the ML service looks as follows, as illustrated in Figure 3:

A user sends a query through an HTTP request.

The user’s input is used to retrieve the relevant context through the advanced RAG retrieval module.

The user’s input and retrieved context are packed into the final prompt using a dedicated prompt template.

The prompt is sent to the LLM microservice through an HTTP request.

The business microservice waits for the generated answer.

After the answer is generated, it is sent to the prompt monitoring pipeline along with the user’s input and other vital information to monitor.

Ultimately, the generated answer is sent back to the user.
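The flow above can be sketched as a single handler in the business microservice. This minimal sketch rests on several assumptions. The LLM microservice URL, its JSON request/response schema (`{"prompt": ...}` in, `{"answer": ...}` out), the prompt template, and all function names are hypothetical. The retrieval and monitoring steps are passed in as plain callables, so the handler can be tested without a vector DB or monitoring backend.

```python
import json
import urllib.request
from typing import Callable

# Hypothetical prompt template used in step 3.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\n"
    "Answer:"
)


def build_llm_request(prompt: str, url: str) -> urllib.request.Request:
    """Step 4: wrap the prompt in an HTTP POST for the LLM microservice.

    The {"prompt": ...} payload schema is an assumption, not a real API.
    """
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def call_llm(prompt: str, url: str, timeout_s: float = 30.0) -> str:
    """Steps 4-5: send the prompt and block until the answer comes back."""
    request = build_llm_request(prompt, url)
    with urllib.request.urlopen(request, timeout=timeout_s) as response:
        # Assumes the LLM microservice replies with {"answer": "..."}.
        return json.loads(response.read())["answer"]


def handle_query(
    query: str,
    retrieve: Callable[[str], list[str]],
    generate: Callable[[str], str],
    monitor: Callable[[dict], None],
) -> str:
    """Steps 2-7 for a query that already arrived over HTTP (step 1)."""
    context = retrieve(query)  # step 2: RAG retrieval
    prompt = PROMPT_TEMPLATE.format(  # step 3: build the final prompt
        context="\n".join(context), question=query
    )
    answer = generate(prompt)  # steps 4-5: call the LLM service and wait
    monitor({"query": query, "prompt": prompt, "answer": answer})  # step 6
    return answer  # step 7: send the answer back to the user


# Usage with fake dependencies, so no vector DB or GPU is needed.
logs: list[dict] = []
answer = handle_query(
    "Why run the LLM as a separate service?",
    retrieve=lambda q: ["GPU VMs are expensive.", "CPU VMs are cheap."],
    generate=lambda p: "To scale GPU and CPU work independently.",
    monitor=logs.append,
)
print(answer)
```

In production, `generate` could be `functools.partial(call_llm, url=...)` pointing at the LLM microservice. Step 1 would be a web framework route (FastAPI, for instance) that receives the user’s query and calls `handle_query`. Injecting the dependencies keeps the business logic unaware of where the LLM runs.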

Conclusion

In summary, monolithic architectures offer simplicity and ease of maintenance but at the cost of flexibility and scalability. At the same time, microservices provide the agility to scale and innovate but require more sophisticated management and operational practices.

Our latest book, the LLM Engineer’s Handbook, inspired this article.

If you liked this article, consider supporting our work by buying our book and getting access to an end-to-end framework on how to engineer production LLM & RAG applications, from data collection to fine-tuning, serving and LLMOps:

Images

If not otherwise stated, all images are created by the author.
