AI Agents: Architect the long-term memory

When to prompt, RAG, fine-tune, or deploy agents. Architecting LLM fine-tuning pipelines.

This week’s topics:

Architecting the long-term memory layer of an AI agent

Build LLM-first systems: When to prompt, RAG, fine-tune, or deploy agents

Architecting LLM fine-tuning pipelines

Architecting the long-term memory layer of an AI agent

Short-term memory makes your agent feel smart.

Long-term memory is what makes it feel alive.

In Lesson 4 of the PhiloAgents course, we show you how to build a long-term memory system from scratch.

This is done using a production-grade RAG architecture that bridges context, recall, and reasoning.

Because here’s the thing:

Most LLM agents “forget” everything outside the current conversation.

They fake continuity.

They hallucinate facts.

They break under pressure.

We wanted to change that.

So we built a long-term memory layer using three main components:

1. The RAG Ingestion Pipeline

Think of this as the library builder.

It runs offline and prepares high-quality knowledge before a single question is asked.

It includes:

Extracting data from Wikipedia + Stanford Encyclopedia

Cleaning noise and irrelevant fluff

Chunking into semantically meaningful slices

Deduplication to remove redundancy (using MinHash)

Embedding using HuggingFace open-source models + metadata tagging

Hybrid indexing inside MongoDB for semantic + keyword search

This gives our PhiloAgents a clean, optimized, and searchable memory base - ready to serve on demand.
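To make the chunking and deduplication steps concrete, here is a minimal sketch in plain Python. It is not the course code: the function names, the sentence-based chunking, the 3-word shingles, and the 0.8 threshold are all assumptions chosen for illustration. A real pipeline would likely use a dedicated MinHash library and a smarter splitter.

```python
import hashlib
import re


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Group whole sentences into chunks of at most ~max_words words.

    Sentence boundaries are a simple stand-in for "semantic" chunking.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], []
    for sentence in sentences:
        words = sentence.split()
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


def shingles(text: str, size: int = 3) -> set[str]:
    """Return the set of overlapping word n-grams of a text."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {" ".join(words)}
    return {" ".join(words[i:i + size]) for i in range(len(words) - size + 1)}


def minhash_signature(text: str, num_hashes: int = 64) -> list[int]:
    """One minimum hash value per seeded hash function."""
    items = shingles(text)
    signature = []
    for seed in range(num_hashes):
        signature.append(min(
            int.from_bytes(hashlib.sha1(f"{seed}:{s}".encode()).digest()[:8], "big")
            for s in items
        ))
    return signature


def estimated_jaccard(sig_a: list[int], sig_b: list[int]) -> float:
    """Fraction of matching positions approximates Jaccard similarity."""
    return sum(a == b for a, b in zip(sig_a, sig_b)) / len(sig_a)


def deduplicate(chunks: list[str], threshold: float = 0.8) -> list[str]:
    """Keep a chunk only if it is not a near-duplicate of one already kept."""
    kept, signatures = [], []
    for chunk in chunks:
        sig = minhash_signature(chunk)
        if all(estimated_jaccard(sig, seen) < threshold for seen in signatures):
            kept.append(chunk)
            signatures.append(sig)
    return kept
```

This version compares every chunk against every kept chunk, which is fine for a demo but quadratic. At scale, MinHash is usually paired with locality-sensitive hashing to avoid the pairwise comparisons.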

2. The RAG Retriever Tool

This is where it goes live.

During a conversation, when the agent needs external knowledge, it:

Embeds the user query using the same model as ingestion

Performs vector search via MongoDB to fetch relevant memory chunks

Those chunks are passed to the LLM as grounded context, giving it a rich, factual foundation to respond from.
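As a rough sketch of the retrieval step, the helper below builds a MongoDB aggregation pipeline with a `$vectorSearch` stage and formats the returned chunks as context for the LLM. The index name, the field names (`embedding`, `text`), and the `numCandidates` multiplier are assumptions for illustration, not values taken from the course.

```python
from typing import Any


def build_vector_search_pipeline(
    query_embedding: list[float],
    k: int = 3,
    index_name: str = "vector_index",    # hypothetical index name
    embedding_field: str = "embedding",  # hypothetical field holding the vectors
) -> list[dict[str, Any]]:
    """Build an aggregation pipeline that returns the k nearest chunks."""
    return [
        {
            "$vectorSearch": {
                "index": index_name,
                "path": embedding_field,
                "queryVector": query_embedding,
                "numCandidates": k * 20,  # wider candidate pool than the final limit
                "limit": k,
            }
        },
        {
            "$project": {
                "_id": 0,
                "text": 1,  # hypothetical field holding the chunk text
                "score": {"$meta": "vectorSearchScore"},
            }
        },
    ]


def format_context(chunks: list[dict[str, Any]]) -> str:
    """Join retrieved chunks into a numbered context block for the prompt."""
    return "\n\n".join(
        f"[{i}] {chunk['text']}" for i, chunk in enumerate(chunks, start=1)
    )
```

With pymongo, you would pass the pipeline to `collection.aggregate(...)` on a collection that has a matching vector search index, with the query embedded by the same model used at ingestion time.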

3. Procedural Memory

This one lives directly in the Python code: the LangGraph structure, the LLM prompts, and the tools.

And none of this would be possible without the full stack in play:

Groq gives us ultra-fast inference with Llama 3.1.

Opik (by Comet) gives us traceability and prompt monitoring.

This is agentic RAG in action.

And it’s the difference between shallow Q&A and real-time philosophical simulation.

We chose MongoDB because it's fast, battle-tested, and supports hybrid search out of the box.

That gives us semantic recall and precision filters when needed.
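To build intuition for what "hybrid" means here, the sketch below merges a semantic ranking and a keyword ranking with reciprocal rank fusion (RRF), one common way to combine the two. This is an illustration only: the function name and the constant `k = 60` are assumptions, and the course may combine results differently.

```python
def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked lists of document ids into one ranking.

    Each document scores 1 / (k + rank) per list it appears in, so documents
    ranked highly by both searches rise to the top.
    """
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Sort by descending score, breaking ties by id for a stable order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


semantic_hits = ["a", "b", "c"]  # ids ranked by vector similarity
keyword_hits = ["b", "d", "a"]   # ids ranked by keyword match
print(reciprocal_rank_fusion([semantic_hits, keyword_hits]))
# → ['b', 'a', 'd', 'c']
```

Documents "a" and "b" appear in both lists, so they outrank "c" and "d", which each appear in only one.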

You can implement this yourself.

All the code, lessons, and architecture diagrams are free and open-source.

Want to build agentic systems that remember, reason, and evolve?

Lesson 4 of the PhiloAgents free course will get you there ↓↓↓

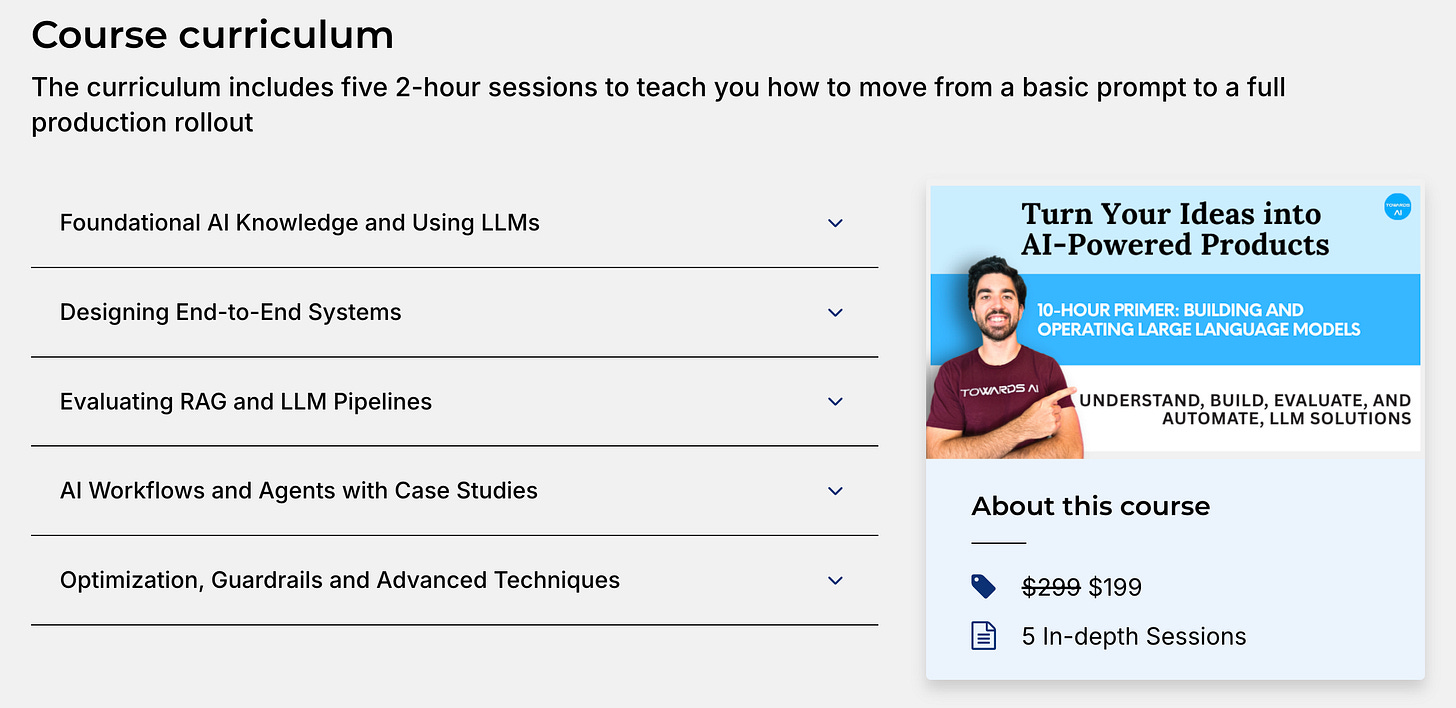

Build LLM-First Systems: When to Prompt, RAG, Fine-Tune, or Deploy Agents (Affiliate)

Two months ago, I started collaborating with Towards AI and Louis on our agents course, for which we sold our 150 pre-release slots in under 24 hours.

You know Louis? The one and only who co-authored the bestseller Building LLMs for Production.

🔎 Why did I start collaborating with them?

Because we share precisely the same values: “Building REAL-WORLD AI applications and teaching people about the process.”

No hype. No toys. Just sweat and hands-on engineering, ready for the industry.

🥂 With that in mind, I want to share with you that they released a new top-notch course on understanding how to build, evaluate, automate, and maintain robust LLM solutions.

Everything is packed in 10 hours of high-quality video footage.

Hence the name of the course: 10-Hour Primer: Building and Operating LLMs

I personally recommend this course for individuals new to AI who want to get hands-on experience and learn how to transition from a basic prompt to a full LLM production rollout.

Use code Paul_15 to get 15% off the price.

Architecting LLM fine-tuning pipelines

Fine-tuning isn’t hard.

Here's where most pipelines fall apart:

Integrating it into a full LLM system.

So here’s how we architected our training pipeline:

Inputs and outputs

The training pipeline has one job:

Input:

A dataset from the data registry

A base model from the model registry

Output:

A fine-tuned model registered in a model registry and ready for deployment

In our case:

Base: Llama 3.1 8B Instruct

Dataset: Custom summarization data generated from web documents

Output: A specialized model that summarizes web content

Pipeline steps

Load base model and apply LoRA adapters

Load dataset and format using Alpaca-style instructions

Fine-tune with Unsloth on T4 GPUs (via Colab)

Track training + eval metrics with Comet

If performance is good, push to Hugging Face model registry

If not, iterate with new data or hyperparameters
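Two of these steps are easy to sketch without a GPU: formatting a sample with the Alpaca-style template and deciding whether a run is good enough to publish. The instruction text, the metric name, and the thresholds below are hypothetical, and the EOS token should come from your tokenizer rather than being hard-coded.

```python
ALPACA_TEMPLATE = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n"
    "### Input:\n{input}\n\n"
    "### Response:\n{output}"
)

# Hypothetical instruction for the summarization task.
SUMMARIZE_INSTRUCTION = "Summarize the following document."


def format_sample(document: str, summary: str, eos_token: str) -> str:
    """Render one (document, summary) pair as an Alpaca-style training text.

    The EOS token is appended so the model learns where the answer ends.
    """
    text = ALPACA_TEMPLATE.format(
        instruction=SUMMARIZE_INSTRUCTION, input=document, output=summary
    )
    return text + eos_token


def should_publish(eval_metrics: dict[str, float], min_scores: dict[str, float]) -> bool:
    """Publish only if every required metric meets its minimum score.

    A missing metric counts as a failure.
    """
    return all(
        eval_metrics.get(name, float("-inf")) >= threshold
        for name, threshold in min_scores.items()
    )
```

If `should_publish` returns `False`, you go back to the data or the hyperparameters instead of pushing the weights to the model registry.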

Most research happens in Notebooks (and that’s okay).

So we kept our training pipeline in a Jupyter Notebook on Colab.

Why?

Let researchers feel at home

No SSH friction

Visualize results fast

Enable rapid iteration

Plug into the rest of the system via registries

Just because it’s manual doesn’t mean it’s isolated.

Here's how it connects:

Data registry: feeds in the right fine-tuning set

Model registry: stores the fine-tuned weights

Inference service: loads the fine-tuned model from the model registry, and from nowhere else

Eval tracker: logs metrics + compares runs in real-time

The Notebook stays decoupled from the rest of the LLM system: it only ever talks to it through these registries.
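Here is a toy sketch of that decoupling. The in-memory registry, the function names, and the artifact names are all hypothetical stand-ins: in a real setup the registries would be external services, and the "fine-tuning" line would be an actual training run.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class InMemoryRegistry:
    """Toy stand-in for a data or model registry with versioned artifacts."""

    _store: dict[str, list[object]] = field(default_factory=dict)

    def push(self, name: str, artifact: object) -> int:
        """Store a new version and return its 1-based version number."""
        versions = self._store.setdefault(name, [])
        versions.append(artifact)
        return len(versions)

    def pull(self, name: str, version: Optional[int] = None) -> object:
        """Fetch a specific version, or the latest when version is None."""
        versions = self._store[name]
        return versions[-1] if version is None else versions[version - 1]


def training_job(
    data_registry: InMemoryRegistry,
    model_registry: InMemoryRegistry,
    dataset_name: str,
    base_model_name: str,
    output_name: str,
) -> int:
    """Read inputs from registries, write the result back to the model registry."""
    dataset = data_registry.pull(dataset_name)
    base_model = model_registry.pull(base_model_name)
    # Placeholder for the real fine-tuning step.
    fine_tuned = {"base": base_model, "trained_on": dataset}
    return model_registry.push(output_name, fine_tuned)


def inference_service(model_registry: InMemoryRegistry, model_name: str) -> object:
    """The serving side knows nothing about training, only the registry."""
    return model_registry.pull(model_name)
```

Because both sides share only registry names and versions, you can swap the Notebook for an automated pipeline later without touching the inference service.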

Can it be automated?

Yes... and we’re almost there.

With ZenML already managing our offline pipelines, the training code can be converted to a deployable pipeline.

The only barrier? Cost and compute.

That’s why continuous training (CT) in the LLM space is still more of a dream than something you would actually want to run in practice.

TL;DR: If you’re thinking of training your own LLMs, don’t just ask “How do I fine-tune this?”

Ask:

How does it integrate?

What data version did I use?

Where do I store the weights?

How do I track experiments across runs?

How can I detach the fine-tuning from deployment?

That’s what separates model builders from AI engineers.

Complete breakdown in Lesson 4 of the Second Brain free course ↓↓↓

Whenever you’re ready, there are 3 ways we can help you:

Perks: Exclusive discounts on our recommended learning resources (books, live courses, self-paced courses and learning platforms).

The LLM Engineer’s Handbook: Our bestselling book, which teaches an end-to-end framework for building production-ready LLM and RAG applications, from data collection to deployment (get up to 20% off using our discount code).

Free open-source courses: Master production AI with our end-to-end open-source courses, which reflect real-world AI projects and cover everything from system architecture to data collection, training and deployment.

Images

If not otherwise stated, all images are created by the author.