AI off-the-shelf tools can't keep up

The most complex step in AI/ML in production? Why text-to-SQL is NOT the future of tabular data search

This week’s topics:

Why text-to-SQL is NOT the future of tabular data search

AI off-the-shelf tools can't keep up

The most complex step in AI/ML in production?

Why text-to-SQL is NOT the future of tabular data search

I’ll say it (and it may get under a few people's skin):

Text-to-SQL is not the future of tabular data search.

Instead, tabular semantic search combined with natural-language queries is 10x more powerful.

This approach leverages multi-attribute vector indexes that integrate all the columns of your tabular dataset—both structured and unstructured.

It’s redefining how we retrieve relevant products from massive datasets.

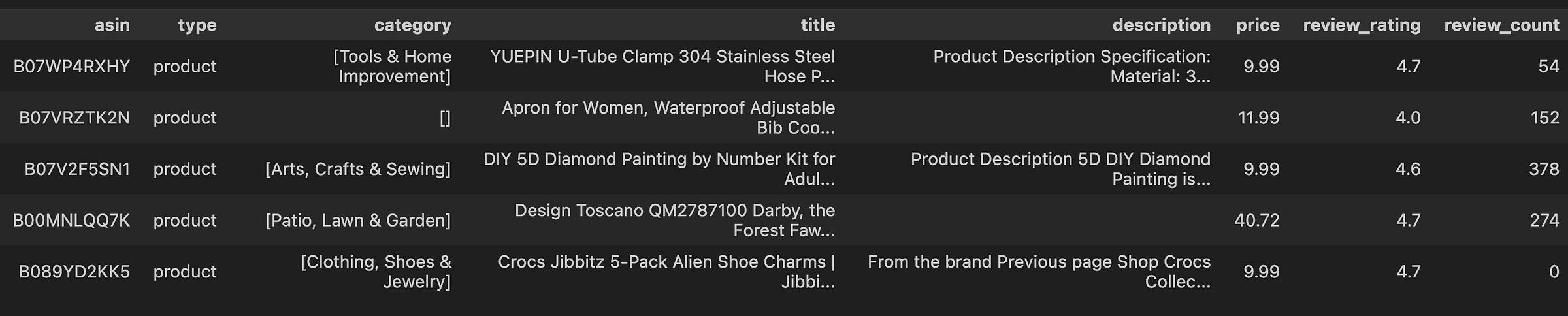

Let me show you how to build these multi-attribute indexes for Amazon e-commerce products using Superlinked and MongoDB Atlas vector search.

Step 1: Prepare the dataset

Before building a multi-attribute vector index, you must clean and preprocess the dataset. This involves:

Handling NaNs

Converting data types

Removing outliers
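Here is a minimal, dependency-free sketch of these three steps. It assumes the raw rows arrive as dictionaries of strings (e.g., from a CSV export). The field names mirror the schema used later, but the sample rows, the `|` category separator, dropping rows without a price, the -1.0 placeholder for missing ratings (chosen to match the rating space's lower bound), and the 1.5 * IQR outlier rule are all illustrative assumptions, not the original project's choices. In practice, you would likely do this with pandas.

```python
import math
import statistics

# Hypothetical raw rows, e.g. straight from a CSV export where every value is a string.
RAW_ROWS = [
    {"description": " Gaming laptop ", "category": "Electronics|Laptops", "price": "1299.99", "review_rating": "4.5"},
    {"description": "USB-C cable", "category": "Electronics", "price": "9.99", "review_rating": ""},
    {"description": None, "category": "", "price": "nan", "review_rating": "3.0"},
    {"description": "Wireless mouse", "category": "Electronics|Accessories", "price": "24.50", "review_rating": "4.1"},
    {"description": "Mechanical keyboard", "category": "Electronics|Accessories", "price": "89.00", "review_rating": "4.7"},
    {"description": "Laptop stand", "category": "Accessories", "price": "35.00", "review_rating": "4.2"},
    {"description": "Mislabeled listing", "category": "Electronics", "price": "999999.0", "review_rating": "1.0"},
]

MISSING_RATING = -1.0  # assumed placeholder, matching the rating space's lower bound


def to_float(value):
    """Convert a raw value to float; empty, None, or unparsable values become NaN."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return math.nan


def clean_rows(rows):
    """Handle NaNs and convert each row to the types the schema expects."""
    cleaned = []
    for row in rows:
        price = to_float(row.get("price"))
        if math.isnan(price):
            continue  # assumption: rows without a price are dropped
        rating = to_float(row.get("review_rating"))
        categories = (row.get("category") or "").split("|")
        cleaned.append({
            "description": (row.get("description") or "").strip(),
            "category": [c.strip() for c in categories if c.strip()],
            "price": price,
            "review_rating": MISSING_RATING if math.isnan(rating) else rating,
        })
    return cleaned


def remove_price_outliers(rows, k=1.5):
    """Keep rows whose price lies within [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = statistics.quantiles([r["price"] for r in rows], n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [r for r in rows if low <= r["price"] <= high]


products = remove_price_outliers(clean_rows(RAW_ROWS))
```

The row without a price is dropped, the missing rating becomes -1.0, and the mislabeled 999999.0 listing falls outside the IQR bounds.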

Why is there no chunking?

With tabular data, there’s no need to chunk rows. Each row represents a distinct product entry.

Step 2: Define embedding spaces for your tabular data

The key is to search and rank products on multiple attributes at once, so that the interactions between them (e.g., a strong text match that is also cheap and well rated) can capture complex relationships.

For example, in an Amazon product dataset, here’s how the data is broken down:

Text fields (like product titles and descriptions):

Embedded with text embedding models such as Alibaba-NLP/gte-large-en-v1.5.

Categorical data (like product categories):

Mapped to a CategoricalSimilaritySpace, which matches identical or similar categories (e.g., laptop, electronics).

Numerical values (like price and ratings):

Represented in NumberSpaces, which push results toward minimizing (e.g., price) or maximizing (e.g., review ratings) a numeric attribute.

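```python
from superlinked import framework as sl

# Illustrative subset; the original code loads this list from constants.CATEGORIES.
CATEGORIES = ["Electronics", "Computers", "Laptops", "Accessories"]


class ProductSchema(sl.Schema):
    id: sl.IdField  # Superlinked schemas need exactly one ID field
    category: sl.StringList
    description: sl.String
    review_rating: sl.Float
    price: sl.Float  # required by the price space used in the index


product = ProductSchema()

# Matches identical or similar categories; unknown ones get their own bucket.
category_space = sl.CategoricalSimilaritySpace(
    category_input=product.category,
    categories=CATEGORIES,
    uncategorized_as_category=True,
    negative_filter=-1,
)

# Semantic similarity over the free-text product description.
description_space = sl.TextSimilaritySpace(
    text=product.description, model="Alibaba-NLP/gte-large-en-v1.5"
)

# Favors higher review ratings on a -1.0 to 5.0 scale.
review_rating_maximizer_space = sl.NumberSpace(
    number=product.review_rating, min_value=-1.0, max_value=5.0, mode=sl.Mode.MAXIMUM
)

# Favors lower prices; these bounds are assumptions, set them to your data's range.
price_minimizer_space = sl.NumberSpace(
    number=product.price, min_value=0.0, max_value=1000.0, mode=sl.Mode.MINIMUM
)
```

Step 3: Bring it all together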

Finally, we combine all these similarity spaces into a single multi-attribute product index.

This allows for the creation of complex queries that simultaneously optimize for:

Text relevance

Category matching

Numerical goals (e.g., maximizing reviews or minimizing prices)

At query time, we can weight each attribute embedding, giving certain attributes more (or less) relevance for a particular search. This enables quick experimentation without the need for reindexing.
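To see why reweighting doesn't require reindexing, here is a toy, library-free sketch of the general idea behind weighted multi-attribute vectors. It is not Superlinked's implementation: each product is stored once as a concatenation of unit-length per-attribute vectors, and the query weights only scale the query's segments, so the dot product becomes a weighted sum of per-attribute cosine similarities. The space names, products, and 2-D embeddings are made up for illustration.

```python
import math

# Hypothetical attribute spaces, in a fixed order so vectors line up.
SPACES = ["description", "category", "rating"]


def unit(v):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm else list(v)


def item_vector(parts):
    """Indexed once per product: concatenation of unit-length per-space vectors."""
    vec = []
    for space in SPACES:
        vec.extend(unit(parts[space]))
    return vec


def query_vector(parts, weights):
    """Built per query: each space's segment is scaled by its weight (default 1.0)."""
    vec = []
    for space in SPACES:
        w = weights.get(space, 1.0)
        vec.extend(w * x for x in unit(parts[space]))
    return vec


def rank(index, query, weights):
    """Sort product ids by dot product, i.e. the weighted sum of per-space similarities."""
    q = query_vector(query, weights)

    def score(pid):
        return sum(a * b for a, b in zip(q, index[pid]))

    return sorted(index, key=score, reverse=True)


# Toy 2-D embeddings; for "rating", [1, 0] stands for a high rating.
PRODUCTS = {
    "laptop_a": {"description": [1.0, 0.0], "category": [1.0, 0.0], "rating": [0.0, 1.0]},
    "laptop_b": {"description": [0.6, 0.8], "category": [1.0, 0.0], "rating": [1.0, 0.0]},
}
INDEX = {pid: item_vector(parts) for pid, parts in PRODUCTS.items()}  # built once

QUERY = {"description": [1.0, 0.0], "category": [1.0, 0.0], "rating": [1.0, 0.0]}
print(rank(INDEX, QUERY, {"rating": 0.1}))  # → ['laptop_a', 'laptop_b']
print(rank(INDEX, QUERY, {"rating": 1.0}))  # → ['laptop_b', 'laptop_a']
```

With a low rating weight, the better text match (`laptop_a`) wins; raising the rating weight flips the order, while the stored `INDEX` vectors never change.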

Because ranking happens inside the vector search itself, we also drop the separate post-processing (re-ranking) step and its engineering overhead.

The result? Efficiently searching millions of products and returning a highly relevant subset—all while balancing multiple attributes.

```python
# Combine all similarity spaces into a single multi-attribute index.
product_index = sl.Index(
    spaces=[
        category_space,
        description_space,
        review_rating_maximizer_space,
        price_minimizer_space,
    ]
)
```

AI off-the-shelf tools can't keep up (Sponsored)

With fraud attacks evolving faster than ever, businesses find that off-the-shelf solutions aren't always enough.

To stay ahead, teams need the agility to deploy custom detection models using their unique data and domain expertise.

The goal is to build a new data/ML pipeline in days, not months!

Building these capabilities—whether for transaction fraud detection, ML monitoring, or risk decisioning—requires integrating streaming transactions, historical patterns, and third-party signals at millisecond latencies.

Yet even with strong data science teams and promising models, organizations struggle to productionize these advanced fraud detection systems.

The challenge lies in building and maintaining production-grade data infrastructure that can scale reliably while meeting strict latency requirements.

Tecton is hosting a FREE virtual talk on how leading organizations such as Coinbase, Plaid, and Tide are improving their fraud detection capabilities by modernizing their data infrastructure.

Through technical deep-dives and real-world examples, we'll explore:

Common challenges and best practices for productionizing fraud detection data

Approaches to unifying batch and streaming data processing

Methods for ensuring the delivery of fresh features at scale

Strategies for reducing data engineering complexity without compromising reliability

You'll learn proven approaches for building fraud detection infrastructure that can adapt as quickly as fraud tactics evolve, drawing from examples of organizations that have reduced their model deployment time from months to minutes.

P.S. Over the past few months, I've had fantastic conversations with Sergio Ferragut, one of the webinar hosts, about feature platforms, MLOps, and ML systems. He is a great teacher and knows what he is talking about!

The free webinar starts on January 28 (in 3 days). There's still enough time to join ↓

The most complex step in AI/ML in production?

You can probably guess: serving the ML models.

Why is it so challenging?

Because you have to integrate all the pieces into a unified system while considering:

Throughput/latency requirements

Infrastructure costs

Data and model access

Training-serving skew

The good news? We’ve released the fourth lesson from the H&M Real-Time Personalized Recommender open-source course.

In this lesson, we show you how to build and deploy scalable inference pipelines.

Since we started this project with production in mind by using the Hopsworks AI Lakehouse, we’ve been able to bypass most of these challenges. For instance:

The query and ranking models are accessed from the model registry.

Customer and H&M article features are accessed from the feature store using the offline and online stores, depending on throughput/latency requirements.

Features are accessed from a single source of truth (the feature store), which eliminates training-serving skew.
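The request flow these pieces enable can be sketched as a two-stage retrieve-then-rank pipeline. Everything below is hypothetical: plain dictionaries stand in for the Hopsworks online feature store, toy functions stand in for the query and ranking models pulled from the model registry, and a brute-force dot product stands in for vector search. It shows the shape of an online inference pipeline, not the course's actual code or the Hopsworks API.

```python
# Hypothetical stand-ins for the online feature store and the registered models.
CUSTOMER_FEATURES = {"c1": {"club_member": 1.0, "age": 0.3}}
ARTICLE_FEATURES = {
    "a1": {"embedding": [1.0, 0.0], "price": 0.6},
    "a2": {"embedding": [0.7, 0.7], "price": 0.1},
    "a3": {"embedding": [0.0, 1.0], "price": 0.2},
}


def query_model(customer):
    """Toy query tower: map customer features to an embedding."""
    return [customer["club_member"], customer["age"]]


def ranking_model(article, retrieval_score):
    """Toy ranker: retrieval similarity minus a price penalty."""
    return retrieval_score - 0.5 * article["price"]


def recommend(customer_id, num_candidates=2, k=2):
    customer = CUSTOMER_FEATURES[customer_id]  # low-latency online feature lookup
    query = query_model(customer)
    # Stage 1, retrieval: score every article embedding against the query.
    retrieval = {
        aid: sum(q * a for q, a in zip(query, feats["embedding"]))
        for aid, feats in ARTICLE_FEATURES.items()
    }
    candidates = sorted(retrieval, key=retrieval.get, reverse=True)[:num_candidates]
    # Stage 2, ranking: re-score only the short candidate list.
    ranked = sorted(
        candidates,
        key=lambda aid: ranking_model(ARTICLE_FEATURES[aid], retrieval[aid]),
        reverse=True,
    )
    return ranked[:k]


print(recommend("c1"))  # → ['a2', 'a1']
```

Retrieval keeps `a1` and `a2`; the ranker then promotes the cheaper `a2`, which is the kind of reordering a dedicated ranking stage is there for.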

Estimating infrastructure costs in a proof of concept (PoC) is more complicated.

However, we leverage a Kubernetes cluster managed by Hopsworks, which uses KServe to scale our real-time personalized recommender up and down based on traffic.
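As a rough mental model of traffic-based scaling: when KServe runs in its serverless (Knative-based) mode, the default autoscaler targets a number of concurrent requests per replica. The function below is a simplified sketch of that rule under stated assumptions (no stable/panic windows, no scale-down delay); the parameter names are illustrative, not KServe configuration keys.

```python
import math


def desired_replicas(in_flight_requests, target_per_replica, min_replicas=0, max_replicas=10):
    """Simplified concurrency-based autoscaling: enough replicas so each handles
    about `target_per_replica` concurrent requests, clamped to [min, max]."""
    if target_per_replica <= 0:
        raise ValueError("target_per_replica must be positive")
    wanted = math.ceil(in_flight_requests / target_per_replica)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(25, 10))  # → 3
```

With `min_replicas=0`, idle traffic scales the deployment to zero, which is one lever for keeping PoC infrastructure costs down.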

What will you learn in the fourth lesson of the H&M recommender course?

In this lesson, you’ll learn how to:

Architect offline and online inference pipelines using MLOps best practices.

Implement offline and online pipelines for an H&M real-time personalized recommender.

Deploy the online inference pipeline using the KServe engine.

Test the H&M personalized recommender from a Streamlit app.

Deploy the offline ML pipelines using GitHub Actions.

Images

If not otherwise stated, all images are created by the author.
