Build a TikTok-like recommender from scratch!

RAG fundamentals first: Why use RAG and not fine-tune your LLMs?

Quick questions from Decoding ML for you

We will post different content on Substack’s social feed along with our blog and newsletter articles.


This week’s topics:

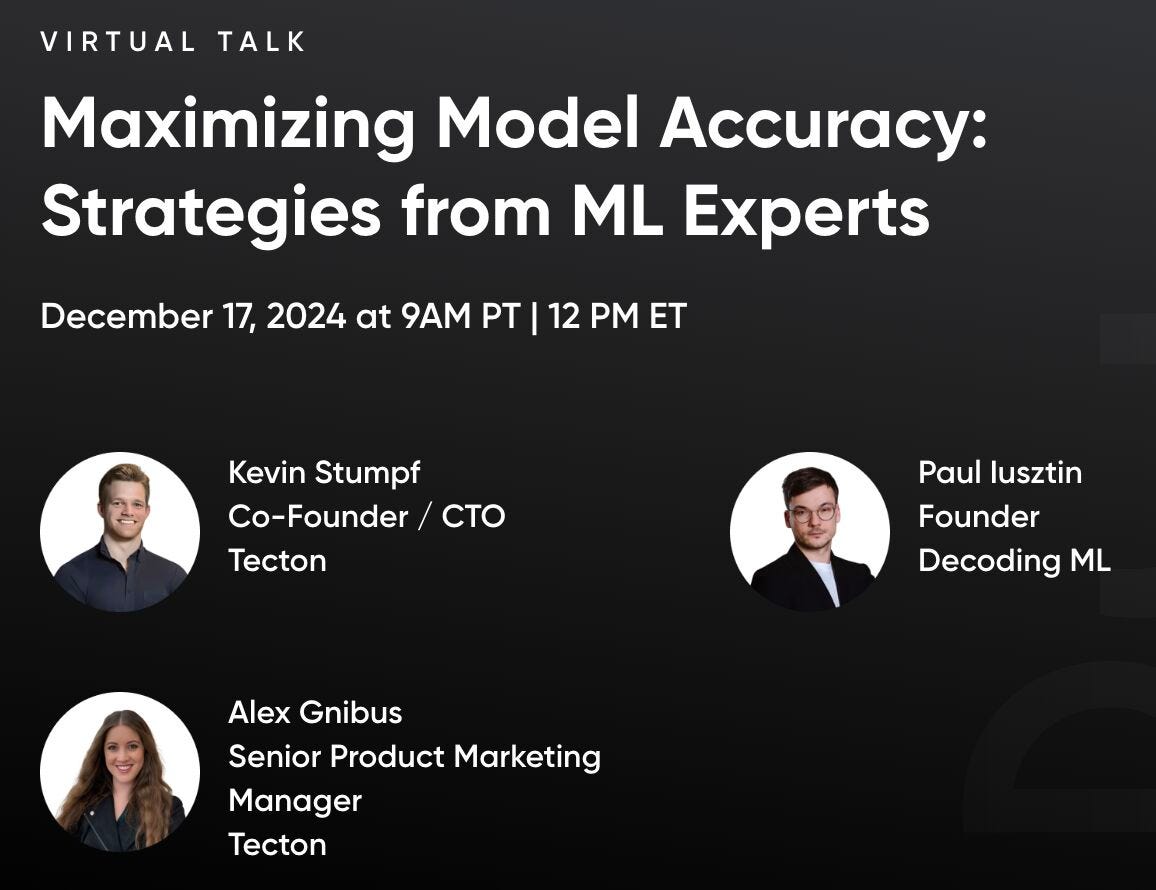

- Maximizing model accuracy: Strategies from ML experts

- RAG fundamentals first: Why use RAG and not fine-tune your LLMs?

- Build your own TikTok-style recommender from scratch!

Maximizing model accuracy: Strategies from ML experts

91% of models degrade in production. I will participate in a webinar about maximizing model accuracy while in production: a must-have skill for real-world AI applications.

Tecton will host the webinar, a conversation between Kevin Stumpf (Co-Founder / CTO at Tecton) and me.

During the webinar, we will talk about topics such as:

- What are the "silent killers" of accuracy?

- What goes wrong when you go from training to serving?

- How do you balance accuracy goals with resource constraints (e.g., costs, available talent)?

We will explore architectural patterns to solve these issues.

This is not GenAI, but it's valuable information that applies to any AI system...

...as model degradation is present in most AI applications: 91% of models degrade over time.

🕰️ The panel talk will take place virtually on Tuesday, Dec. 17.

RAG fundamentals first: Why use RAG and not fine-tune your LLMs?

Retrieval-augmented generation (RAG) enhances the accuracy and reliability of generative AI models with information fetched from external sources.

It is a technique that complements the LLM's internal knowledge.

*There are two fundamental problems that RAG solves:*

1. Hallucinations

2. Old or private information

> **1. Hallucinations**

If a chatbot without RAG is asked a question about something it wasn’t trained on, there is a big chance it will give you a confident answer that isn’t true.

Let’s take the EURO 2024 soccer tournament as an example. If the model’s training data ends in October 2023 and we ask something about the tournament, it will most likely make up an answer that is hard to tell apart from reality.

Even if the LLM doesn’t hallucinate constantly, it raises the concern of trust in its answers.

Thus, we must ask ourselves: “*When can we trust the LLM’s answers?*” and “*How can we evaluate if the answers are correct?*”

By introducing RAG, we instruct the LLM to always answer solely based on the provided context.

The LLM will act as the reasoning engine, while the additional information added through RAG will be the single source of truth for the generated answer.

By doing so, we can quickly evaluate if the LLM’s answer is based on the external data or not.
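To make the evaluation idea concrete, here is a minimal sketch of a grounding check. It assumes a naive token-overlap metric (our own simplification, not a standard RAG evaluation method), and the `tokenize` and `grounding_score` helpers, the context, and both answers are hypothetical examples.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def grounding_score(answer: str, context: str) -> float:
    """Return the fraction of answer tokens that also appear in the context."""
    answer_tokens = tokenize(answer)
    if not answer_tokens:
        return 0.0
    return len(answer_tokens & tokenize(context)) / len(answer_tokens)


# Hypothetical retrieved context and two candidate answers (illustrative only).
context = "Spain won EURO 2024 after beating England 2-1 in the final in Berlin."
grounded_answer = "Spain won EURO 2024, beating England in the final."
ungrounded_answer = "Italy lifted the trophy after a penalty shootout against France."

print(grounding_score(grounded_answer, context))    # high overlap with the context
print(grounding_score(ungrounded_answer, context))  # low overlap with the context
```

A low score flags an answer that is probably not based on the retrieved context. Real systems would likely swap the token overlap for something stronger, such as an LLM judge or an entailment model, but the principle is the same: the context gives you something to check the answer against.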

> **2. Old or private information**

Any LLM is trained or fine-tuned on a subset of the total world knowledge dataset.

This is due to three main issues:

1. **Private data**: You cannot train your model on data you don’t own or have the right to use.

2. **New data**: New data is generated every second. Thus, you would have to retrain your LLM constantly to keep up.

3. **Costs**: Training or fine-tuning an LLM is a highly costly operation. Hence, it is not feasible to do it hourly or daily.

RAG solves these issues, as you no longer have to constantly fine-tune your LLM on new data (or even private data).

Directly injecting the necessary data to respond to user questions into the prompts fed to the LLM is enough to generate correct and valuable answers.
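As a rough illustration of injecting data into the prompt, the sketch below retrieves the most relevant documents and places them in front of the question. It assumes a toy keyword-overlap retriever over an in-memory list; production systems typically use embeddings and a vector database instead. The `retrieve` and `build_prompt` helpers, the prompt wording, and the documents are all hypothetical.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of alphanumeric tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], top_k: int = 2) -> list[str]:
    """Rank documents by how many tokens they share with the question."""
    question_tokens = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_tokens & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Inject the retrieved chunks into the prompt that is sent to the LLM."""
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    return (
        "Answer the question using only the context below. "
        "If the context is not enough, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


# Hypothetical knowledge base containing data newer than the model's training cutoff.
documents = [
    "Spain won EURO 2024 after beating England 2-1 in the final.",
    "The EURO 2024 final was played in Berlin on July 14, 2024.",
    "Polars is a DataFrame library written in Rust.",
]
question = "Who won the EURO 2024 final?"
prompt = build_prompt(question, retrieve(question, documents))
print(prompt)
```

The resulting `prompt` string is what you would pass to whichever LLM client you use. Updating the knowledge base is now a matter of adding documents, with no retraining involved.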

For an in-depth article on the RAG fundamentals, consider reading our article:

Build your own TikTok-style recommender from scratch!

We just released a free, production-ready course using H&M's data to build real-time personalized recommenders.

The 5-lesson course has written tutorials, notebooks, Python code, and cloud deployments.


What you'll learn:

The course will focus on engineering a production-ready recommender system touching:

- ML system design

- Real-time personalized recommender architectures

- MLOps best practices (feature store, model registry)

What you'll build:

- A production-ready recommender system with real-time personalization

- 4-stage recommender architecture for instant recommendations

- Two-tower model for user and fashion item embeddings

- LLM-enhanced recommendation system
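If the two-tower idea is new to you, here is a minimal sketch of how it works. This is not the course's implementation: it assumes each tower is a single linear layer with hand-picked weights (a real model learns them from user-item interactions), and the feature names, weights, and items are hypothetical.

```python
def linear_tower(features: list[float], weights: list[list[float]]) -> list[float]:
    """Project raw features into the shared embedding space (one linear layer)."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def dot(a: list[float], b: list[float]) -> float:
    """Similarity score between a user embedding and an item embedding."""
    return sum(x * y for x, y in zip(a, b))


# Hypothetical, hand-picked weights; a trained model would learn these.
# User features: [likes_sport, likes_formal]
USER_WEIGHTS = [[1.0, 0.0], [0.0, 1.0]]
# Item features: [is_sport, is_formal, price_bucket]
ITEM_WEIGHTS = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]

# Hypothetical fashion catalog with raw item features.
items = {
    "running shorts": [1.0, 0.0, 0.2],
    "blazer": [0.0, 1.0, 0.8],
    "track jacket": [0.8, 0.2, 0.5],
}


def recommend(user_features: list[float], top_k: int = 2) -> list[str]:
    """Embed the user, score every item by dot product, and return the top-k names."""
    user_embedding = linear_tower(user_features, USER_WEIGHTS)
    scores = {
        name: dot(user_embedding, linear_tower(features, ITEM_WEIGHTS))
        for name, features in items.items()
    }
    return sorted(scores, key=scores.get, reverse=True)[:top_k]


print(recommend([0.9, 0.1]))  # a user who mostly likes sportswear
```

The key design choice is that users and items are embedded separately into the same space. Item embeddings can therefore be computed ahead of time and indexed, so at request time only the user tower has to run before a nearest-neighbor lookup.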

Tech stack & deployment:

- Feature engineering with Polars

- Real-time serving with KServe

- MLOps infrastructure using Hopsworks

- Offline batch deployments using GitHub Actions

- Interactive UI with Streamlit Cloud

- Dependency management using uv

The outcome will be a deployed personalized recommender you can tinker with.

I've learned a lot from my past open-source courses, and together with Hopsworks, we've made something special. Enjoy!


Images

If not otherwise stated, all images are created by the author.