How to fine-tune LLMs on custom datasets at Scale using Qwak and CometML

How to fine-tune a Mistral7b-Instruct model, leveraging best MLOps practices using Qwak for cloud deployments at scale and CometML for experiment management.

→ the 7th out of 11 lessons of the LLM Twin free course

What is your LLM Twin? It is an AI character that writes like yourself by incorporating your style, personality, and voice into an LLM.

Why is this course different?

By finishing the “LLM Twin: Building Your Production-Ready AI Replica” free course, you will learn how to design, train, and deploy a production-ready LLM twin of yourself powered by LLMs, vector DBs, and LLMOps good practices.

Why should you care? 🫵

→ No more isolated scripts or Notebooks! Learn production ML by building and deploying an end-to-end production-grade LLM system.

More details on what you will learn within the LLM Twin course, here 👈

Latest Lessons of the LLM Twin Course

Lesson 4: Python Streaming Pipelines for Fine-tuning LLMs and RAG - in Real-Time!

→ Feature pipeline, Bytewax streaming engine, Pydantic models, The dispatcher layer

Lesson 5: The 4 Advanced RAG Algorithms You Must Know to Implement

→ RAG System, Qdrant, Query Expansion, Self Query, Filtered vector search

Lesson 6: The Role of Feature Stores in Fine-Tuning LLMs

→ Custom Dataset Generation, Artifact Versioning, GPT3.5-Turbo Distillation, Qdrant

Lesson 7: How to fine-tune LLMs on custom datasets at Scale using Qwak and CometML

In the 7th lesson, we will build the fine-tuning pipeline, use the versioned datasets we’ve logged on CometML, compose the workflow, and deploy the pipeline on Qwak to fine-tune our model.

Completing this lesson, you’ll gain a solid understanding of the following:

what is Qwak AI and how does it help solve MLOps challenges

how to fine-tune a Mistral7b-Instruct on our custom llm-twin dataset

what purpose do QLoRA Adapters and BitsAndBytes configs serve

how to fetch versioned datasets from CometML

how to log training metrics and model to CometML

the detailed walkthrough of how the Qwak build system works

Table of Contents:

What is LLM Finetuning

Qwak AI Platform

Mistral7b-Instruct LLM Model

The Finetuning Pipeline

Experiment Tracking with CometML

Conclusion

1. What is LLM Finetuning

Represents the process of taking pre-trained models and further training them on smaller, specific datasets to refine their capabilities and improve performance in a particular task or domain.

Foundation models know a lot about a lot, but for production, we need models that know a lot about a little.

Here are the common components of efficient fine-tuning:

🔗 PEFT (parameter-efficient-fine-tuning)

A technique designed to adapt large pre-trained models to new tasks with minimal computational overhead and memory usage🔗 QLoRA (Quantized Low-Rank Adaptation)

A specific PEFT technique which enhances the efficiency of fine-tuning LMs by introducing low-rank matrices into the model’s architecture, capturing task-specific information without altering the core model weights.🔗 BitsAndBytes

Library designed to optimize the memory usage and computational efficiency of large models by employing low-precision arithmetic.

2. Qwak AI Platform

An ML engineering platform that simplifies the process of building, deploying, and monitoring machine learning models, bridging the gap between data scientists and engineers.

🔗 For more details, see Qwak.

Key points within the Qwak ML Lifecycle:

Deploying and iterating on your models faster

Testing, serializing, and packaging your models using a flexible build mechanism

Deploying models as REST endpoints or streaming applications

Gradually deploying and A/B testing your models in production

Build and Deployment versioning

Qwak provides both CPU and GPU-powered instances based on the QPU quota.

The QPU stands for qwak-processing-unit and helps users manage their platform quota.

Cost: Qwak offers free 100QPU/month!

A QPU is the equivalent of 4 CPUs with 16 GB RAM, which costs $1.2/hour.

2.1 The Build Lifecycle

To build and deploy on Qwak, we would have to wrap our functionality (e.g. inference/training) as a QwakModel. The QwakModel is an interface with the following methods that are required to be implemented:

[QwakModel] class implements these methods:

|

|-- build - called on `qwak build .. from cli` at build time.

|-- schema - specifies model inputs and outputs

|-- initialize_model - invoked when model is loaded at serving time.

|-- predict - invoked on each request to the deployment's endpoint.

! Important

The predict method is decorated with qwak.api() which provides qwak_analytics

on model inference requests.The stages run in this order:

Get the required instance (CPU/GPU)

Prepare environment (install requirements)

Build Function (downloads LLM model)

Testing (runs defined UT and Integration Tests)

Serialization (serializes the build into a deployable image)

Validating (wraps the image with uvicorn, and pings it for health)

Pushing the artifact to Qwak registry for version control and tracking.

3. Mistral7b-Instruct LLM Model

In our use case, we’ll fine-tune a Mistral7b-Instruct model on our custom dataset that we prepared in the previous lesson, Lesson 6.

Model Card

Mistral 7B is a 7 billion parameter LM that outperforms Llama 2 13B on all benchmarks and rivals Llama 1 34B in many areas. It features Grouped-query attention for faster inference and Sliding Window Attention for handling longer sequences efficiently. It’s released under the Apache 2.0 license.

Special Tokens

For Mistral7b Instruct, the special_tokens_map.json includes the following tokens "bos_token": "<s>", "eos_token": "</s>", and "unk_token": "<unk>". These tokens define the start and end delimiters for prompts.

Apart from that, two new tokens [INST] and [/INST] are used within the prompt scope <s>[INST]....[/INST]</s>. Since the model is instruction-based, these tokens help separate the instructions, improving the model's ability to understand and respond to them effectively.

🔗 Find more about Mistral7b’s Instruction Format.

4. The Finetuning Pipeline

As the starting point, here’s how our fine-tuning module’s folder structure would look like:

|--finetuning/

| |__ __init.py__

| |__ config.yaml

| |__ dataset_client.py

| |__ model.py

| |__ requirements.txt

| |__ settings.py

|

|__ .env

|__ build_config.yaml

|__ Makefile

|__ test_local.pyHere, we will focus on the following implementations:

config.yaml - fine-tuning configuration (epochs, steps, checkpointing)

dataset_client.yaml - functionality to download the versioned dataset from CometML and split it into train/val.

model.py - main functionality of the fine-tuning pipeline.

Prepare PEFT, QLora, and BitsAndBytes configs

Model Preparation/Download, Dataset Preparation, Training Loop

Logging training metrics/parameters to CometML

Fine-tuning sample formatting using a prompt template

Now, diving into what the model’s definition as a QwakModel looks like:

class CopywriterMistralModel(QwakModel):

def __init__(

self,

is_saved: bool = False,

model_save_dir: str = "./model",

model_type: str = "mistralai/Mistral-7B-Instruct-v0.1",

comet_artifact_name: str = "cleaned_posts",

config_file: str = "./finetuning/config.yaml",

):

def _prep_environment(self):

def _init_4bit_config(self):

def _initialize_qlora(self, model: PreTrainedModel) -> PeftModel:

def _init_trainig_args(self):

def _remove_model_class_attributes(self):

def load_dataset(self) -> DatasetDict:

def preprocess_data_split(self, raw_datasets: DatasetDict):

def generate_prompt(self, sample: dict) -> dict:

def tokenize(self, prompt: str) -> dict:

def init_model(self):

def build(self):

def initialize_model(self):

def schema(self) -> ModelSchema:

@qwak.api(output_adapter=DefaultOutputAdapter())

def predict(self, df):Check the full implementation: 🔗 Here

Once, we’ve implemented the fine-tuning logic and have wrapped it as a QwakModel, we have to trigger a remote Qwak build to deploy our pipeline.

To do that, we’ll use the build_config.yaml.

build_env:

docker:

assumed_iam_role_arn: null

base_image: public.ecr.aws/qwak-us-east-1/qwak-base:0.0.13-gpu

cache: true

env_vars:

- HUGGINGFACE_ACCESS_TOKEN=""

- COMET_API_KEY=""

- COMET_WORKSPACE=""

- COMET_PROJECT=""

....

python_env:

dependency_file_path: finetuning/requirements.txt

remote:

is_remote: true

resources:

instance: gpu.a10.2xl

...

build_properties:

branch: finetuning

gpu_compatible: false

model_id: copywriter_model

model_uri:

git_branch: master

main_dir: finetuning

uri: .

tags: []

deploy: false

...

step:

tests: true

validate_build_artifact: true

validate_build_artifact_timeout: 120

verbose: 0In short, this config says:

Install requirements from finetuning/requirements.txt

Use a gpu.a10.2xl

In the QwakUI, register this build under the copywriter_model versioning candidates.

Once the build is complete, validate artifacts and push them to the Qwak Registry.

For the full setup and how to deploy on Qwak, check Lesson 7 Readme.

5. Experiment Tracking with CometML

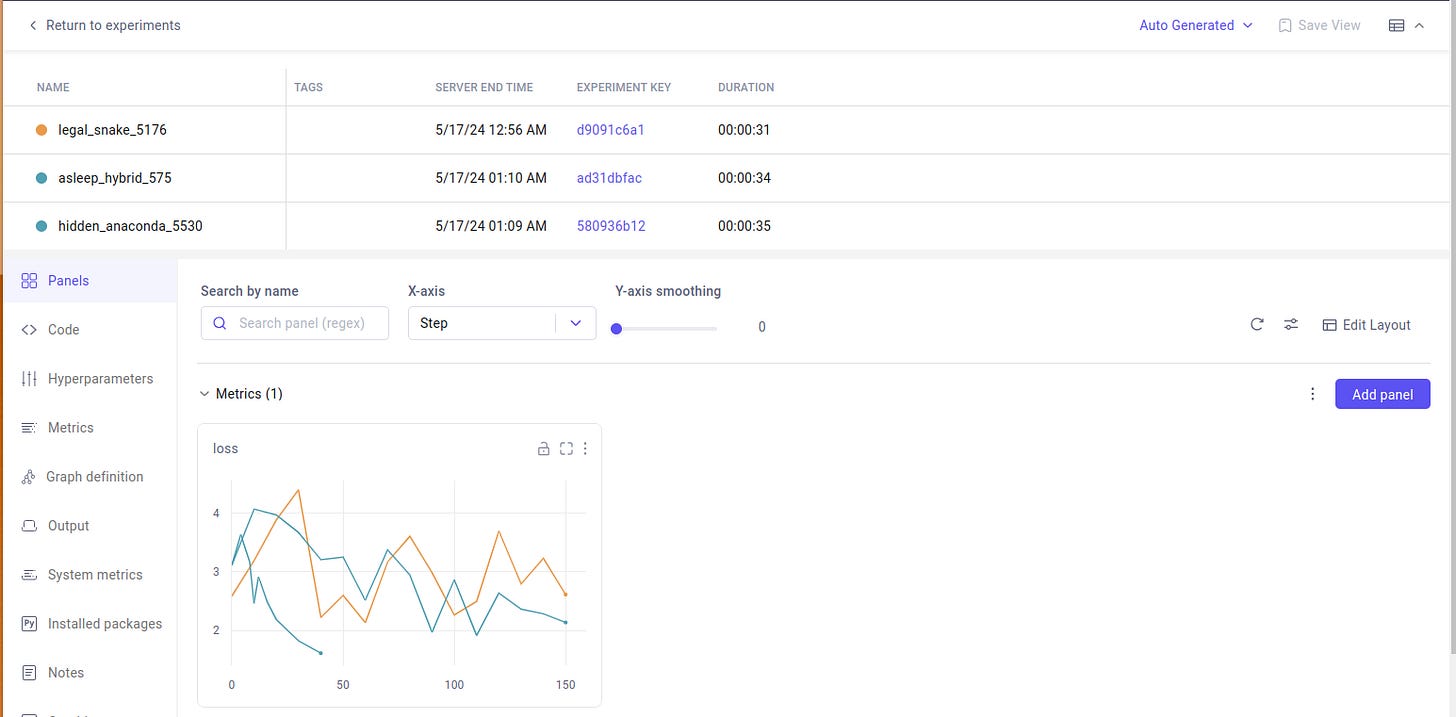

Once we’ve successfully deployed the fine-tuning module, let’s inspect the Experiments we’ve tracked on CometML.

Upon comparing multiple experiments, we can inspect which set of parameters and model respects the key indicators we’re interested in.

With all the features it offers, the extensibility of the UI dashboard, and the dev experience, CometML takes a top spot in the MLOps Lifecycle Modelling Stage.

6. Conclusion

Here’s what we’ve learned in Lesson 7:

The end-to-end fine-tuning process for a Mistral7b-Instruct model on a custom instruct dataset.

How to deploy a fine-tuning pipeline on Qwak.

How to work with versioned datasets and compare training experiments on CometML.

Refresher on PEFT, QLoRA, and the utility of BitsAndBytes within memory-efficient fine-tuning.

In Lesson 8, we’ll cover the evaluation topic. We’ll discuss common evaluation techniques, and traditional metrics, and dive into production-stage recommendations on the topic. See you there!

🔗 Check out the code on GitHub and support us with a ⭐️

Next Steps

Step 1

This is just the short version of Lesson 7 on How to fine-tune LLMs on custom datasets at Scale using Qwak and CometML

→ For…

The full implementation.

Discussion on detailed code

In-depth walkthrough of how Qwak Deployments work.

More insights on how to use CometML Dashboard

Check out the full version of Lesson 7 on our Medium publication. It’s still FREE:

Step 2

→ Check out the LLM Twin GitHub repository and try it yourself 🫵

Nothing compares with getting your hands dirty and building it yourself!

Images

If not otherwise stated, all images are created by the author.